DeepSeek-V3.1 Review: The Rise of Agentic AI and API Flexibility

Released quietly on August 19, 2025, DeepSeek-V3.1 is a 685-billion-parameter model that scores 71.6% on the Aider polyglot coding benchmark while costing roughly 65x less than Claude Opus 4.1 at list API prices. As we explore in our guide on what makes a local LLM, running AI models locally has become crucial for organizations seeking privacy and cost efficiency. But is this hybrid reasoning model the right choice for your next project? Let's look at what makes DeepSeek-V3.1 a potential game-changer in the rapidly evolving AI landscape.

What is DeepSeek-V3.1? Key Features Explained

DeepSeek-V3.1 represents a significant leap forward in open-source AI development, introducing a hybrid reasoning architecture that combines the best of both worlds: fast, direct responses for simple queries and deep, deliberative thinking for complex problems. Unlike its predecessors that required separate models for different tasks, V3.1 unifies these capabilities into a single, versatile system through its innovative dual-mode approach.

Core Technical Specifications

Built on a Mixture-of-Experts (MoE) architecture, DeepSeek-V3.1 boasts 685 billion total parameters while activating only 37 billion per token, ensuring efficient inference despite its massive scale. The model features an extended 128,000-token context window, doubling the capacity from previous versions and enabling analysis of entire codebases, research papers, or lengthy documents without losing coherence.
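Mixture-of-Experts efficiency comes from routing: a small gating network scores the experts for each token, and only the top-scoring few actually run. The toy sketch below illustrates that idea with hypothetical sizes (16 experts, 2 active per token) and simple softmax gating over the selected scores. It is not DeepSeek's implementation; the DeepSeek-V3 technical report describes sigmoid-based affinity scores and a much larger expert pool.

```python
import math
import random

# Toy illustration only: these sizes are hypothetical, not DeepSeek-V3.1's real
# configuration (the model is reported at 685B total / 37B activated parameters).
NUM_EXPERTS = 16  # hypothetical number of routed experts
TOP_K = 2         # hypothetical number of experts activated per token


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, k=TOP_K):
    """Pick the k highest-scoring experts and normalize their gate weights."""
    top = sorted(range(len(router_scores)), key=lambda i: router_scores[i], reverse=True)[:k]
    weights = softmax([router_scores[i] for i in top])
    return dict(zip(top, weights))


def moe_forward(x, experts, router_scores, k=TOP_K):
    """Combine only the selected experts' outputs; unselected experts never run."""
    gates = route_token(router_scores, k)
    return sum(w * experts[i](x) for i, w in gates.items()), gates


# Each "expert" is a scalar function standing in for a feed-forward block.
experts = [lambda x, s=i: (s + 1) * x for i in range(NUM_EXPERTS)]
rng = random.Random(0)
scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
out, gates = moe_forward(1.0, experts, scores)
print(f"experts used: {sorted(gates)}  output: {out:.3f}")
print(f"reported active fraction: {37 / 685:.1%}")
```

Only the selected experts are evaluated per token, which is why compute scales with the activated parameters rather than the total count.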

Key architectural innovations include:

- Hybrid inference modes: Seamless switching between "Think" and "Non-Think" modes via the DeepThink toggle or API selection

- Enhanced tool calling: Post-training optimization significantly improves agentic workflows and multi-step reasoning tasks

- Extended training phases: 840 billion tokens of continued pretraining for long context extension on top of V3

- MIT license: Freely available for commercial use, modification, and redistribution

The permissive license is a strategic decision: it positions DeepSeek-V3.1 as an attractive alternative to premium-priced closed-source competitors.

The "Agentic" Leap: What is Agentic AI and How Does DeepSeek Use It?

Agentic AI represents a fundamental shift from reactive to proactive artificial intelligence. Unlike traditional AI systems that simply respond to prompts, agentic AI systems can plan, reason, and act autonomously to achieve defined goals with minimal human oversight, making them capable of handling complex, multi-step workflows.

Defining Agentic AI Capabilities

According to leading researchers and enterprise implementations, agentic AI systems possess five core characteristics:

- Autonomy: Performing tasks beyond exact assignments with minimal human oversight

- Reasoning: Making contextual decisions through sophisticated decision-making processes

- Adaptable planning: Altering strategies when conditions change

- Context understanding: Comprehending complex scenarios and requirements

- Action-enabled: Delivering tangible solutions through direct intervention
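The plan-act-observe cycle behind these characteristics can be illustrated with a minimal agent loop. In this sketch the "model" is a scripted stand-in and the tools are hypothetical stubs; in a real system the decision step would be an LLM call (for example, to DeepSeek-V3.1 with tool definitions) and the tools would call real services.

```python
from typing import Callable

# Hypothetical tools; a real agent would call external APIs here.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda q: f"3 results for '{q}'",
    "calculator": lambda expr: str(sum(int(t) for t in expr.split("+"))),
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for an LLM: picks the next action based on what it has observed."""
    observations = [h for h in history if h["role"] == "tool"]
    if not observations:
        return {"action": "calculator", "input": "40+2"}
    return {"action": "final", "input": f"The answer is {observations[-1]['content']}"}


def run_agent(goal: str, model=scripted_model, max_steps: int = 5) -> str:
    """Plan-act-observe loop: ask for an action, run the tool, feed the result back."""
    history = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        step = model(history)
        if step["action"] == "final":
            return step["input"]
        tool = TOOLS.get(step["action"])
        result = tool(step["input"]) if tool else f"unknown tool {step['action']}"
        history.append({"role": "tool", "content": result})
    return "stopped: step budget exhausted"


print(run_agent("What is 40 + 2?"))
```

The step budget is the simplest guard against an agent that never reaches a final answer.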

DeepSeek-V3.1's Agentic Implementation

DeepSeek-V3.1's approach to agentic AI centers on its hybrid thinking architecture: a single model serves both a fast non-thinking mode and an extended-reasoning thinking mode. The mode is chosen per request (through the chat template, the DeepThink toggle, or the deepseek-chat and deepseek-reasoner API models), so simple queries can get quick answers while complex problems get deep deliberation, much as people adjust their effort to the task at hand.

The hybrid system operates through:

- Per-request mode selection: Applications choose the appropriate reasoning depth for each query instead of calling separate models

- Enhanced tool integration: Post-training improvements for seamless API integration and function calling

- Multi-step workflows: Improved performance on complex agent tasks like code generation, debugging, and search operations

- Faster thinking: V3.1-Think reaches answers faster than DeepSeek-R1-0528 while maintaining comparable answer quality

Research indicates that this hybrid approach provides significant efficiency gains while maintaining comparable performance to dedicated reasoning models, making agentic workflows both more practical and cost-effective for real-world deployment.
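DeepSeek's API exposes the two modes as separate model names, deepseek-chat and deepseek-reasoner, so an application can decide which one each request uses. The sketch below is a hypothetical client-side router; the keyword list and length threshold are illustrative assumptions, not part of DeepSeek's API.

```python
# Hypothetical heuristic: keywords and threshold are assumptions to tune per workload.
REASONING_HINTS = ("prove", "debug", "step by step", "optimize", "why")


def choose_model(prompt: str, long_prompt_chars: int = 2000) -> str:
    """Route long or reasoning-heavy prompts to thinking mode, the rest to fast mode."""
    text = prompt.lower()
    needs_reasoning = len(prompt) > long_prompt_chars or any(h in text for h in REASONING_HINTS)
    return "deepseek-reasoner" if needs_reasoning else "deepseek-chat"


print(choose_model("What is the capital of France?"))
print(choose_model("Prove that this loop terminates"))
```

The returned name can be passed as the model argument of a Messages API call. A keyword heuristic is crude; the point is only that mode choice is a cheap, explicit decision the caller controls.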

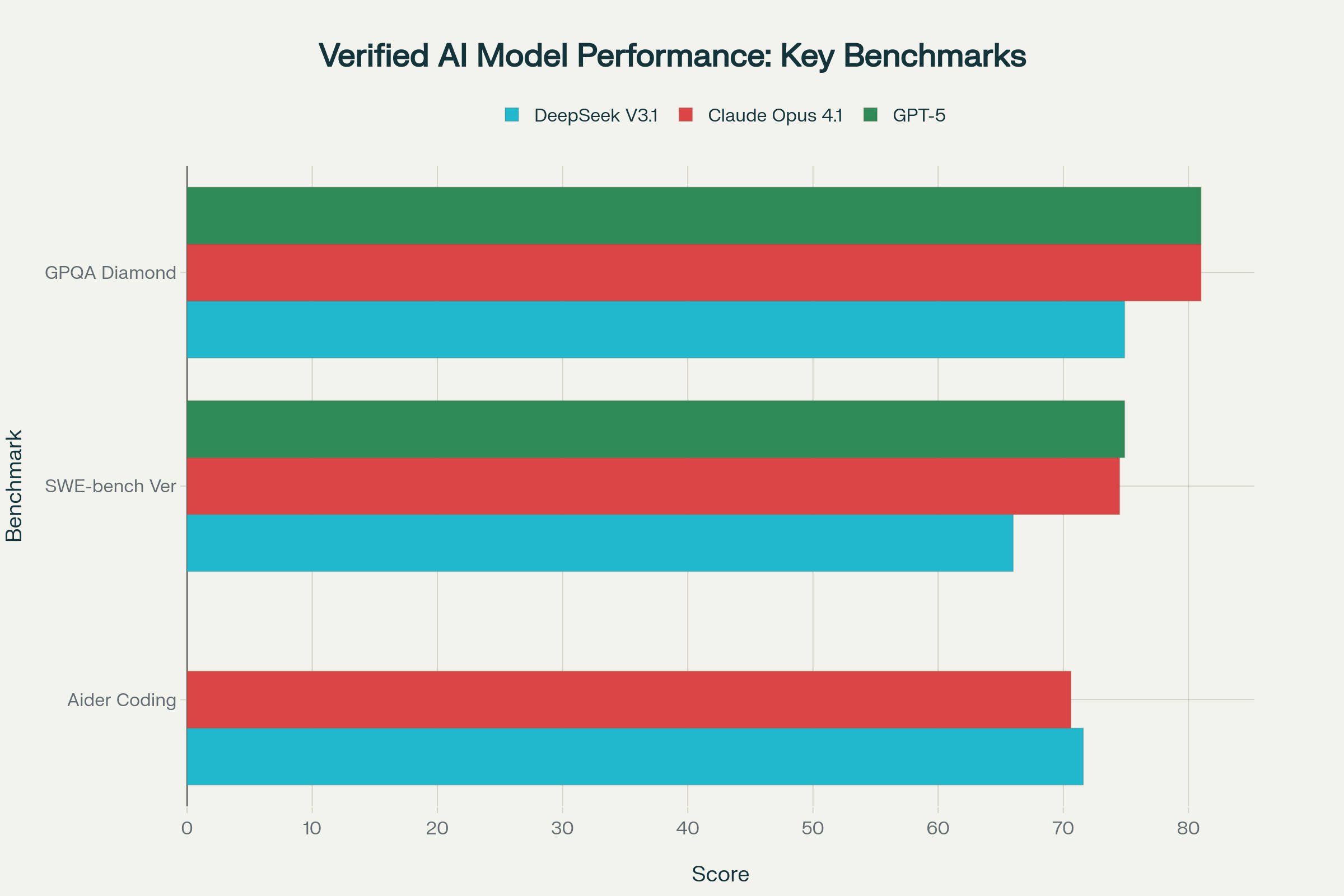

Performance Benchmarks: DeepSeek-V3.1 vs. GPT-5 and Claude Opus 4.1

The true measure of any AI model lies in its performance across diverse benchmarks. DeepSeek-V3.1 has demonstrated impressive results that challenge the dominance of proprietary models while offering unprecedented cost efficiency and open-source accessibility.

Coding and Software Engineering Excellence

DeepSeek-V3.1 has established itself as a formidable coding assistant, achieving a 71.6% score on the Aider polyglot programming benchmark, surpassing Claude Opus 4.1's 70.6% performance. This makes it the highest-scoring non-reasoning model on this comprehensive coding evaluation, demonstrating strong capabilities across multiple programming languages and frameworks.

In software engineering tasks, the model scored 66.0% on SWE-bench Verified in agent mode. This trails GPT-5's 74.9% and Claude Opus 4.1's 74.5%, but the gap matters less once DeepSeek's roughly 65x lower cost and open-source availability are factored in.

Additional coding benchmark highlights:

- LiveCodeBench: 56.4% in non-thinking mode, improving to 74.8% with thinking mode engaged

- Terminal and Agent Tasks: Substantial improvements in multi-step coding workflows

- Format Accuracy: 95.6% accuracy rate with 0% syntax and indentation errors in testing

Mathematical and Reasoning Capabilities

In reasoning, DeepSeek-V3.1 scores 74.9% on GPQA Diamond, a benchmark of graduate-level science questions, in non-thinking mode. The model shows particular strength in complex logic problems, successfully solving challenges that test spatial reasoning and multi-step mathematical thinking.

Key reasoning benchmarks:

- MMLU-Redux: 91.8% demonstrating broad knowledge retention

- MMLU-Pro: 83.7% on advanced multiple-choice reasoning tasks

- AIME Performance: Strong mathematical competition performance in thinking mode

General Knowledge and Context Handling

DeepSeek-V3.1 excels in knowledge-intensive tasks, with its 128,000-token context window enabling processing of extensive documents, codebases, and multi-turn conversations. Its coherence across these extended contexts comes largely from its expanded long-context training (840 billion tokens of continued pretraining on top of V3).
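Making use of a 128,000-token window still means checking that input fits. The sketch below estimates token counts with a rough 4-characters-per-token rule of thumb (an assumption; real counts depend on DeepSeek's tokenizer) and splits oversized text into window-sized pieces while reserving room for instructions and the reply. The function names and the 8,000-token reserve are hypothetical.

```python
# Assumption: ~4 characters per token is a rough rule of thumb for English text.
# Use the model's actual tokenizer when accuracy matters.
CONTEXT_TOKENS = 128_000
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def chunk_for_context(text: str, reserve_tokens: int = 8_000) -> list[str]:
    """Split text so each piece fits the window, leaving room for prompt and reply."""
    budget_chars = (CONTEXT_TOKENS - reserve_tokens) * CHARS_PER_TOKEN
    return [text[i:i + budget_chars] for i in range(0, len(text), budget_chars)]


document = "word " * 200_000
print(estimate_tokens(document), "estimated tokens")
print(len(chunk_for_context(document)), "chunk(s)")
```

Fixed-size character slicing can cut mid-sentence; splitting on paragraph or file boundaries is usually better for codebases and papers.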

A Game-Changer for Devs: Leveraging Anthropic API Compatibility

One of DeepSeek-V3.1's most significant advantages for developers is its native Anthropic API compatibility: a strategic feature that eliminates the friction of switching between AI providers and enables seamless integration with existing workflows using familiar API patterns.

Seamless Integration Tutorial

The Anthropic API compatibility allows developers to use existing tools and codebases with minimal modifications:

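```bash
# Configure environment variables for Anthropic compatibility
export ANTHROPIC_BASE_URL=https://api.deepseek.com/anthropic
export ANTHROPIC_API_KEY=${DEEPSEEK_API_KEY}
```

```python
import anthropic

# The client reads ANTHROPIC_BASE_URL and ANTHROPIC_API_KEY from the environment
client = anthropic.Anthropic()

message = client.messages.create(
    model="deepseek-chat",  # non-thinking mode; "deepseek-reasoner" selects thinking mode
    max_tokens=4000,
    system="You are a helpful coding assistant.",
    messages=[{
        "role": "user",
        "content": [{"type": "text", "text": "Generate a Python function for binary search"}],
    }],
)

# message.content is a list of content blocks; print only the text blocks
for block in message.content:
    if block.type == "text":
        print(block.text)
```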
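When the same client is pointed at deepseek-reasoner, the response may include thinking blocks ahead of the final text (an assumption based on the Anthropic Messages format; check DeepSeek's compatibility notes). A small helper can separate the two. The example uses stand-in objects instead of a live API call so it runs offline; split_content is a hypothetical helper name.

```python
from types import SimpleNamespace


def split_content(blocks) -> tuple[str, str]:
    """Separate thinking text from answer text in a Messages API content list."""
    thinking, answer = [], []
    for block in blocks:
        if block.type == "thinking":
            thinking.append(block.thinking)
        elif block.type == "text":
            answer.append(block.text)
    return "\n".join(thinking), "\n".join(answer)


# Offline demo with stand-in objects shaped like SDK content blocks.
fake_content = [
    SimpleNamespace(type="thinking", thinking="Binary search halves the range each step."),
    SimpleNamespace(type="text", text="def binary_search(xs, target): ..."),
]
reasoning, answer = split_content(fake_content)
print(answer)
```

With a live response, the same call would be split_content(message.content).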

Enterprise-Grade Features

Supported capabilities include:

- Strict Function Calling (Beta): Schema-compliant outputs for production environments

- 128K Context Window: Extended memory for complex, multi-turn conversations

- Dual API Endpoints: deepseek-chat for fast responses, deepseek-reasoner for thinking mode

- Tool Integration: Enhanced post-training for seamless external API and service integration
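In the Anthropic message format, tool integration works by passing JSON-schema tool definitions in tools, receiving tool_use blocks, and replying with tool_result blocks. The sketch below assumes DeepSeek's Anthropic-compatible endpoint accepts this format; get_weather and tool_results_message are hypothetical names, and the demo uses plain dicts instead of SDK objects so it runs offline.

```python
import json

# Tool schema in the Anthropic Messages format (name, description, input_schema).
# The get_weather tool is hypothetical.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "input_schema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def get_weather(city: str) -> dict:
    # Hypothetical stub; a real implementation would call a weather service.
    return {"city": city, "temp_c": 21}


HANDLERS = {"get_weather": get_weather}


def tool_results_message(content_blocks: list[dict]) -> dict:
    """Run each tool_use block and wrap the outputs as tool_result blocks."""
    results = []
    for block in content_blocks:
        if block.get("type") != "tool_use":
            continue
        output = HANDLERS[block["name"]](**block["input"])
        results.append({
            "type": "tool_result",
            "tool_use_id": block["id"],
            "content": json.dumps(output),
        })
    return {"role": "user", "content": results}


# Offline demo: a model turn shaped like a tool_use response.
reply = [{"type": "tool_use", "id": "toolu_01", "name": "get_weather", "input": {"city": "Paris"}}]
print(tool_results_message(reply))
```

In a live call you would pass tools=[WEATHER_TOOL] to client.messages.create(...), then append the model's turn and this tool_result message to the conversation before the next request.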

Transparent Cost Structure

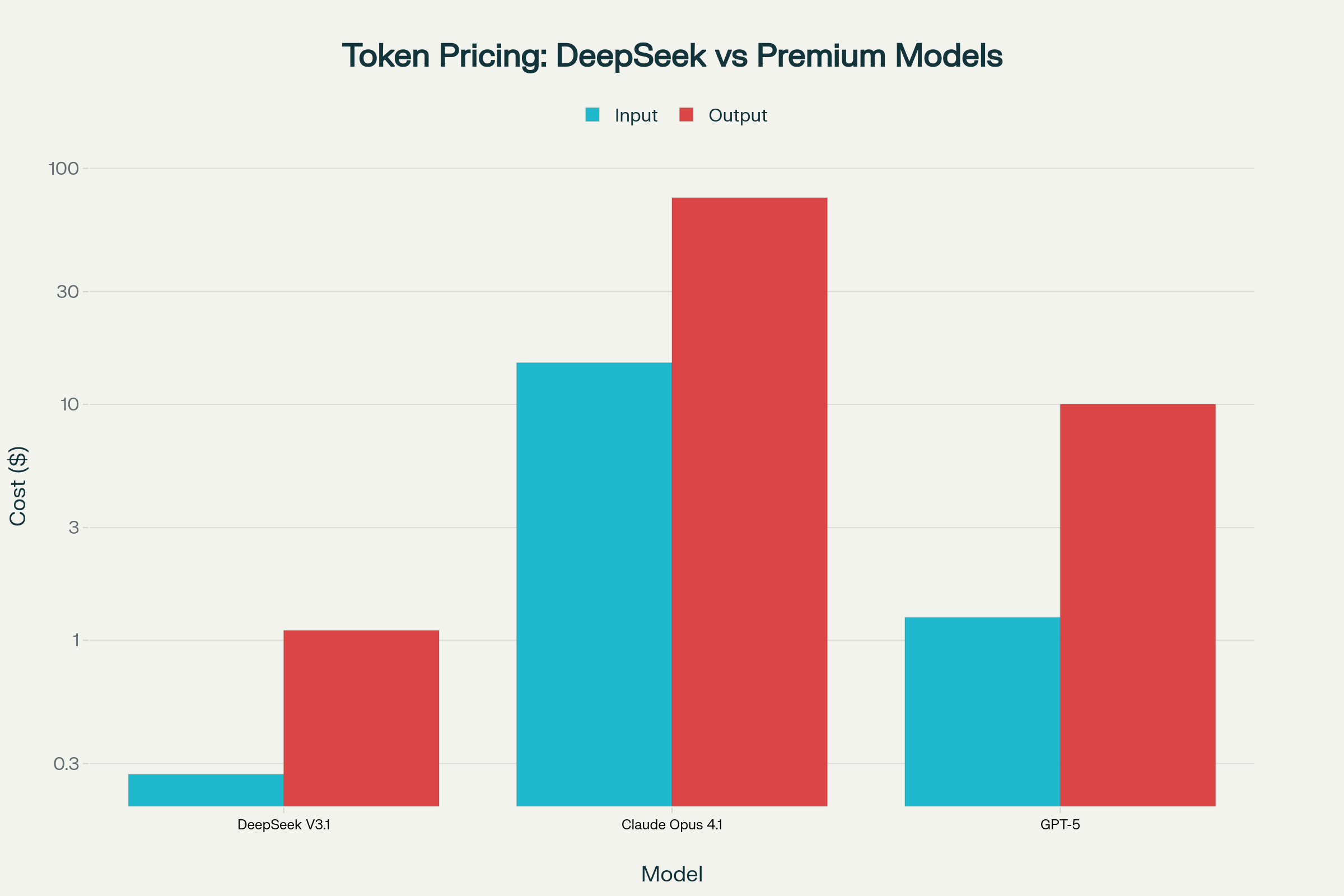

The API compatibility extends beyond convenience to deliver substantial and verifiable cost savings. Based on official pricing documentation, DeepSeek-V3.1's current rates (effective until September 5, 2025) are dramatically lower than premium alternatives.

Verified token pricing comparison:

- DeepSeek V3.1: $0.27 input / $1.10 output per million tokens

- Claude Opus 4.1: $15.00 input / $75.00 output per million tokens

- GPT-5: $1.25 input / $10.00 output per million tokens

At equal input and output volumes, this makes DeepSeek roughly 65x cheaper than Claude Opus 4.1 and 8x cheaper than GPT-5. For a workflow processing 1 million input tokens and generating 1 million output tokens, costs would be approximately $1.37 for DeepSeek, $11.25 for GPT-5, and $90 for Claude Opus 4.1; the exact ratio shifts with the input/output mix. For more deployment options, check out our comprehensive guide to local LLM deployment tools.
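The arithmetic behind these ratios is easy to reproduce. The sketch below uses the per-million-token prices listed above; cost_usd and cost_ratio are hypothetical helper names, and DeepSeek's figures will change once its September 5, 2025 pricing takes effect.

```python
# USD per million tokens (input, output), as quoted above.
PRICES = {
    "DeepSeek V3.1": (0.27, 1.10),
    "Claude Opus 4.1": (15.00, 75.00),
    "GPT-5": (1.25, 10.00),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


def cost_ratio(model: str, baseline: str, input_tokens: int, output_tokens: int) -> float:
    """How many times more `model` costs than `baseline` for the same workload."""
    return cost_usd(model, input_tokens, output_tokens) / cost_usd(baseline, input_tokens, output_tokens)


for name in PRICES:
    print(f"{name}: ${cost_usd(name, 1_000_000, 1_000_000):.2f}")
print(f"Opus vs DeepSeek, 1:1 mix: {cost_ratio('Claude Opus 4.1', 'DeepSeek V3.1', 1_000_000, 1_000_000):.1f}x")
print(f"Opus vs DeepSeek, 10:1 mix: {cost_ratio('Claude Opus 4.1', 'DeepSeek V3.1', 10_000_000, 1_000_000):.1f}x")
```

Because output tokens cost more than input tokens for all three models, the ratio depends on your mix: with ten input tokens per output token, the same prices give about 59x versus Claude Opus 4.1 and about 5.9x versus GPT-5.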

The Future of Hybrid Reasoning and Agentic AI

DeepSeek-V3.1 represents more than an incremental improvement; it signals a fundamental shift toward adaptive, context-aware AI systems that balance computational efficiency with task complexity. The hybrid reasoning approach addresses one of the most pressing challenges in AI deployment: optimizing the trade-off between capability and operational cost.

Industry Impact and Open-Source Momentum

The release has generated significant organic adoption across the AI community, with the model quickly becoming the 4th most trending model on Hugging Face despite its quiet, no-publicity launch. This grassroots adoption suggests strong developer interest in open-source alternatives that combine frontier-level performance with practical deployment considerations and transparent licensing.

Key industry implications:

- Democratization of advanced AI: High-performance capabilities at accessible costs for startups and researchers

- Open-source competitiveness: Challenging the performance-cost paradigm of closed-source providers

- Agentic workflow enablement: Enhanced tool use and multi-step reasoning capabilities making AI agents more practical

Technical Evolution and Research Directions

The success of DeepSeek-V3.1's unified hybrid architecture may influence future model development, potentially making separate reasoning and general-purpose models obsolete in favor of adaptive systems. Research on Large Hybrid-Reasoning Models (LHRMs) suggests this could be the beginning of a broader trend toward context-aware AI systems.

Emerging research areas include:

- Dynamic reasoning allocation: Further optimization of when to engage deep thinking processes

- Multi-agent collaboration: Enhanced coordination between specialized AI agents in enterprise environments

- Cost-performance optimization: Balancing reasoning depth with computational efficiency

Practical Implementation Recommendations

For organizations evaluating DeepSeek-V3.1, the model excels in several key use cases where the combination of performance, cost-efficiency, and open-source flexibility provides maximum value:

Ideal applications:

- Software development workflows: Code generation, debugging, documentation, and multi-language programming tasks

- Research and analysis: Long-document processing, academic research, and multi-step analytical workflows

- Cost-sensitive deployments: High-volume applications where token costs significantly impact budget

- Agentic automation: Tool-calling workflows, API integration, and multi-step task orchestration

Implementation considerations:

- API rate management: Planning for high-volume usage, including the end of the current pricing on September 5, 2025

- Migration strategy: Leveraging Anthropic API compatibility for smooth transitions from existing providers

- Performance optimization: Utilizing hybrid modes appropriately for different task complexities
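For high-volume usage, clients should expect occasional rate-limit or overload responses. A common pattern is exponential backoff with jitter, sketched below. RateLimitError here is a hypothetical stand-in; with the Anthropic Python SDK you would catch anthropic.RateLimitError instead, and the SDK also retries some failures itself (configurable through the client's max_retries argument).

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for the error your client raises on HTTP 429."""


def with_backoff(call, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Retry `call` on RateLimitError using exponential backoff with full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))


# Usage with the client from the integration example (not executed here):
# reply = with_backoff(lambda: client.messages.create(...))
```

Random jitter keeps many workers from retrying in lockstep after a shared rate-limit event.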

Conclusion: The Democratization of Advanced AI

DeepSeek-V3.1 represents a pivotal moment in AI development, demonstrating that open-source models can achieve competitive performance while maintaining dramatic cost advantages and deployment flexibility. Its hybrid reasoning architecture, enhanced agentic capabilities, and seamless API compatibility create a compelling value proposition that challenges the traditional dominance of premium closed-source providers.

The model's 71.6% Aider polyglot score at roughly 65x lower cost than Claude Opus 4.1 illustrates a fundamental shift in the AI landscape toward accessible, high-performance alternatives. As organizations increasingly prioritize both capability and cost-effectiveness, DeepSeek-V3.1 offers a practical path toward deploying sophisticated AI systems without the traditional barriers of prohibitive licensing costs or vendor lock-in.

For developers ready to explore the future of agentic AI, DeepSeek-V3.1 provides an accessible entry point into advanced AI capabilities with transparent pricing and open-source flexibility. Whether you're building autonomous agents, processing complex documents, or optimizing development workflows, this hybrid reasoning model offers the performance and economic efficiency needed for next-generation AI applications.

The rise of models like DeepSeek-V3.1 signals that the future of AI will be defined not just by raw capability, but by the democratization of access to advanced intelligence, making sophisticated AI tools available to innovators worldwide, regardless of budget constraints or organizational size. This represents a fundamental shift toward an AI ecosystem where performance, transparency, and accessibility converge to enable broader innovation and development.

Frequently Asked Questions

What is DeepSeek V3.1?

DeepSeek V3.1 is a 685-billion parameter open-source AI model released in August 2025. It features hybrid reasoning capabilities, combining fast responses for simple tasks with deep thinking for complex problems. Built on a Mixture-of-Experts (MoE) architecture with 128K context window, it activates only 37 billion parameters per token while maintaining frontier-level performance. The model is released under MIT license, making it freely available for commercial use at dramatically lower costs than proprietary alternatives.

What is agentic AI and how does DeepSeek V3.1 implement it?

Agentic AI refers to autonomous artificial intelligence systems that can plan, reason, and act independently to achieve goals with minimal human oversight. DeepSeek V3.1 supports agentic workloads through its hybrid thinking architecture, which serves both a fast mode and an extended-reasoning mode from one model, selected per request according to task complexity. The model is strong at multi-step workflows, tool calling, and API integration, making it capable of handling complex agent tasks like code generation, debugging, and automated search operations more efficiently than traditional reactive AI systems.

How does DeepSeek V3.1 performance compare to GPT-5 and Claude Opus 4.1?

DeepSeek V3.1 achieves competitive performance while offering significant cost advantages. In coding benchmarks, it scores 71.6% on Aider polyglot (surpassing Claude's 70.6%) and 66.0% on SWE-bench Verified (compared to GPT-5's 74.9% and Claude's 74.5%). For reasoning tasks, it achieves 74.9% on GPQA Diamond and 91.8% on MMLU-Redux. While it trails premium models on some specialized benchmarks, DeepSeek V3.1 delivers this performance at roughly 65x lower cost than Claude Opus 4.1 and 8x lower cost than GPT-5 (for equal input and output token volumes), making it exceptionally cost-effective for most applications.

Is DeepSeek V3.1 compatible with Anthropic API?

Yes, DeepSeek V3.1 offers native Anthropic API compatibility, allowing developers to use existing tools and codebases with minimal modifications. You can configure it by setting ANTHROPIC_BASE_URL=https://api.deepseek.com/anthropic and using your DeepSeek API key as ANTHROPIC_API_KEY. The service supports strict function calling (beta), 128K context windows, and dual endpoints (deepseek-chat for fast responses, deepseek-reasoner for thinking mode), easing migration from Claude-based workflows; not every Anthropic API parameter is necessarily supported, so check DeepSeek's compatibility notes before switching.

How much does DeepSeek V3.1 cost compared to other AI models?

DeepSeek V3.1 pricing is dramatically lower than premium alternatives. Current rates (until September 5, 2025) are $0.27 per million input tokens and $1.10 per million output tokens, compared to $15.00/$75.00 for Claude Opus 4.1 and $1.25/$10.00 for GPT-5. For equal input and output volumes, this makes DeepSeek V3.1 approximately 65 times cheaper than Claude Opus 4.1 and 8 times cheaper than GPT-5, offering exceptional value for high-volume applications, development workflows, and cost-sensitive deployments.

What is hybrid thinking mode in DeepSeek V3.1?

Hybrid thinking mode means a single DeepSeek V3.1 model supports both fast, direct responses for simple queries and deep, deliberative thinking for complex problems. Unlike setups that need separate chat and reasoning models, V3.1 switches modes per request (via the chat template, the DeepThink toggle, or the deepseek-chat and deepseek-reasoner API models), so applications can match reasoning depth to the task, much as humans adjust their effort. This approach reportedly provides 20-50% efficiency gains while maintaining comparable performance to dedicated reasoning models, making it both faster and more cost-effective for diverse applications.

Is DeepSeek V3.1 truly open source and free to use?

Yes, DeepSeek V3.1 is released under the permissive MIT open-source license, making it freely available for commercial use, modification, and redistribution without licensing fees. The model weights are available on Hugging Face, and you can deploy it locally or use the official API service. While the API has usage-based pricing, the open-source nature means no subscription fees, vendor lock-in, or usage restrictions typical of proprietary models. This makes it an attractive alternative for organizations seeking advanced AI capabilities with full control over their deployment and costs.