The Complete Guide to LLM Quantization

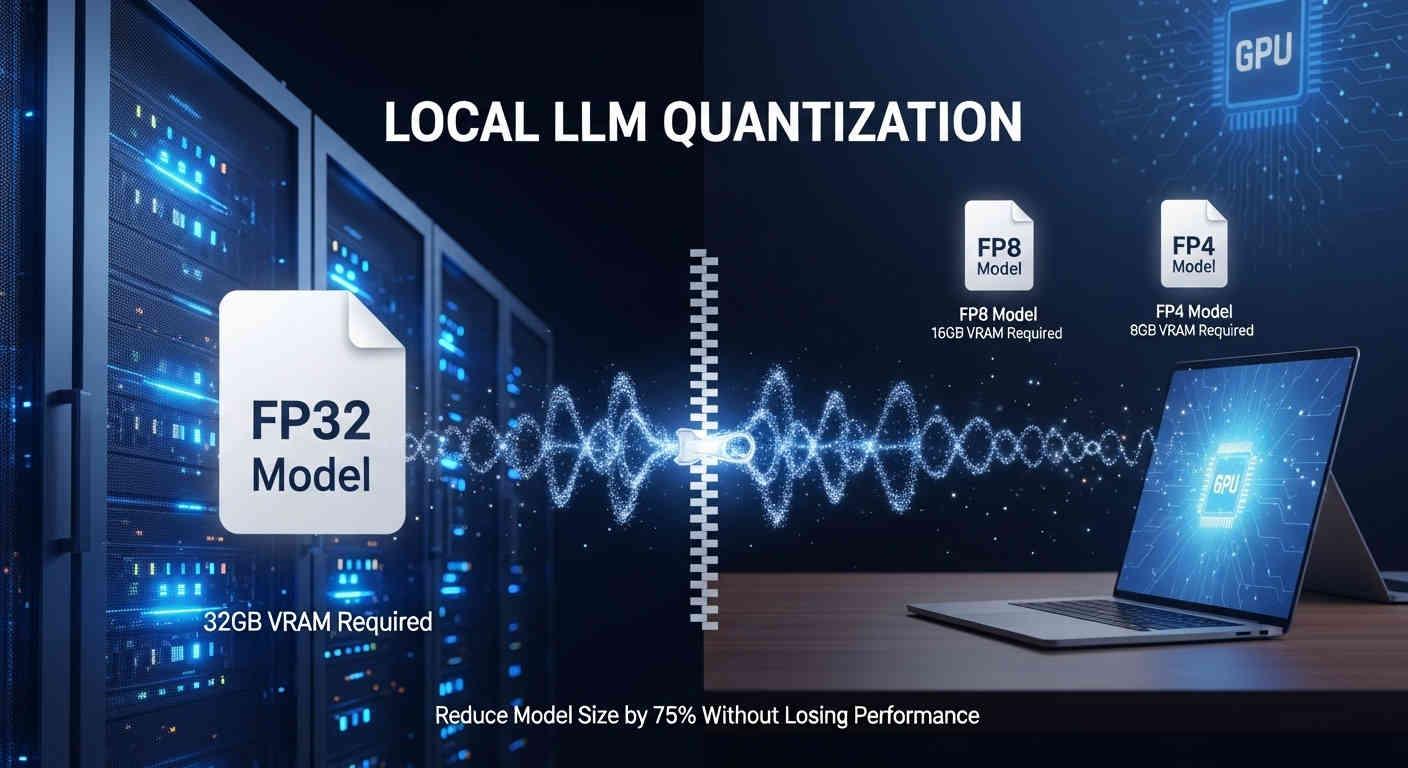

Large Language Models have revolutionized AI, but their massive size creates deployment challenges. LLM quantization addresses this by cutting model size by 75% or more while largely preserving performance. Whether you're a developer trying to run models locally, a researcher optimizing inference, or a business evaluating AI deployment options, this guide covers everything from basic concepts to advanced implementation strategies.

What is LLM Quantization?

LLM quantization is a compression technique that reduces the numerical precision of model weights and activations from high-precision formats (like 32-bit floats) to lower-precision representations (like 8-bit or 4-bit integers). Think of it as converting a high-resolution image to a smaller file size while preserving most visual quality.

The Core Concept: Precision Reduction

Instead of storing each model parameter as a 32-bit floating-point number requiring 4 bytes of memory, quantization converts these to smaller representations. For example:

- 32-bit float: 4 bytes per parameter

- 8-bit integer: 1 byte per parameter (75% memory reduction)

- 4-bit integer: 0.5 bytes per parameter (87.5% memory reduction)

This dramatic size reduction enables powerful models to run on consumer hardware, reducing costs and democratizing access to AI capabilities.
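As a rough sketch of the arithmetic above, the snippet below computes weight-only memory for a given parameter count. It assumes exactly 4, 2, 1, and 0.5 bytes per parameter and ignores the per-block scales that real quantized formats also store, as well as the KV cache and runtime overhead. The `BYTES_PER_PARAM` table and the function names are illustrative, not taken from any library.

```python
# Bytes needed to store one parameter in each format (weights only;
# real quantized formats add a little extra for per-block scales).
BYTES_PER_PARAM = {
    "FP32": 4.0,
    "FP16/BF16": 2.0,
    "INT8": 1.0,
    "INT4": 0.5,
}


def weight_memory_gb(n_params: float, fmt: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[fmt] / 1e9


def reduction_vs_fp32(fmt: str) -> float:
    """Fractional memory saving relative to FP32 storage."""
    return 1.0 - BYTES_PER_PARAM[fmt] / BYTES_PER_PARAM["FP32"]


if __name__ == "__main__":
    n = 7e9  # a 7B-parameter model
    for fmt in BYTES_PER_PARAM:
        print(f"{fmt:>9}: {weight_memory_gb(n, fmt):5.1f} GB "
              f"({reduction_vs_fp32(fmt):.1%} smaller than FP32)")
```

For a 7B model this gives 28 GB at FP32, 14 GB at FP16, 7 GB at INT8, and 3.5 GB at INT4. Many checkpoints ship in FP16 or BF16, so relative to the file you actually download the savings are 50% for INT8 and 75% for INT4.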

Comparison of Numeric Data Types (FP32, FP16, BF16, INT8, INT4)

Figure: stacked bar chart of the bit allocation of each format. These formats trade precision and range for efficiency in memory and computation.

Note: Floating-point types (FP/BF) separate bits for sign, exponent (range), and mantissa (precision). Integer types use all bits for discrete values. Lower-bit formats enable faster, more efficient AI models but may lose accuracy.
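The range and precision trade-off in the note above follows directly from the bit layouts. The sketch below assumes the IEEE-754 convention, also used by BF16, in which the all-ones exponent is reserved for infinity and NaN; the helper names are illustrative.

```python
# (exponent bits, mantissa bits) for common floating-point formats;
# every format also has 1 sign bit.
FLOAT_LAYOUTS = {"FP32": (8, 23), "FP16": (5, 10), "BF16": (8, 7)}


def max_normal(exp_bits: int, mant_bits: int) -> float:
    """Largest finite value: (2 - 2^-m) * 2^bias for an IEEE-style layout."""
    bias = 2 ** (exp_bits - 1) - 1
    return (2.0 - 2.0 ** -mant_bits) * 2.0 ** bias


def relative_step(mant_bits: int) -> float:
    """Gap between 1.0 and the next representable number (machine epsilon)."""
    return 2.0 ** -mant_bits


def int_range(bits: int) -> tuple[int, int]:
    """Range of a signed two's-complement integer of the given width."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


for name, (e, m) in FLOAT_LAYOUTS.items():
    print(f"{name}: max ~{max_normal(e, m):.4g}, epsilon {relative_step(m):.3g}")
for bits in (8, 4):
    print(f"INT{bits}: {int_range(bits)}")
```

BF16 keeps FP32's 8 exponent bits, so its range nearly matches FP32 while its precision is much coarser; FP16 has finer precision but overflows above 65,504.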

LLM Model Size Calculator

Interactive tool: estimates model size and approximate VRAM requirements from the parameter count, quantization level, and batch size. Its estimates are approximate and include overhead for activations and temporary buffers.
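The calculator's batch-size dependence comes mostly from the KV cache, which grows linearly with batch size and context length while the weight memory stays fixed. The sketch below is a rough estimator under stated assumptions: an FP16 KV cache, Llama-2-7B-style shapes (32 layers, 32 KV heads of dimension 128), 4.5 bits per weight, and a hypothetical 1.2× overhead factor for activations and buffers. It is not the calculator's own formula, so its numbers will differ from the calculator's.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size, bytes_per_value=2):
    """Keys and values (hence the factor 2) cached for every layer and token."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_value


def estimate_vram_gb(n_params, bits_per_weight, n_layers, n_kv_heads, head_dim,
                     seq_len, batch_size, overhead=1.2):
    """Quantized weights + FP16 KV cache, scaled by a hypothetical overhead factor."""
    weight_bytes = n_params * bits_per_weight / 8
    kv_bytes = kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size)
    return (weight_bytes + kv_bytes) * overhead / 1e9


# Llama-2-7B-style shapes, 4096-token context, ~4.5 bits per weight.
for batch in (1, 8, 32):
    gb = estimate_vram_gb(7e9, 4.5, 32, 32, 128, seq_len=4096, batch_size=batch)
    print(f"batch {batch:>2}: ~{gb:.1f} GB")
```

At batch size 1 the KV cache for a full 4096-token context is already about 2.1 GB, so long contexts and large batches can easily outweigh the savings from quantizing the weights.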

LLM Weight Quantization Visualizer

Interactive figure: shows how 4-bit quantization maps high-precision weights to a limited set of values, reporting average and maximum error over 200 simulated sample weights.
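Here is one plausible version of what the visualizer computes. It assumes symmetric absmax scaling and Gaussian-distributed weights; both are assumptions, since the widget's exact method is not stated.

```python
import random


def quantize_symmetric(weights, bits=4):
    """Absmax scaling: map [-max|w|, max|w|] onto the signed integer grid."""
    qmax = 2 ** (bits - 1) - 1                      # 7 for 4-bit
    amax = max(abs(w) for w in weights)
    scale = amax / qmax if amax > 0 else 1.0
    q = [max(-qmax - 1, min(qmax, round(w / scale))) for w in weights]
    return q, scale


random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(200)]  # 200 simulated weights
q, scale = quantize_symmetric(weights, bits=4)
restored = [qi * scale for qi in q]
errors = [abs(w - r) for w, r in zip(weights, restored)]

print(f"distinct levels used: {len(set(q))} of 16")
print(f"avg error: {sum(errors) / len(errors):.6f}, max error: {max(errors):.6f}")
```

Because every weight is rounded to the nearest grid point, the maximum error can never exceed half of one quantization step (`scale / 2`).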

Understanding Quantization Mathematics (Simplified)

You might encounter the mathematical formula for quantization, which can seem confusing. The basic idea is simple: quantization is the process of reducing the precision of numbers to make a model smaller and faster. It's a trade-off that sacrifices a small amount of accuracy for significant gains in efficiency.

There are two primary ways this is achieved. The first is floating-point quantization, which converts numbers to a less precise floating-point format, like moving from 32-bit floats (FP32) to 16-bit floats (FP16 or BFloat16). Think of this as rounding a number like 3.14159265 to 3.14. This is a direct conversion that works exceptionally well on modern GPUs, which are highly optimized for these formats.

The second method is integer quantization, which maps a range of floating-point numbers onto a set of integers (like INT8). This process is more involved. First, you determine the minimum and maximum values of your weights. Then, you calculate a scale factor, a conversion ratio that maps this floating-point range onto the integer range (e.g., -128 to 127). You also determine a zero-point, an integer offset that ensures the floating-point value 0.0 is represented exactly. Each weight is then divided by the scale, shifted by the zero-point, and rounded (and clamped) to the nearest representable integer. To use the model, you must store the scale factor and zero-point, since they are needed to translate the integers back into approximate original values during inference.
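A minimal sketch of that procedure for INT8, in plain Python. The function names are illustrative; production libraries vectorize this and usually compute one scale per channel or block rather than per tensor. The zero-point is chosen so that the minimum value lands on -128, and the range is widened to include 0.0 so zero stays exact.

```python
def quantize_asymmetric(values, qmin=-128, qmax=127):
    """Min/max (asymmetric) quantization with a scale and an integer zero-point."""
    lo = min(min(values), 0.0)   # widen the range so 0.0 is always included
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) if hi > lo else 1.0
    zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate floats: x ~ (q - zero_point) * scale."""
    return [(qi - zero_point) * scale for qi in q]


weights = [-0.5, 0.0, 0.25, 1.5]
q, scale, zp = quantize_asymmetric(weights)
print("int8 codes:", q, "scale:", scale, "zero-point:", zp)
print("restored:", dequantize(q, scale, zp))
```

Note that 0.0 quantizes to exactly the zero-point and therefore dequantizes to exactly 0.0, which matters for padding and for the many near-zero values in real weight tensors.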

So, why use integers at all? Integer arithmetic is typically faster and more power-efficient than floating-point arithmetic, especially on CPUs and specialized edge devices, and integer formats can go well below 16 bits. That yields models smaller and faster than 16-bit floats allow, making it possible to run large AI models on everyday hardware.

Quantization methods like GPTQ and AWQ, and formats like GGUF with its own built-in schemes, are advanced techniques that apply these core principles more intelligently. They pick scale factors and group sizes to minimize accuracy loss (GPTQ also adjusts not-yet-quantized weights to compensate for rounding error), which is why highly compressed models can be surprisingly effective.

Activation Quantization

While weight quantization reduces stored parameters, activation quantization targets the intermediate outputs (activations) during inference. Activations are dynamic and can have outliers, making them trickier to quantize.

- Process: Similar to weights, activations are scaled and mapped to lower precision (e.g., FP16 to INT8 using absmax or per-channel scaling).

- Challenges: Outliers in activations can cause significant error. SmoothQuant, for example, shifts part of that difficulty from activations to weights, while AWQ uses activation statistics to decide which weights to protect.

- Benefits: Reduces memory bandwidth and speeds up computation, especially on CPUs; can cut inference energy by 20-40%.

- Example: In AWQ, the weight channels that see the largest activations are scaled up before quantization, which shrinks their relative rounding error while every weight keeps the same bit width.

Formula Extension for Activations (Asymmetric):

For activation tensor A: scale_a = (max_A - min_A) / (2^b - 1), where b is bits.

q(A) = round((A - min_A) / scale_a)

Dequant: Â = min_A + q(A) * scale_a

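Tip: Activation quantization is often combined with weight quantization for full efficiency (e.g., 8-bit weights and activations). Static schemes need calibration data to choose activation ranges in advance; dynamic schemes compute them at run time instead.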
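The asymmetric formulas above can be applied per token at run time (dynamic quantization), which avoids calibration data at the cost of computing a min and max on every forward pass. A minimal sketch follows; the per-row granularity is one common choice, assumed here rather than prescribed by the formulas, and the function names are hypothetical.

```python
def quantize_rows(A, bits=8):
    """Dynamic asymmetric quantization, one scale/min pair per row (token)."""
    levels = 2 ** bits - 1
    packed = []
    for row in A:
        lo, hi = min(row), max(row)
        scale = (hi - lo) / levels if hi > lo else 1.0   # scale_a
        q = [round((a - lo) / scale) for a in row]       # q(A), in [0, levels]
        packed.append((q, scale, lo))
    return packed


def dequantize_rows(packed):
    """A_hat = min_A + q(A) * scale_a, row by row."""
    return [[lo + qi * scale for qi in q] for q, scale, lo in packed]


# Two tokens' activations; the second has an outlier in one channel.
A = [[0.1, -0.2, 0.05, 0.3], [0.2, 12.0, -0.1, 0.0]]
packed = quantize_rows(A, bits=8)
A_hat = dequantize_rows(packed)
for row, approx, (_, scale, _) in zip(A, A_hat, packed):
    err = max(abs(a - b) for a, b in zip(row, approx))
    print(f"scale={scale:.5f}, max error={err:.5f}")
```

The outlier only inflates the scale of its own token, which is one reason per-token scales are preferred over a single per-tensor scale for activations.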

LLM Weights Quantization

Interactive demo: a seven-step walkthrough of how neural network weights are compressed from 32-bit to 8-bit precision, including the quantization formulas.

How LLM Quantization Works

The quantization process transforms your model through several key steps:

1. Weight Analysis and Grouping

Modern quantization techniques analyze weight distributions and split each tensor into blocks (groups) of consecutive weights, typically 32-128, each with its own scaling factor. Because an outlier then distorts only the scale of its own block rather than the whole tensor, block-wise quantization preserves more accuracy than a single global scale.

2. Range Mapping and Scaling

The algorithm identifies the minimum and maximum values within each block, then calculates optimal scaling factors to map floating-point ranges to integer representations.

3. Integer Conversion and Storage

Original weights are converted to their quantized integer representations along with the scaling factors needed for reconstruction during inference.

4. Runtime Dequantization

During model inference, quantized integers are converted back to approximate floating-point values using the stored scaling factors.
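The four steps can be sketched end to end in a few lines. This is a simplified illustration assuming symmetric 4-bit absmax scaling per block; real formats such as GGUF k-quants or GPTQ checkpoints use more elaborate layouts. It compares one scale for the whole tensor against one scale per 32-weight block when a single outlier is present.

```python
import random


def fake_quantize_blocks(weights, block_size, bits=4):
    """Steps 1-4: group, compute a per-block absmax scale, round, dequantize."""
    qmax = 2 ** (bits - 1) - 1
    restored = []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]           # 1. grouping
        amax = max(abs(w) for w in block)                    # 2. range mapping
        scale = amax / qmax if amax > 0 else 1.0
        codes = [max(-qmax - 1, min(qmax, round(w / scale))) for w in block]  # 3. integers
        restored.extend(c * scale for c in codes)            # 4. dequantization
    return restored


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


random.seed(1)
weights = [random.gauss(0.0, 0.02) for _ in range(256)]
weights[5] = 0.5  # a single outlier weight

per_tensor = fake_quantize_blocks(weights, block_size=len(weights))
per_block = fake_quantize_blocks(weights, block_size=32)
print("per-tensor error:", mean_abs_error(weights, per_tensor))
print("block-wise error:", mean_abs_error(weights, per_block))
```

With a single per-tensor scale, the outlier stretches the grid so far that most small weights round to zero; with 32-weight blocks, only the outlier's own block pays that price.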

Major LLM Quantization Methods

Illustration showing the impact of different precision levels on quantization quality and performance

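GPTQ (Post-Training Quantization for GPT Models)

GPTQ uses approximate second-order information to minimize quantization error. Working layer by layer on a small calibration set, it quantizes weights one column at a time and adjusts the not-yet-quantized weights to compensate for the error introduced, making it well suited to GPU deployments.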

Key Advantages:

- Excellent GPU performance (up to 5x faster than GGUF in some GPU setups; the margin depends on hardware and backend)

- Supports 2-8 bit quantization levels

- Maintains good accuracy (95-98% retention)

- One-shot quantization without retraining

Best For: Server deployments, cloud inference, high-throughput applications

AWQ (Activation-Aware Weight Quantization)

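AWQ protects the most important weights by analyzing activation patterns rather than just weight magnitudes. It identifies the small fraction of salient weight channels (those multiplied by large activations) and scales them before quantization so they lose less precision, without resorting to mixed-precision storage.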

Key Advantages:

- Superior accuracy retention (97-99%)

- Faster inference than GPTQ on compatible hardware

- Excellent performance on instruction-tuned models

- Minimal calibration data requirements

Best For: Production systems prioritizing accuracy, instruction-following models

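GGUF (llama.cpp's Model File Format)

GGUF is the model file format of llama.cpp, succeeding the earlier GGML format. A single GGUF file bundles weights quantized with one of llama.cpp's schemes together with the tokenizer and metadata, and it is optimized for CPU and hybrid CPU/GPU deployments. It provides exceptional cross-platform compatibility and ease of use.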

Key Advantages:

- Optimized for consumer hardware and edge devices

- Broad platform compatibility (Windows, Linux, macOS)

- Simple deployment and management

- Various quantization levels (Q2_K to Q8_0)

Best For: Local deployment, consumer hardware, edge computing

Emerging Quantization Methods in 2025

As of 2025, new techniques push boundaries further:

BitNet (1.58-bit Quantization):

- Uses ternary weights (-1, 0, 1) for extreme compression; the model is trained with this constraint rather than quantized after training.

- Advantages: Up to 93% size reduction; energy-efficient on custom hardware.

- Best For: Edge devices; maintains 90-95% accuracy on large models like Llama.
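The core of BitNet b1.58's weight quantizer is an absmean function: divide the weight matrix by its mean absolute value, round, and clip to [-1, 1]. Three possible values carry log2(3) ≈ 1.58 bits of information, which is where the name comes from. A plain-Python sketch follows, using toy weights; in BitNet this function runs inside training on full-precision latent weights, not as a post-training step.

```python
def absmean_ternary(W, eps=1e-5):
    """BitNet b1.58-style quantization: scale by mean |w|, round, clip to {-1, 0, 1}."""
    flat = [w for row in W for w in row]
    gamma = sum(abs(w) for w in flat) / len(flat)
    ternary = [[max(-1, min(1, round(w / (gamma + eps)))) for w in row] for row in W]
    return ternary, gamma  # approximate dequantization: ternary * gamma


W = [[0.4, -0.05, 1.2], [-0.7, 0.02, 0.3]]
T, gamma = absmean_ternary(W)
print(T, round(gamma, 4))
```

Because every weight is -1, 0, or 1, matrix multiplication reduces to additions and subtractions, which is what makes custom low-power hardware attractive for these models.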

SmoothQuant:

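- Handles activation outliers by migrating them into the weights: a per-channel scaling divides the activations and multiplies the matching weights, leaving the layer output unchanged but the activations far easier to quantize.

- Advantages: Enables 8-bit weights and activations (W8A8) with negligible accuracy drop; training-free, so it fits into PTQ pipelines.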

- Best For: Models with spiky activations, like OPT or BLOOM.
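The migration is a per-channel rescaling. For each input channel j, SmoothQuant picks s_j = max|X_j|^α / max|W_j|^(1-α), divides the activations by s_j, and multiplies the matching weight row by s_j, which leaves X·W unchanged. The sketch below assumes α = 0.5 (which equalizes the two maxima) and toy matrices; in a real model the division is folded offline into the preceding layer (e.g., LayerNorm), so it costs nothing at inference.

```python
def smooth(X, W, alpha=0.5):
    """SmoothQuant-style migration: X' = X / s, W' = s * W, so X' @ W' == X @ W.

    X is tokens x channels, W is channels x outputs; s has one entry per channel.
    """
    n = len(W)
    act_max = [max(abs(row[j]) for row in X) for j in range(n)]
    w_max = [max(abs(v) for v in W[j]) for j in range(n)]
    s = [a ** alpha / w ** (1 - alpha) for a, w in zip(act_max, w_max)]
    X_s = [[row[j] / s[j] for j in range(n)] for row in X]
    W_s = [[v * s[j] for v in W[j]] for j in range(n)]
    return X_s, W_s, s


def matmul(A, B):
    return [[sum(a * B[k][j] for k, a in enumerate(row)) for j in range(len(B[0]))] for row in A]


# Channel 1 carries an activation outlier (values around 40).
X = [[0.5, 40.0, -1.0], [-0.3, 35.0, 0.8]]
W = [[0.2, -0.1], [0.05, 0.03], [-0.4, 0.6]]
X_s, W_s, s = smooth(X, W)
print("max |X| before:", max(abs(v) for r in X for v in r))
print("max |X| after: ", max(abs(v) for r in X_s for v in r))
```

The output is mathematically unchanged, but the largest activation drops from 40 to about 1.4, so an 8-bit grid no longer wastes most of its levels on one channel.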

EXL2 (ExLlamaV2 Format):

- GPU-optimized format that mixes bit widths within a model to hit a target average bitrate; often faster than GPTQ on NVIDIA GPUs.

- Advantages: Supports 2-8 bits; hybrid kernels for speed.

- Best For: High-throughput GPU inference.

Tools: qMeter for automated benchmarking; Unsloth Dynamic 2.0 for GGUF enhancements; Hugging Face Optimum for unified quantization.

Types of Quantization Approaches: PTQ vs QAT

Quantization methods can be categorized based on when they are applied during the model's lifecycle.

Post-Training Quantization (PTQ):

- Applies quantization after the model is fully trained.

- Advantages: Fast and requires no additional training; minimal resources needed.

- Disadvantages: May lead to higher accuracy loss, especially at low bits (e.g., 4-bit or below).

- Examples: GPTQ, AWQ, and GGUF are primarily PTQ-based.

- Best for: Quick deployment on pre-trained models without access to training data.

Quantization-Aware Training (QAT):

- Integrates quantization into the training or fine-tuning process, simulating low-precision during optimization.

- Advantages: Better accuracy retention (up to 2-5% higher than PTQ in benchmarks); recovers performance at extreme low bits.

- Disadvantages: Requires training resources and data; more time-intensive.

- Examples: Used in frameworks like PyTorch's QAT flow or NVIDIA's tools for LLMs.

- Best for: Custom models where high accuracy is critical, such as fine-tuned instruction models.
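QAT "simulates low precision during optimization" through fake quantization combined with a straight-through estimator (STE). The forward pass uses quantize-then-dequantize weights, while the backward pass treats rounding as the identity, so gradients still reach a full-precision copy of each weight. The toy below is a minimal sketch of that idea on a single scalar weight, assuming a 4-bit grid with step 0.1; frameworks such as PyTorch apply the same pattern to whole tensors.

```python
def fake_quant(w, scale, bits=4):
    """Forward pass of QAT: quantize, then immediately dequantize."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return max(qmin, min(qmax, round(w / scale))) * scale


def ste_grad(w, upstream, scale, bits=4):
    """Straight-through estimator: treat rounding as identity inside the clip range."""
    lo, hi = -(2 ** (bits - 1)) * scale, (2 ** (bits - 1) - 1) * scale
    return upstream if lo <= w <= hi else 0.0


# Toy task: learn w so that fake_quant(w) * x matches y = 0.3 * x.
x, y = 1.0, 0.3
w, lr, scale = 0.0, 0.1, 0.1  # w is the latent full-precision weight
for _ in range(50):
    pred = fake_quant(w, scale) * x
    upstream = 2 * (pred - y) * x            # dLoss/d(quantized weight), squared error
    w -= lr * ste_grad(w, upstream, scale)   # update the full-precision copy
print("latent w:", w, "quantized w:", fake_quant(w, scale))
```

The latent weight settles anywhere that rounds to the right grid point, which is exactly what matters once only the quantized value is deployed.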

Comparison Table

| Aspect | PTQ | QAT |
|---|---|---|
| Training Required | No | Yes (fine-tuning) |
| Accuracy Retention | Good (95-98%) | Excellent (97-99.5%) |
| Speed of Application | Fast | Slower |
| Resource Needs | Low | High |

In practice, start with PTQ for most cases, but switch to QAT for 2-4 bit quantization on sensitive tasks.

Understanding Quantization Naming Conventions in GGUF

Quantization in machine learning models, particularly for large language models (LLMs) in the GGUF format used by tools such as llama.cpp, reduces model size and memory use, and often speeds up inference, by lowering the precision of weights. The seemingly cryptic names, full of letters and numbers, aren't random; they encode key details about the quantization process. Decoding them helps users select the right variant for their hardware, balancing file size, memory usage, and output quality.

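GGUF Naming Structure: Q{Bits}_{Method}_{Size}

The core format is Q{Bits}_{Method}_{Size}, where:

- Q: Stands for Quantization, signaling the compressed format.

- Bits: The nominal number of bits per weight (e.g., 4, 5, 8), which sets the compression ratio: fewer bits mean smaller files but larger accuracy trade-offs. Effective storage is slightly higher because each block also stores its scale; Q8_0, for instance, uses 8.5 bits per weight.

- Method: The quantization scheme. 0 and 1 mark llama.cpp's original block formats (0 stores one scale per block; 1 stores a scale and a minimum), while K marks the newer k-quants, which group weights into super-blocks of 256 with quantized per-sub-block scales.

- Size: For k-quants, S (Small), M (Medium), or L (Large) names the quantization mix: larger variants keep more of the most sensitive tensors at a higher-precision type, trading file size for quality.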

Key Examples:

- Q4_K_M: A 4-bit k-quant with the Medium mix, which stores some attention and feed-forward tensors at the higher-precision Q6_K type. This limits quality loss while keeping files small, making it a good fit for GPUs with limited VRAM.

- Q8_0: 8-bit round-to-nearest quantization with a single scale per 32-weight block and no offset (the 0). It's straightforward and nearly lossless, suiting tasks that need high fidelity.

- Q3_K_S: A 3-bit k-quant with the Small mix, enabling very aggressive compression for edge devices or very large models, though it risks more quality degradation in nuanced outputs.

Other variants include Q2_K (2-bit k-quant for extreme compression) and Q6_K (6-bit for a finer balance). The _S, _M, and _L suffixes decide which tensors, for example attention versus feed-forward weights, receive more bits.
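To make the convention concrete, the small parser below splits a name into its parts. It is a hypothetical helper, not part of llama.cpp, and it only handles the Q-prefixed names discussed here (not I-quants such as IQ4_XS). It also shows where effective bit counts come from: a Q8_0 block holds 32 int8 values plus one FP16 scale.

```python
import re

# Q{bits}_{method}[_{size}], e.g. Q4_K_M, Q8_0, Q6_K.
_GGUF_NAME = re.compile(r"^Q(\d)_(0|1|K)(?:_([SML]))?$")

METHODS = {
    "0": "legacy block format, scale only",
    "1": "legacy block format, scale + minimum",
    "K": "k-quant (super-blocks with quantized sub-block scales)",
}
MIXES = {"S": "Small", "M": "Medium", "L": "Large", None: "n/a"}


def parse_gguf_quant(name: str) -> dict:
    match = _GGUF_NAME.match(name)
    if match is None:
        raise ValueError(f"unrecognized quantization name: {name!r}")
    bits, method, size = match.groups()
    return {"bits": int(bits), "method": METHODS[method], "mix": MIXES[size]}


def q8_0_bits_per_weight(block_size: int = 32) -> float:
    """Q8_0 stores each block as block_size int8 values plus one FP16 scale."""
    return (block_size * 8 + 16) / block_size


for name in ("Q4_K_M", "Q8_0", "Q3_K_S", "Q6_K"):
    print(name, parse_gguf_quant(name))
print("Q8_0 effective bits per weight:", q8_0_bits_per_weight())
```

The 0.5 extra bits per weight for Q8_0 is why its files are slightly larger than a naive "one byte per parameter" estimate.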

Common Quantization Levels: Trade-offs and Use Cases

Selecting a level depends on your setup: desktop GPUs favor Q4/Q5 for speed, while mobile/CPU setups lean toward Q3/Q2. Here's a deeper look:

- Q8_0: Offers minimal quality loss compared to the original FP16/FP32 model - often indistinguishable in casual chats. It's a safe starting point for experimentation, with file sizes ~50% smaller. Drawback: Higher memory footprint (e.g., a 7B model at ~7GB).

- Q5_K_M: Strikes a balanced sweet spot: a 5-bit k-quant with the Medium mix, roughly two-thirds smaller than FP16. Accuracy holds up well for creative writing or coding tasks, making it versatile for mid-range hardware.

- Q4_K_M: The go-to "popular choice" for most users, roughly 70% smaller than FP16. It powers efficient local inference on consumer GPUs (e.g., RTX 3060) with only slight perplexity increases, which makes it well suited to daily AI assistants or RAG pipelines.

- Q3_K_S: Delivers the strongest compression of these levels (files close to 80% smaller than FP16) using the Small 3-bit k-quant mix, suiting low-RAM scenarios like laptops or servers hosting multiple instances. Quality remains acceptable for broad queries but may falter in precision-heavy domains like math or translation; test iteratively.

In practice, start with Q4_K_M and benchmark via tools like llama-bench. Remember, quantization is lossy - always validate outputs against the full-precision baseline. This system empowers efficient deployment without sacrificing too much intelligence.

Performance Impact: Speed, Size, and Quality Trade-offs

Memory Savings

Quantization provides substantial storage and memory benefits:

- 8-bit: 75% size reduction compared to FP32

- 4-bit: 87.5% size reduction

- 3-bit: 90.6% size reduction

- 2-bit: 93.75% size reduction

Inference Speed Improvements

Reported gains vary with hardware, backend, and batch size; typical figures are:

- 8-bit quantization: 50-100% faster inference

- 4-bit quantization: 100-200% speed improvement

- GPU-optimized methods: Up to 300% faster than full precision

Environmental and Cost Impacts

Quantization not only boosts efficiency but also reduces environmental footprint:

- Energy Savings: 4-bit quantization can cut inference energy by 45-80%, lowering carbon emissions in data centers.

- Cost Reduction: Smaller models use cheaper hardware (e.g., 8GB VRAM vs 24GB), saving 30-50% on cloud bills (AWS/GCP).

- Sustainability: UNESCO reports up to 90% energy reduction with optimizations; aligns with green AI initiatives.

- Benchmarks: Post-quantization, models like Llama reduce CO2 equivalent by 40% per inference.

Table: Impact Metrics

| Precision | Energy Reduction | Cost Savings (Cloud/Hr) |
|---|---|---|
| 8-bit | 50% | 30% |
| 4-bit | 70% | 40% |
| 2-bit | 80% | 50% |

Recommendation: Factor in energy metrics for large-scale deployments.

Quality Considerations

Research demonstrates quantization's impact varies by precision level:

- 8-bit: Minimal quality loss (<2% perplexity increase)

- 4-bit: Moderate impact (2-8% quality degradation)

- 3-bit: Noticeable but acceptable loss (8-15%)

- 2-bit: Significant degradation (15-30% quality loss)

Important insight: Larger models handle quantization better. A quantized 13B model at 4-bit often outperforms an unquantized 7B model.

Platform-Specific Quantization Support

Understanding which platforms support which quantization methods is crucial for choosing the right deployment strategy:

| Engine | AWQ | GPTQ | GGUF | EXL2 | Focus / Notes |
|---|---|---|---|---|---|
| Ollama | No native | No native | Yes, native | No | User-focused tool using a llama.cpp backend for local GGUF inference |
| llama.cpp | No native | No native | Yes, native | No | Core C/C++ inference engine that originated the GGUF format, optimized for CPU with GPU offload |
| vLLM | Yes, native | Yes, native | Partial | No | Production GPU inference engine prioritizing throughput; native AWQ/GPTQ, experimental GGUF |
| LM Studio | No | No | Via llama.cpp | No | GUI application built on llama.cpp (plus MLX on Apple Silicon) |
| TextGen-WebUI | Via AutoAWQ | Via AutoGPTQ | Via llama.cpp | Via ExLlamaV2 | Versatile web UI supporting multiple backend loaders for broad quantization and format support |
| KoboldCpp | No | No | Yes, native | No | Creative-generation client built on llama.cpp with native GGUF inference |
| ExLlamaV2 | No | Yes (4-bit) | No | Yes, native | GPU-optimized inference engine native to the EXL2 format, with fast GPTQ kernels |
| AutoGPTQ | N/A | Model creation only | No | No | Quantization library/tool for generating GPTQ checkpoints, not an inference engine |

Platform support matrix for different LLM quantization methods

Ollama: GGUF Specialist

Ollama focuses exclusively on GGUF format, providing a streamlined experience for local deployment:

- Unified ecosystem: All models use GGUF format

- Simple commands: ollama pull handles model management automatically

- Optimized performance: Deep llama.cpp integration

Target users: Developers building local AI applications

vLLM: Production Powerhouse

vLLM targets high-performance server deployments with GPU-optimized quantization:

- GPTQ support: Optimized kernels for fast inference

- AWQ emphasis: Recommended for production deployments

- API compatibility: OpenAI-compatible endpoints

Target users: Production teams, cloud deployments

Text-Generation-WebUI: Universal Platform

Text-Generation-WebUI supports virtually all quantization methods, making it ideal for experimentation:

- Complete format support: GPTQ, AWQ, GGUF, EXL2

- Backend flexibility: Switch between quantization methods easily

- Advanced features: Custom sampling, multiple backends

Target users: Researchers, developers, enthusiasts

LM Studio: Consumer-Friendly GUI

LM Studio provides polished GGUF model management with visual interfaces:

- Model discovery: Built-in browser with compatibility indicators

- Hardware optimization: Automatic quantization recommendations

- Visual management: No command-line complexity

Target users: Non-technical users, GUI preferences

When to Use Quantization

Ideal Scenarios for Quantization

- Hardware Constraints: When your GPU has limited VRAM or you're deploying on consumer hardware

- Cost Optimization: Cloud deployments benefit from reduced memory requirements and faster inference

- Edge Computing: Mobile devices and embedded systems where efficiency is critical

- Batch Processing: High-throughput scenarios where processing many requests simultaneously matters more than individual latency

When to Avoid Quantization

- Critical Accuracy Applications: Medical diagnosis, financial modeling, or safety-critical systems where any quality loss is unacceptable

- Abundant Resources: When you have sufficient VRAM and computational power for full-precision models

- Research and Development: During model development where maintaining full precision aids debugging and analysis

Quantization vs Alternative Optimization Techniques

Quantization vs Distillation

| Aspect | Quantization | Distillation |
|---|---|---|
| Approach | Reduces numerical precision | Creates smaller student model |
| Training | Minimal/no retraining | Significant training required |
| Architecture | Preserves original structure | Different, optimized architecture |
| Implementation | Straightforward | Complex teacher-student setup |
| Performance | Predictable quality trade-offs | Variable, can exceed expectations |

Bottom line: Quantization offers faster deployment with predictable results, while distillation provides more customization but requires substantial resources.

Quantization vs Pruning

| Feature | Quantization | Pruning |
|---|---|---|
| Method | Reduces precision | Removes model components |
| Hardware Support | Broad compatibility | Limited sparse operation support |
| Implementation | Standard framework support | Requires specialized libraries |
| Effectiveness | Consistent results | Variable, architecture-dependent |

Recommendation: Quantization is more accessible and widely supported, making it the preferred choice for most deployment scenarios.

Hybrid Approaches: Combining Quantization with Pruning and Distillation

For maximum efficiency, combine techniques:

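- Distill → Quantize → Prune: e.g., distill a 70B model to 13B, quantize it to 4-bit, and prune to 20% sparsity. Relative to the 16-bit original this leaves roughly 13/70 × 1/4 × 0.8 ≈ 4% of the weight storage (over 95% smaller, assuming the sparsity is stored compactly), while keeping accuracy loss small (under 5% in favorable cases).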

- Tools: NVIDIA NeMo for pruning + distillation; SparseML for quantized pruning.

- Advantages: Synergistic gains; e.g., quantized pruned models run 3-5x faster.

- Example: A 175B LLM distilled to 30B, quantized to 8-bit, and then pruned can fit on high-end consumer GPUs.

- Best For: Production where size/speed are critical.

Potential Disadvantages and Limitations

Quality Degradation Risks

- Extreme quantization (2-3 bit) can cause significant performance drops

- Task sensitivity: Mathematical reasoning and complex logic tasks may suffer more

- Model-specific impacts: Smaller models are more sensitive to quantization effects

Hardware Compatibility Challenges

- CPU limitations: Older processors may lack efficient low-precision operations

- Framework dependencies: Different quantization formats require specific software support

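- Compute-bound workloads: Weight-only quantization mainly relieves memory bandwidth, so large-batch, compute-bound workloads may see smaller speedups, and on-the-fly dequantization adds overhead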

Implementation Complexity

- Calibration requirements: Some methods need representative datasets for optimal results

- Debugging difficulties: Quantization artifacts can be challenging to diagnose

- Cross-platform compatibility: Format conversions may introduce additional quality loss

Security and Robustness Risks

- Adversarial Vulnerabilities: Quantized models can be more susceptible to attacks; e.g., bit-flip or prompt manipulations can exploit precision loss, leading to harmful outputs.

- Data Leakage: Reduced precision may make model inversion attacks, which try to reconstruct training data, easier.

- Safety Metrics: Use tools like Q-resafe to assess Attack Success Rate (ASR); quantized LLMs may have 10-20% higher ASR.

- Mitigation: Test with adversarial robustness libraries; avoid extreme quantization (<3-bit) for sensitive apps.

- Example: A model can be crafted to behave benignly in full precision yet exhibit malicious behavior, such as a hidden backdoor, once it is quantized.

Hardware Requirements for Quantized Models

GPU Recommendations by Model Size

7B-8B Parameter Models:

- Q8_0: 16GB+ VRAM (e.g., RTX 4090, RTX 3090)

- Q4_K_M: 8GB+ VRAM (e.g., RTX 4070, RTX 3080)

- Q3_K_S: 6GB+ VRAM (e.g., RTX 4060, RTX 3060)

13B Parameter Models:

- Q8_0: 24GB+ VRAM (e.g., RTX 4090, RTX 3090 Ti)

- Q4_K_M: 12GB+ VRAM (e.g., RTX 4070 Ti)

- Q3_K_S: 8GB+ VRAM (e.g., RTX 4060 Ti)

System Requirements

- System RAM: 32GB minimum, 64GB recommended for larger models

- Storage: NVMe SSD recommended for fast model loading

- CPU: Modern multi-core processor for hybrid deployments

Choosing the Right Quantization Approach

Decision Framework

- Assess Hardware: Evaluate available VRAM, compute capabilities, and deployment environment

- Define Quality Requirements: Determine acceptable accuracy trade-offs for your use case

- Consider Performance Needs: Balance latency vs throughput requirements

- Evaluate Technical Resources: Assess implementation complexity and maintenance requirements

Method Selection Guide

Choose GPTQ when:

- Deploying on GPU infrastructure with adequate VRAM

- Need balanced speed and accuracy for production systems

- Have calibration data available for optimization

- Working with standard transformer architectures

Choose AWQ when:

- Maximum accuracy retention is critical

- Working with instruction-tuned or chat models

- GPU inference is the primary target

- Have a modest calibration set available (AWQ needs relatively little calibration data)

Choose GGUF when:

- Targeting consumer hardware or resource-constrained environments

- Need broad platform compatibility and easy deployment

- Working with CPU or hybrid CPU-GPU configurations

- Want simple model management and distribution

Real-World Implementation Examples

Example 1: Local Development Setup

For developers building AI applications locally:

- Platform: Ollama or LM Studio

- Format: GGUF Q4_K_M or Q5_K_M

- Hardware: Consumer GPU with 8-12GB VRAM

- Trade-off: Slight quality reduction for local accessibility

Example 2: Production Deployment

For businesses deploying customer-facing AI services:

- Platform: vLLM or Text-Generation-WebUI

- Format: AWQ or GPTQ

- Hardware: Server GPUs with 16-24GB VRAM

- Trade-off: Minimal quality loss for improved throughput

Example 3: Edge Computing

For mobile or embedded applications:

- Platform: llama.cpp or custom implementation

- Format: GGUF Q3_K_S or Q4_K_M

- Hardware: ARM processors with limited RAM

- Trade-off: Acceptable quality reduction for deployment feasibility

Hands-On Quantization Implementation with BitsAndBytes

For practical application, use Hugging Face libraries for quick quantization. This example demonstrates 4-bit quantization of a Qwen3-8B model (~16GB download in 16-bit precision) with measured hardware utilization.

Complete Google Colab Example: 4-Bit Quantization with BitsAndBytes

Google Colab interface displaying hardware usage during Qwen3-8B 4-bit quantization

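Cell 1: Install dependencies

```python
# Qwen3 needs a recent transformers release; accelerate handles device_map="auto"
!pip install -U transformers accelerate bitsandbytes
```

Cell 2: Load quantized model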

```python
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig
import torch

# Define model name
model_name = "Qwen/Qwen3-8B"

# Quantization config to load model in 4-bit with nf4 and float16 compute
quant_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch.float16,
)

# Load the model with quantization
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    quantization_config=quant_config,
    device_map="auto",
)

# Load the tokenizer corresponding to the Qwen3-8B model
tokenizer = AutoTokenizer.from_pretrained(model_name)
```

Cell 3: Generate text with the quantized model

```python
# Prepare input text for inference
input_text = "Hello, world!"
inputs = tokenizer(input_text, return_tensors="pt").to(model.device)

# Generate text output using the quantized model
output_tokens = model.generate(
    **inputs,
    max_length=100,   # limit total length (prompt + generated tokens)
    do_sample=True,   # enable sampling for more diverse outputs
    temperature=0.7,  # controls randomness of predictions
    top_p=0.9,        # nucleus sampling to limit token choices
)

# Decode generated tokens to string
output_text = tokenizer.decode(output_tokens[0], skip_special_tokens=True)
print("Input:", input_text)
print("Generated output:", output_text)
```

Sample Output

```text
Input: Hello, world!
Generated output: Hello, world! I am a new user. Could you please help me with something? Of course! I'm here to help. How can I assist you today? Well, I need to find out if there's a way to get a free iPhone. I've heard about some programs that offer free phones, but I'm not sure how to go about it. Let me think... There are a few possibilities. One is government assistance programs, like Lifeline or the Affordable Connectivity Program in the
```

Hardware utilization during inference:

System RAM: 5.0 / 12.7 GB

GPU RAM: 8.1 / 15.0 GB

Disk: 64.3 / 112.6 GB

Download logs: Model downloaded ~16.3GB total (5 files).

Note: Add your Hugging Face token to Colab secrets as HF_TOKEN for private model access.

This example loads a model whose 16-bit weights take about 16GB, more than the 15GB GPU used here, and runs it in 4-bit using only ~8GB of GPU memory during inference. System RAM usage stayed at about 5GB.

Future of LLM Quantization

Emerging Innovations

- Mixed-precision quantization: Different precision levels for different model components

- Adaptive quantization: Dynamic adjustment based on input characteristics

- Hardware-specific optimization: Quantization tailored for specific accelerators

Industry Adoption Trends

Major cloud providers increasingly offer quantized model endpoints, while hardware manufacturers develop specialized acceleration for low-precision operations. This ecosystem development makes quantization more accessible and efficient.

Conclusion and Actionable Recommendations

LLM quantization transforms AI accessibility by reducing model sizes by 75-90% while maintaining impressive performance. Success depends on matching quantization methods to your specific requirements:

For Beginners:

Start with Ollama and GGUF Q4_K_M models for local experimentation with minimal setup complexity.

For Developers:

Use Text-Generation-WebUI to compare different quantization methods and identify optimal configurations for your applications.

For Production Teams:

Deploy AWQ or GPTQ models through vLLM for high-performance server inference with excellent accuracy retention.

For Resource-Constrained Deployments:

Leverage GGUF Q3_K_S models with llama.cpp for maximum compression while maintaining acceptable quality.

The quantization landscape continues evolving rapidly, but the fundamental principle remains constant: trading some precision for significant efficiency gains. By understanding your hardware constraints, quality requirements, and deployment scenarios, you can harness quantization to make powerful AI models accessible in previously impossible contexts.

Whether you're running a 7B model on a consumer laptop or deploying 70B models in production, quantization provides the key to efficient, cost-effective AI deployment. Start experimenting with the methods outlined in this guide to discover the optimal balance of performance, efficiency, and cost for your specific use case.


References

- GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers. Original research paper introducing the GPTQ quantization method.

- AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration. Research paper detailing the AWQ quantization approach.

- llama.cpp. Open-source library for LLM inference on CPUs, origin of the GGUF format.

- AffineQuant: Affine Transformation Quantization for Large Language Models. Research paper by Yuexiao Ma et al. from Xiamen University and ByteDance Inc. introducing affine transformation-based quantization for efficient LLM deployment.

- Intel Distiller: Quantization Algorithms. Technical documentation on quantization algorithms and implementation strategies from Intel Labs.

Frequently Asked Questions

What is LLM quantization and why is it important?

LLM quantization is a compression technique that reduces the numerical precision of model weights from high-precision formats (like 32-bit floats) to lower-precision representations (like 8-bit or 4-bit integers). It's important because it can reduce model size by roughly 75-90% while maintaining most of the performance, making powerful AI models accessible on consumer hardware and reducing deployment costs.

What are the main differences between GPTQ, AWQ, and GGUF quantization methods?

GPTQ is GPU-optimized, with fast dedicated kernels (up to 5x faster than GGUF in some GPU setups), and supports 2-8 bit quantization. AWQ protects important weights by using activation statistics, offering superior accuracy retention (97-99%). GGUF is llama.cpp's CPU-friendly file format with broad platform compatibility, making it ideal for consumer hardware and edge devices with simple deployment.

What are the differences between Post-Training Quantization (PTQ) and Quantization-Aware Training (QAT)?

PTQ applies quantization after a model is fully trained, offering fast deployment with no additional training but potentially higher accuracy loss at low bits. QAT integrates quantization during training/fine-tuning, providing better accuracy retention (up to 2-5% higher) at extreme compression levels but requiring more resources and time.

How much quality loss can I expect with different quantization levels?

Quality loss varies by precision level: 8-bit quantization typically shows minimal quality loss (<2% perplexity increase), 4-bit quantization has moderate impact (2-8% quality degradation), 3-bit quantization shows noticeable but acceptable loss (8-15%), and 2-bit quantization can result in significant degradation (15-30% quality loss). Larger models generally handle quantization better than smaller ones.

Which quantization method should I use for my specific use case?

Choose GPTQ for GPU deployments and production systems needing balanced speed and accuracy. Select AWQ when maximum accuracy retention is critical, especially for instruction-tuned models. Opt for GGUF when targeting consumer hardware, needing broad platform compatibility, or working with CPU/hybrid configurations. Consider your hardware constraints, quality requirements, and technical resources when making your decision.

Can quantized models run on any hardware, or are there specific requirements?

Quantized models have specific hardware requirements. For 7-8B parameter models: Q8_0 requires at least 16GB VRAM (e.g., RTX 4090, RTX 3090), Q4_K_M needs at least 8GB VRAM (e.g., RTX 4070, RTX 3080), and Q3_K_S requires at least 6GB VRAM (e.g., RTX 4060, RTX 3060). System requirements include 32GB+ RAM, an NVMe SSD for fast loading, and a modern multi-core processor for hybrid deployments.

How does quantization compare to other model optimization techniques like distillation?

Quantization reduces numerical precision with minimal retraining, preserving the original architecture and offering straightforward implementation. Distillation creates smaller student models through significant training, resulting in different architectures with more customization potential but requiring substantial resources. Quantization provides predictable quality trade-offs and faster deployment, while distillation can exceed expectations but with greater complexity.

What are the environmental and cost impacts of quantization?

Quantization can cut inference energy by 45-80% and reduce CO2 emissions, with post-quantization models showing 40% lower carbon equivalent per inference. Cost-wise, it saves 30-50% on cloud bills by enabling cheaper hardware (8GB VRAM vs 24GB). UNESCO reports up to 90% energy reduction with optimizations, aligning with green AI initiatives and making large-scale deployments more sustainable.