VRAM Calculator for Local Open Source LLMs - Accurate Memory Requirements 2025

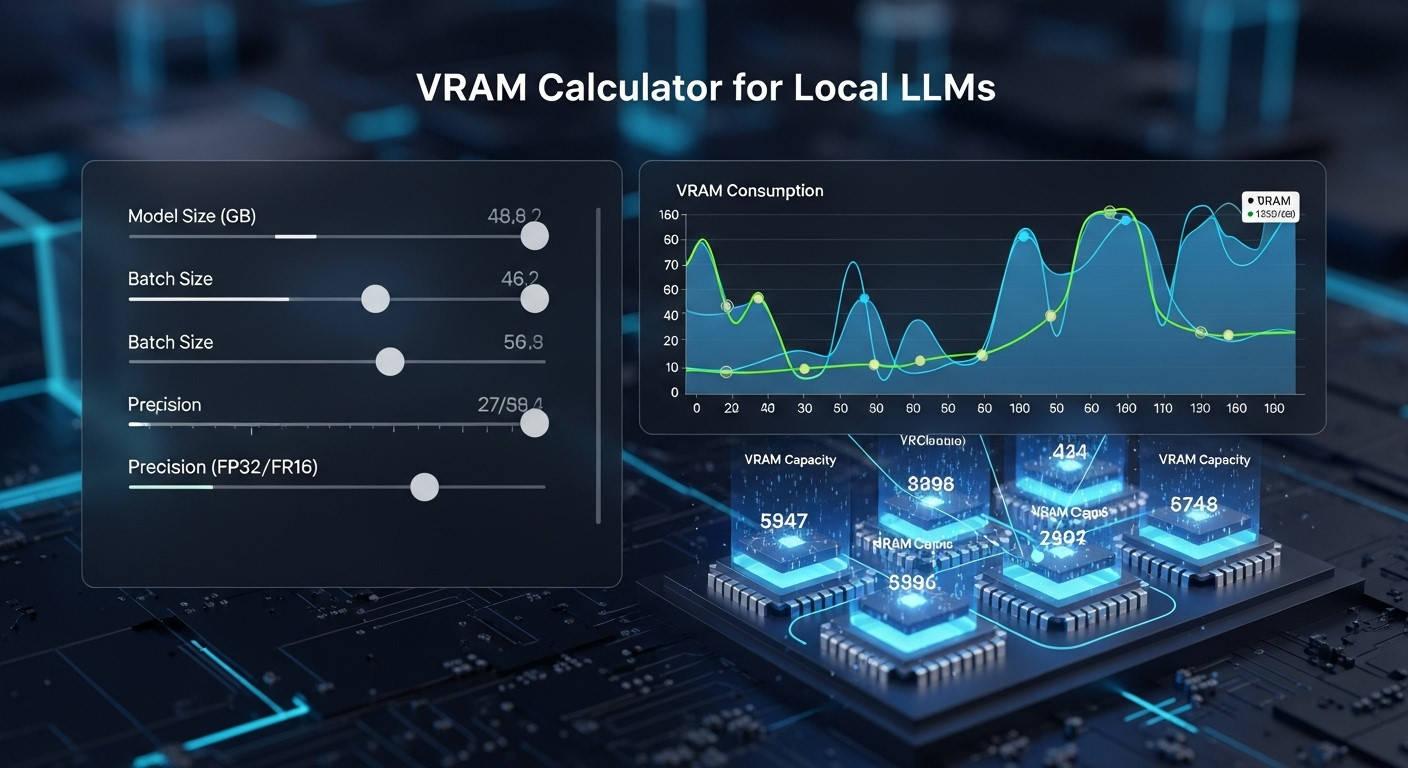

Understanding VRAM Requirements for Local LLMs

When running local LLMs, VRAM (Video Random Access Memory) is the most critical hardware constraint. Unlike traditional applications, large language models require substantial GPU memory to store model weights, intermediate calculations, and the KV cache that grows with conversation length.

Our VRAM calculator helps you estimate the memory requirements for popular open source models from leading AI labs and communities. Whether you're running the latest reasoning models or proven workhorses, a reliable VRAM estimate helps you avoid out-of-memory errors and slowdowns in local deployment:

- Latest 2025 Models: Llama 4 (Scout and Maverick, both MoE), DeepSeek-R1 (1.5B-671B including MoE), Qwen3 (0.6B-235B including MoE), Gemma 3 (27B), Phi-4 (14B), QwQ (32B)

- Llama Family: Llama 3.1 and 3.2 series (1B, 3B, 8B, 70B, 405B), Code Llama specialized variants

- Qwen Series: Qwen 2.5 (0.5B-72B), Qwen2 (0.5B-72B), Qwen3 (0.6B-235B), QwQ reasoning models

- Mistral & Mixtral: Mistral 7B, 22B and Mixtral MoE (8x7B, 8x22B) architectures

- Gemma Models: Gemma 2 (2B, 9B, 27B) and Gemma 3 series from Google

- Reasoning Models: DeepSeek-R1, QwQ-32B, and NVIDIA Nemotron models

- Specialized Models: Code-focused, instruction-tuned, and domain-specific variants

The calculator supports models of any size from 0.5B parameters upward, with estimates for both dense models and mixture-of-experts (MoE) architectures across different quantization formats.

GPU Recommendations by Model Size

Based on our 2025 GPU benchmarks, here are the top recommendations for different model sizes. Performance data comes from real-world testing of Qwen3 8B at Q4_K_M quantization, along with other popular models, in Ollama and LM Studio.

Small Models (1B-8B Parameters)

Perfect for personal use and development. These models require 4-8GB VRAM with Q4 quantization.

- Best Value: Intel Arc B580 (12GB) - $249, delivers 62 tokens/sec at just $4.02 per token/sec

- Recommended: RTX 4060 Ti (16GB) - $499, provides 89 tokens/sec with excellent context headroom

- Flagship: RTX 5090 (32GB) - $1999, achieves 213 tokens/sec for maximum performance
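The price-per-throughput figure quoted for the Arc B580 is simply the purchase price divided by tokens per second. As a small sketch, the same arithmetic can be applied to all three cards listed above, using only the prices and speeds quoted in this section (the dictionary and function names are illustrative):

```python
# Price (USD) and throughput (tokens/sec) exactly as quoted in the list above.
gpus = {
    "Intel Arc B580 (12GB)": (249, 62),
    "RTX 4060 Ti (16GB)": (499, 89),
    "RTX 5090 (32GB)": (1999, 213),
}


def dollars_per_token_per_sec(price_usd, tokens_per_sec):
    """Purchase price divided by generation speed; lower is better value."""
    return price_usd / tokens_per_sec


cost_efficiency = {
    name: round(dollars_per_token_per_sec(price, tps), 2)
    for name, (price, tps) in gpus.items()
}

# Print the cards from best to worst value.
for name, value in sorted(cost_efficiency.items(), key=lambda item: item[1]):
    print(f"{name}: ${value:.2f} per token/sec")
```

This metric only captures value at one model size. It says nothing about whether a larger model or a longer context fits in the card's VRAM.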

Medium Models (13B-32B Parameters)

Professional-grade models requiring 15-20GB VRAM. The RTX 5090 delivers 61 tokens/sec on 32B models.

- Budget: Used RTX 3090 (24GB) - $800-900, solid performance with 24GB capacity

- Current Gen: RTX 4090 (24GB) - $1600, reliable 128 tokens/sec on 8B models

- Latest: RTX 5090 (32GB) - $1999, handles larger contexts with GDDR7 bandwidth

Large Models (65B-70B+ Parameters)

Enterprise-grade models requiring 40-80GB VRAM for optimal performance.

- Professional: RTX A6000 (48GB) - $4000, reliable for 70B models

- Enterprise: H100 (80GB) - $25000+, delivers 144 tokens/sec with maximum capacity

- Next-Gen: B200 (192GB) - Enterprise pricing, 450 tokens/sec for cutting-edge performance

📊 For detailed benchmarks, price-performance analysis, and MoE model recommendations, read our complete Best GPUs for Local LLM Inference 2025 guide.

How Does Local LLM VRAM Calculation Work?

This calculator uses an empirical formula derived from real-world testing with the llama.cpp backend. VRAM usage consists of two distinct components:

1. Fixed Cost (Constant)

- Model Weights: The quantized parameters stored in GPU memory

- CUDA Overhead: ~0.55 GB for cuBLAS buffers and workspace

- Scratchpad Memory: ~0.08 × Parameters for temporary tensors and activations

2. Variable Cost (Linear with Context)

- KV Cache: Grows linearly with every token (input + output)

- Context Impact: In long conversations, the KV cache can grow larger than the model weights themselves

Key Insight: KV Cache Impact

The KV cache, not model size, often becomes the limiting factor for long contexts. Understanding this helps optimize your hardware choices and usage patterns.

Precise VRAM Formula for Local LLMs

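Our calculator uses the following empirically validated formula, with the result in GB:

$$
\text{VRAM} \approx \underbrace{P \cdot b_w + 0.55 + 0.08 \cdot P}_{\text{fixed cost}} + \underbrace{\frac{B \cdot N \cdot 2 \cdot L \cdot (d / g) \cdot b_{kv}}{10^{9}}}_{\text{KV cache}}
$$

Where: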

- P: Parameters in billions

- b_w: Bytes per weight (depends on quantization, e.g., ~0.57 for Q4_K_M)

- B: Batch size (number of concurrent requests)

- N: Context length in tokens

- L: Transformer layers

- d: Hidden dimension

- g: GQA grouping factor (n_head / n_kv_head, e.g., 32 ÷ 8 = 4)

- b_kv: Bytes per KV scalar (e.g., 2 for FP16)
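The symbols defined above map onto a small function. The following is a minimal sketch, not the calculator's actual source. It assumes the fixed terms listed earlier (weights, ~0.55 GB CUDA overhead, ~0.08 × P scratchpad) and the standard KV cache size of 2 × L × (d / g) × b_kv bytes per token. The function name and the Qwen3 8B architecture values in the usage lines are assumptions that do not come from this article.

```python
def estimate_vram_gb(params_b, bytes_per_weight, n_layers, hidden_dim,
                     gqa_factor, context_tokens, batch_size=1, kv_bytes=2.0):
    """Return an estimated VRAM breakdown in GB (decimal, 1 GB = 1e9 bytes)."""
    weights = params_b * bytes_per_weight   # P * b_w
    cuda_overhead = 0.55                    # cuBLAS buffers and workspace
    scratchpad = 0.08 * params_b            # temporary tensors and activations
    fixed = weights + cuda_overhead + scratchpad
    # KV cache: keys and values (factor 2) for every layer and every token.
    kv_cache = (batch_size * context_tokens * 2 * n_layers
                * (hidden_dim / gqa_factor) * kv_bytes) / 1e9
    return {"fixed": fixed, "kv_cache": kv_cache, "total": fixed + kv_cache}


# Assumed Qwen3 8B architecture (not stated in this article):
# 36 layers, hidden dimension 4096, 32 query heads / 8 KV heads (g = 4).
estimate = estimate_vram_gb(params_b=8.0, bytes_per_weight=0.57, n_layers=36,
                            hidden_dim=4096, gqa_factor=4, context_tokens=8192)
for part, gb in estimate.items():
    print(f"{part}: {gb:.2f} GB")
```

Returning the breakdown rather than a single number makes it easy to see when the KV cache, rather than the weights, is what pushes a configuration over budget.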

VRAM Calculation Example

Example: Qwen3 8B with Q4_K_M Quantization

- Model Weights: 8.0 × 0.57 ≈ 4.56 GB

- CUDA Overhead: 0.55 GB

- Scratchpad: 8.0 × 0.08 ≈ 0.64 GB

- Base Total: ~5.75 GB

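- KV Cache per Token: 2 × L × (d ÷ g) × b_kv = 2 × 36 × (4096 ÷ 4) × 2 = 147,456 bytes (FP16 cache, with Qwen3 8B's 36 layers, hidden dimension 4096, and GQA factor 4)

- At 2K context: +0.30 GB KV cache = ~6.05 GB total

- At 8K context: +1.21 GB KV cache = ~6.96 GB total

- At 32K context: +4.83 GB KV cache = ~10.58 GB total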

💡 Tip: With Q4_K_M quantization, Qwen3 8B fits comfortably on an 8GB GPU (RTX 4060) with reasonable context lengths, making it an excellent choice for local deployment.
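To make "reasonable context lengths" concrete, the formula can be inverted: subtract the fixed cost from the available VRAM and divide what remains by the KV cache cost per token. The sketch below does that under stated assumptions. The `headroom_gb` safety margin is a hypothetical parameter, and the Qwen3 8B architecture values (36 layers, hidden dimension 4096, g = 4) are assumed rather than taken from this article.

```python
def max_context_tokens(vram_gb, params_b, bytes_per_weight, n_layers,
                       hidden_dim, gqa_factor, batch_size=1, kv_bytes=2.0,
                       headroom_gb=0.5):
    """Largest context (in tokens) whose KV cache fits in the remaining VRAM."""
    # Fixed cost: weights + CUDA overhead + scratchpad, in GB.
    fixed_gb = params_b * bytes_per_weight + 0.55 + 0.08 * params_b
    # Bytes of KV cache added by each token of context.
    kv_bytes_per_token = (batch_size * 2 * n_layers
                          * (hidden_dim / gqa_factor) * kv_bytes)
    free_bytes = (vram_gb - headroom_gb - fixed_gb) * 1e9
    if free_bytes <= 0:
        return 0  # the model does not fit even with an empty context
    return int(free_bytes // kv_bytes_per_token)


# Qwen3 8B at Q4_K_M on an 8 GB card, keeping 0.5 GB spare (assumed margin).
tokens_8gb = max_context_tokens(vram_gb=8.0, params_b=8.0, bytes_per_weight=0.57,
                                n_layers=36, hidden_dim=4096, gqa_factor=4)
print(f"Estimated maximum context on 8 GB: {tokens_8gb} tokens")
```

The headroom matters in practice because the desktop, the browser, and other applications also hold VRAM on a consumer GPU.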

Quantization Impact on VRAM Usage

Quantization reduces model size by using fewer bits per parameter, dramatically impacting VRAM requirements. Understanding these trade-offs is crucial for optimizing your local LLM deployment:

Quantization Formats Comparison:

- Q4_K_M (4-bit): 0.57 bytes/weight - Best balance of size and quality, recommended for most users

- Q5_K_M (5-bit): 0.68 bytes/weight - Improved quality with minimal size increase

- Q6_K (6-bit): 0.78 bytes/weight - Near-original quality, good for quality-sensitive tasks

- Q8_0 (8-bit): 1.0 bytes/weight - Minimal quality loss, excellent for production use

- BF16 (bfloat16): 2.0 bytes/weight - Training format for many modern models, good stability

- FP16 (float16): 2.0 bytes/weight - Standard half-precision, common inference format

- FP32 (float32): 4.0 bytes/weight - Full precision, rarely needed for inference

💡 Quick Guide: Start with Q4_K_M for optimal VRAM efficiency. Upgrade to Q5_K_M or Q6_K if you notice quality issues. Use FP16/BF16 only when you have abundant VRAM (24GB+) and need maximum quality. Learn more about quantization methods.

For most applications, Q4_K_M provides the optimal balance between model size and output quality. Consider higher-precision formats only when you have abundant VRAM or require maximum quality.
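The bytes-per-weight figures above translate directly into weight memory. The following sketch lists which formats leave a given model within a VRAM budget. It uses the fixed-cost terms described earlier (~0.55 GB CUDA overhead and ~0.08 × P scratchpad) and deliberately ignores the KV cache, so it is an optimistic lower bound. The function and variable names are illustrative.

```python
# Bytes per weight for each format, as listed in the comparison above.
BYTES_PER_WEIGHT = {
    "Q4_K_M": 0.57, "Q5_K_M": 0.68, "Q6_K": 0.78, "Q8_0": 1.0,
    "BF16": 2.0, "FP16": 2.0, "FP32": 4.0,
}


def fixed_cost_gb(params_b, quant):
    """Weights + CUDA overhead + scratchpad in GB, with no KV cache."""
    return params_b * BYTES_PER_WEIGHT[quant] + 0.55 + 0.08 * params_b


def formats_that_fit(params_b, vram_gb):
    """Formats whose fixed cost fits in vram_gb, smallest first."""
    ordered = sorted(BYTES_PER_WEIGHT, key=BYTES_PER_WEIGHT.get)
    return [q for q in ordered if fixed_cost_gb(params_b, q) <= vram_gb]


fits_8b_on_8gb = formats_that_fit(params_b=8.0, vram_gb=8.0)
for quant in fits_8b_on_8gb:
    print(f"{quant}: {fixed_cost_gb(8.0, quant):.2f} GB before KV cache")
```

A format that only just fits here leaves little room for context, so the largest entry in the list is rarely the practical choice.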

Optimizing VRAM Usage for Local LLMs

Beyond choosing the right hardware, several optimization strategies can help you maximize your VRAM efficiency:

Context Length Management

The KV cache grows linearly with context length and can quickly exceed model weight memory. For long conversations:

- Monitor context usage and implement sliding window techniques

- Use context compression for maintaining conversation history

- Consider models with longer native context windows (some Qwen3 variants support up to 256K tokens)
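One simple reading of the sliding window idea above is to drop the oldest messages at the application level, so the prompt never exceeds a fixed token budget and the KV cache stays bounded. The sketch below assumes that interpretation. `approx_tokens` is a hypothetical stand-in for a real tokenizer (roughly four characters per token), and none of these names come from a specific inference engine.

```python
from collections import deque


def approx_tokens(text):
    """Hypothetical token counter: about 4 characters per token."""
    return max(1, len(text) // 4)


def trim_to_window(messages, max_tokens, count_tokens=approx_tokens):
    """Keep the most recent messages whose combined size fits max_tokens."""
    kept = deque()
    used = 0
    # Walk backwards from the newest message and stop at the first overflow.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.appendleft(message)  # preserve chronological order
        used += cost
    return list(kept)


history = ["a" * 40, "b" * 40, "c" * 40]        # about 10 tokens each
window = trim_to_window(history, max_tokens=25)  # room for two messages
print(f"Kept {len(window)} of {len(history)} messages")
```

In a real deployment the counter would be replaced by the model's own tokenizer, and a system prompt would typically be pinned rather than trimmed.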

Inference Engine Selection

Different inference engines have varying VRAM efficiency. Popular options include:

- llama.cpp: Excellent VRAM efficiency, supports all quantization formats

- vLLM: Optimized for throughput, PagedAttention reduces memory fragmentation

- Ollama: User-friendly with automatic memory management

- Text Generation WebUI: Feature-rich with extensive optimization options

For detailed comparisons of local LLM tools, check our comprehensive guide to Ollama alternatives.

Common VRAM Optimization Mistakes to Avoid

Based on our experience helping users optimize their local LLM deployments, here are the most common mistakes and how to avoid them:

1. Ignoring KV Cache Growth

Many users focus only on model size and forget that the KV cache grows with every token. For long conversations, the cache can consume more memory than the model itself.

❌ Wrong: "I have 8GB VRAM, so I can run any 8B model indefinitely."

✅ Correct: "I have 8GB VRAM, so I can run an 8B model with up to ~4K context length comfortably."

2. Overestimating MoE Model Efficiency

Mixture of Experts (MoE) models like Mixtral require loading ALL expert parameters into VRAM, not just the active ones.

❌ Wrong: "Mixtral 8x7B only uses 7B parameters at a time, so it needs the same VRAM as a 7B model."

✅ Correct: "Mixtral 8x7B loads all 47B parameters into VRAM, requiring similar memory to a dense 47B model."
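The gap between the two statements above is easy to quantify. This sketch compares the weight memory implied by the mistaken "7B" assumption with the memory for all 47B parameters, using the ~0.57 bytes per weight quoted for Q4_K_M. It covers weights only, and the variable names are illustrative.

```python
Q4_K_M_BYTES_PER_WEIGHT = 0.57  # figure quoted for Q4_K_M in this article


def weight_memory_gb(params_b, bytes_per_weight):
    """Weight memory in GB for a model with params_b billion parameters."""
    return params_b * bytes_per_weight


# Mistaken assumption: only a 7B slice needs to live in VRAM.
naive_gb = weight_memory_gb(7, Q4_K_M_BYTES_PER_WEIGHT)
# What is actually loaded: every expert, about 47B parameters in total.
actual_gb = weight_memory_gb(47, Q4_K_M_BYTES_PER_WEIGHT)

print(f"Assumed (7B):  {naive_gb:.2f} GB")
print(f"Actual (47B):  {actual_gb:.2f} GB")
print(f"Underestimate: {actual_gb / naive_gb:.1f}x")
```

Sparse activation reduces the compute per token, which helps speed, but it does not reduce the number of weights that must be resident.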

3. Choosing Wrong Quantization

Using unnecessarily high precision quantization wastes VRAM without meaningful quality improvements for most use cases.

❌ Wrong: "I'll use FP16 for maximum quality even though I have limited VRAM."

✅ Correct: "I'll use Q4_K_M for the best balance of quality and memory efficiency."

Future-Proofing Your Local LLM Setup

When planning your local LLM hardware investment, consider these emerging trends and technologies:

Emerging Model Architectures

- Mamba/State Space Models: Linear scaling with sequence length instead of quadratic

- Mixture of Depths: Dynamic computation allocation for efficiency

- Speculative Decoding: Using smaller models to accelerate larger ones

Hardware Trends

- Higher VRAM Capacities: RTX 5090 with 32GB, future cards with 48GB+

- Faster Memory: GDDR7 providing higher bandwidth for large models

- Specialized AI Hardware: NPUs and dedicated inference accelerators

Software Optimizations

- Better Quantization: Improvements to GGUF quantization formats and custom quantization schemes

- Memory Management: Advanced KV cache compression and paging

- Multi-GPU Support: Improved model parallelism for consumer hardware

💡 Pro Tip: Investment Strategy

When choosing hardware, prioritize VRAM capacity over raw compute power. A GPU with more VRAM will remain useful longer as models grow in size and context requirements increase. Consider the RTX 4060 Ti 16GB or RTX 5090 32GB for future-proofing.

Frequently Asked Questions

How accurate is this VRAM calculator?

Our calculator uses empirically-tested formulas derived from real-world measurements with llama.cpp. The accuracy is typically within 5% of actual VRAM usage, making it reliable for hardware planning.

Can I run larger models by using system RAM?

Yes, but performance will be severely impacted. GPU-to-RAM transfers are much slower than VRAM access. This approach is only viable for occasional use or when inference speed isn't critical.

Do MoE models like Mixtral require loading all parameters?

Yes, despite being 'mixture of experts,' current implementations load all expert parameters into VRAM. An 8x22B Mixtral model requires similar VRAM to a dense 141B model, not just the active expert subset.

What's the difference between this and online VRAM calculators?

Our calculator uses the latest empirical formula that accounts for GQA (Grouped Query Attention), specific model architectures, and real-world overhead measurements. Many online calculators use simplified estimates that can be significantly inaccurate.
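To illustrate why accounting for GQA matters, the sketch below computes the KV cache for the same context with and without key/value head sharing. It assumes the standard per-token size of 2 × L × (d / g) × b_kv bytes and a hypothetical 7B-class configuration (32 layers, hidden dimension 4096). Neither the configuration nor the function name comes from this article.

```python
def kv_cache_gb(context_tokens, n_layers, hidden_dim, gqa_factor=1,
                kv_bytes=2.0, batch_size=1):
    """KV cache size in GB (1 GB = 1e9 bytes) for the given context length."""
    # Factor 2 covers keys and values; GQA shrinks the cached width to d / g.
    per_token_bytes = 2 * n_layers * (hidden_dim / gqa_factor) * kv_bytes
    return batch_size * context_tokens * per_token_bytes / 1e9


# Hypothetical 7B-class model: 32 layers, hidden dimension 4096, FP16 cache.
without_gqa = kv_cache_gb(8192, n_layers=32, hidden_dim=4096, gqa_factor=1)
with_gqa = kv_cache_gb(8192, n_layers=32, hidden_dim=4096, gqa_factor=4)

print(f"8K context, no GQA (g = 1): {without_gqa:.2f} GB")
print(f"8K context, GQA (g = 4):    {with_gqa:.2f} GB")
```

A calculator that ignores the grouping factor overestimates the cache by a factor of g for GQA models, which can make a workable configuration look like it does not fit.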

Which quantization format should I choose?

Q4_K_M provides the best balance between model size and quality for most use cases. Use Q5_K_M or Q6_K if you have extra VRAM and want better quality. FP16 is only recommended if you have abundant VRAM and need maximum quality.

How does context length affect VRAM usage?

Context length has a linear impact on VRAM through the KV cache. Each 1,000 tokens typically consume ~0.11GB additional VRAM for a 7B model. Long conversations can quickly exceed model weight memory usage.


Note: The fixed-cost terms of the formula give the memory needed to load a model before accounting for context length (KV cache). For MoE models like Mixtral, all parameters must be loaded into memory. Actual usage may vary by inference engine and specific optimizations.