Best Local LLMs for 24GB VRAM: Performance Analysis 2026

With 24GB of VRAM, you're in the sweet spot. Q4 quantized models in the 27B-40B parameter range deliver serious reasoning power, multimodal capabilities, and coding skills that compete with commercial AI offerings, all running locally on your hardware. But here's the catch: a model dominating MMLU-Pro benchmarks might produce broken code in real projects. Another with a stellar Math Index could hit a "context cliff" at 48K tokens, grinding to a halt when you need it most. And benchmark scores won't tell you which model can autonomously build a working game from a single prompt. New to local LLMs? Start with our complete introduction to local LLMs to understand the basics.

This guide takes a different approach: we combined three independent validation methods to find what actually works on 24GB VRAM systems. First, we analyzed industry-standard benchmarks (Intelligence Index, GPQA, MMLU-Pro, LiveCodeBench) to establish baseline capabilities across reasoning, coding, and mathematics. Second, we profiled hardware performance on an NVIDIA L4 24GB VRAM system running Ollama. We measured real VRAM consumption, generation-speed degradation from 16K to 48K context, and the critical "context cliff," where a model spills over into system RAM and performance collapses. Third, we designed an agentic coding challenge using the Cline VS Code extension (running in Cursor): build a high-quality Flappy Bird clone with procedural graphics, giving each model up to 5 feedback turns to fix errors autonomously. These hands-on tests reveal which models can turn vague creative requirements into a functional vibe-coded project without much hand-holding.

The verdict? GLM-4.7-Flash (Reasoning) dominates with a 30.1 Intelligence Index and won the agentic coding challenge by building a procedural rendering engine with particle effects, the only model to truly deliver "high quality graphics." For long-context stability, Qwen3 30B holds 33-53 t/s from 16K all the way to 48K tokens with zero offloading, while GLM and Nemotron hit context cliffs. NVIDIA Nemotron 3 Nano 30B crushes mathematics with a 91.0 Math Index, but it uses more total memory, and Ollama automatically offloads part of it to the CPU. Everything else involves serious trade-offs. Here's how we tested them and why it matters.

TL;DR: Best Local LLMs for 24GB VRAM in 2026

Top Recommendations:

- GLM-4.7-Flash (Reasoning): Best overall with 30.1 Intelligence Index, 25.9 Coding Index, and winner of the Flappy Bird agentic challenge

- Qwen3 30B A3B: Best for long-context reliability. Stays 100% on the GPU at 48K tokens and holds 33-53 t/s across 16K-48K context

- NVIDIA Nemotron 3 Nano 30B A3B (Reasoning): Best for mathematics with exceptional 91.0 Math Index and 75.7 GPQA score

- Qwen3 VL 32B (Reasoning): Best multimodal model with native vision, 24.5 Intelligence Index, and highest MMLU-Pro (81.8)

- GPT-OSS 20B (High Context): Excellent alternative if you need to exceed 48k context and are willing to slightly compromise on intelligence. Stays comfortably within VRAM limits at much higher context lengths.

Quick Start: Download & Run

Ready to get started? Here are the Ollama commands to download and run our top recommendations:

| Model | Best For | Ollama Command |
|---|---|---|
| GLM-4.7-Flash | Coding & General Use | ollama run glm-4.7-flash |
| Qwen3 30B | Long Context Stability | ollama run qwen3:30b |
| Nemotron 3 Nano | Mathematics | ollama run nemotron-3-nano |

Note: These commands download the Q4_K_M quantized versions by default, which fit within 24GB of VRAM at moderate context lengths.
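If you would rather script against a model than use the interactive `ollama run` prompt, Ollama also serves a local REST API (by default on port 11434). The sketch below assumes a default local install. It sends one non-streaming request to `/api/generate` and sets the context window through the `num_ctx` option. The helper names are our own.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # default local Ollama endpoint


def build_generate_payload(model: str, prompt: str, num_ctx: int = 16384) -> dict:
    """Build a non-streaming /api/generate request with an explicit context size."""
    return {
        "model": model,                   # e.g. "qwen3:30b"
        "prompt": prompt,
        "stream": False,                  # one JSON object instead of a token stream
        "options": {"num_ctx": num_ctx},  # context window in tokens
    }


def generate(model: str, prompt: str, num_ctx: int = 16384) -> dict:
    """POST the request to a running Ollama server and return the parsed JSON reply."""
    data = json.dumps(build_generate_payload(model, prompt, num_ctx)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    reply = generate("qwen3:30b", "Explain mixture-of-experts models in one sentence.")
    print(reply["response"])
```

Setting `num_ctx` per request keeps the context size explicit, which matters on 24GB cards where a larger window can push a model into CPU offloading.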

Benchmark Performance Analysis

The table below compares the models across industry-standard benchmarks, using scores from Artificial Analysis.

AI Model Performance Comparison

| Model Name | Creator | Release Date | Artificial Analysis Intelligence Index | Artificial Analysis Coding Index | Artificial Analysis Math Index | MMLU-Pro (Reasoning & Knowledge) | GPQA Diamond (Scientific Reasoning) | Humanity's Last Exam (HLE) | LiveCodeBench (Coding) | SciCode (Scientific Coding) | Math 500 | AIME 2025 (Competition Math) | AIME 25 | IFBench | LCR | TerminalBench Hard | TAU2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GLM-4.7-Flash (Reasoning) | Z AI | 2026-01-19 | 30.1 | 25.9 | N/A | N/A | 58.1% | 7.1% | N/A | 33.7% | N/A | N/A | N/A | 60.8% | 35.0% | 22.0% | 98.8% |
| Qwen3 VL 32B (Reasoning) | Alibaba | 2025-10-21 | 24.5 | 14.5 | 84.7 | 81.8% | 73.3% | 9.6% | 73.8% | 28.5% | N/A | N/A | 84.7% | 59.4% | 55.3% | 7.6% | 45.6% |
| NVIDIA Nemotron 3 Nano 30B A3B (Reasoning) | NVIDIA | 2025-12-15 | 24.3 | 19.0 | 91.0 | 79.4% | 75.7% | 10.2% | 74.1% | 29.6% | N/A | N/A | 91.0% | 71.1% | 33.7% | 13.6% | 40.9% |
| Qwen3 30B A3B 2507 (Reasoning) | Alibaba | 2025-07-30 | 22.4 | 14.7 | 56.3 | 80.5% | 70.7% | 9.8% | 70.7% | 33.3% | 97.6% | 90.7% | 56.3% | 50.7% | 59.0% | 5.3% | 28.1% |
| GLM-4.7-Flash (Non-reasoning) | Z AI | 2026-01-19 | 21.5 | 11.0 | N/A | N/A | 45.2% | 4.9% | N/A | 25.5% | N/A | N/A | N/A | 46.3% | 14.7% | 3.8% | 91.8% |
| Qwen3 Coder 30B A3B Instruct | Alibaba | 2025-07-31 | 20.0 | 19.4 | 29.0 | 70.6% | 51.6% | 4.0% | 40.3% | 27.8% | 89.3% | 29.7% | 29.0% | 32.7% | 29.0% | 15.2% | 34.5% |
| Qwen3 VL 30B A3B (Reasoning) | Alibaba | 2025-10-03 | 19.6 | 13.1 | 82.3 | 80.7% | 72.0% | 8.7% | 69.7% | 28.8% | N/A | N/A | 82.3% | 45.1% | 40.7% | 5.3% | 19.9% |
| Olmo 3 32B Think | Allen Institute for AI | 2025-11-20 | 18.9 | 10.5 | 73.7 | 75.9% | 61.0% | 5.9% | 67.2% | 28.6% | N/A | N/A | 73.7% | 49.1% | 0.0% | 1.5% | 0.0% |
| DeepSeek R1 Distill Qwen 32B | DeepSeek | 2025-01-20 | 17.2 | N/A | 63.0 | 73.9% | 61.5% | 5.5% | 27.0% | 37.6% | 94.1% | 68.7% | 63.0% | 22.9% | 9.7% | N/A | N/A |
| Qwen3 Omni 30B A3B (Reasoning) | Alibaba | 2025-09-22 | 15.6 | 12.7 | 74.0 | 79.2% | 72.6% | 7.3% | 67.9% | 30.6% | N/A | N/A | 74.0% | 43.4% | 0.0% | 3.8% | 21.3% |
| Qwen3 30B A3B (Reasoning) | Alibaba | 2025-04-28 | 15.3 | 11.0 | 72.3 | 77.7% | 61.6% | 6.6% | 50.6% | 28.5% | 95.9% | 75.3% | 72.3% | 41.5% | 0.0% | 2.3% | 26.0% |
| NVIDIA Nemotron 3 Nano 30B A3B (Non-reasoning) | NVIDIA | 2025-12-15 | 13.3 | 15.8 | 13.3 | 57.9% | 39.9% | 4.6% | 36.0% | 23.0% | N/A | N/A | 13.3% | 37.5% | 6.7% | 12.1% | 25.4% |
| Gemma 3 27B Instruct | Google | 2025-03-12 | 10.2 | 9.6 | 20.7 | 66.9% | 42.8% | 4.7% | 13.7% | 21.2% | 88.3% | 25.3% | 20.7% | 31.8% | 5.7% | 3.8% | 10.5% |
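To compare models on a single metric without scanning the whole table, a small script can rank them while skipping N/A entries. The sketch below copies a subset of the scores from the table; the dictionary layout and the `rank` helper are our own.

```python
from typing import Dict, List, Optional, Tuple

# A subset of the Artificial Analysis index scores from the table (None = N/A).
SCORES: Dict[str, Dict[str, Optional[float]]] = {
    "GLM-4.7-Flash (Reasoning)": {"intelligence": 30.1, "coding": 25.9, "math": None},
    "Qwen3 VL 32B (Reasoning)": {"intelligence": 24.5, "coding": 14.5, "math": 84.7},
    "NVIDIA Nemotron 3 Nano 30B A3B (Reasoning)": {"intelligence": 24.3, "coding": 19.0, "math": 91.0},
    "Qwen3 30B A3B 2507 (Reasoning)": {"intelligence": 22.4, "coding": 14.7, "math": 56.3},
    "Qwen3 Coder 30B A3B Instruct": {"intelligence": 20.0, "coding": 19.4, "math": 29.0},
}


def rank(metric: str, scores: Dict[str, Dict[str, Optional[float]]] = SCORES) -> List[Tuple[str, float]]:
    """Return (model, score) pairs sorted best-first, skipping models with N/A."""
    available = [(m, s[metric]) for m, s in scores.items() if s.get(metric) is not None]
    return sorted(available, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for metric in ("intelligence", "coding", "math"):
        best, score = rank(metric)[0]
        print(f"{metric}: {best} ({score})")
```

Dropping N/A rows before sorting matters here, because GLM-4.7-Flash has no published Math Index and would otherwise break the comparison.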

Key Benchmark Insights

General Intelligence and Reasoning Tasks

For broad intellectual work, GLM-4.7-Flash leads in reasoning mode with a 30.1 Intelligence Index. It is a 30B-A3B MoE model, and our practical agentic testing confirms the score isn't just a number: it was the only model to autonomously build a high-quality game. Behind it, Qwen3 VL 32B (24.5) and NVIDIA's Nemotron 3 Nano 30B (24.3) trade blows. Nemotron's 91.0 Math Index is particularly impressive. It is the highest in this dataset and suggests exceptional mathematical reasoning capabilities. For users prioritizing pure problem-solving ability, Nemotron hits the sweet spot of size and performance, though our practical tests show it needs careful VRAM management at high context, at least when run with Ollama.

The Qwen3 30B A3B 2507 variant deserves mention here with its strong 90.7 AIME score, indicating robust competition-level math performance. However, its lower overall intelligence index (22.4) suggests it may sacrifice breadth for depth in specific domains.

Coding and Development

GLM-4.7-Flash (Reasoning) dominates the coding benchmarks with a 25.9 Coding Index, well ahead of every other model in this comparison (the runner-up, Qwen3 Coder 30B A3B Instruct, scores 19.4). That is remarkable for a MoE model with only about 3B active parameters per token, and it suggests training focused heavily on code generation. In our hardware tests, the model delivered a solid 42.47 tokens/second at 16K context on the NVIDIA L4, though speed drops sharply at very high context (see Practical Performance below).

Next comes Qwen3 Coder 30B A3B Instruct with a 19.4 Coding Index, edging out NVIDIA Nemotron 3 Nano 30B's 19.0. Its 15.2% TerminalBench Hard score is second only to GLM-4.7-Flash (22.0%). However, its 40.3% LiveCodeBench score trails most reasoning models in this comparison, where Nemotron leads with 74.1%. For developers who want a code-specialized model, Qwen3 Coder is a solid choice. It shares the 30B A3B MoE design of the qwen3:30b model that proved most stable in our hardware tests. GLM-4.7-Flash, by contrast, offers stronger coding performance for a similar VRAM footprint.

Multimodal and Vision Tasks

For vision-language work, Qwen3 VL 32B is the highest-scoring multimodal model in this comparison. Its 84.7 Math Index combined with native vision processing makes it well suited to tasks involving diagrams, charts, or visual problem-solving. The reasoning variant keeps competitive general scores while adding this dimension, though users should note its slower output rate of 85 tokens/second. Qwen3 VL 30B A3B offers a slightly lighter MoE alternative with an 82.3 Math Index while staying within typical 24GB constraints.

The Bottom Line

GLM-4.7-Flash offers the best coding performance and agentic capability while leaving VRAM headroom at 16K-32K context. NVIDIA Nemotron 3 Nano 30B excels at mathematical reasoning. Qwen3's specialized variants (Coder, VL) deliver targeted capabilities for development and multimodal work, and the general Qwen3 30B A3B showed the best high-context stability in our practical tests.

Practical Performance Testing: Where Theory Meets Hardware

Benchmarks are useful, but they don't tell you how a model feels when you're editing a 4,000-line file or summarizing a 50-page PDF. To find the truth, we standardized two practical workloads: Large Context Summarization (measuring performance degradation as context fills) and Agentic Coding (testing complex instruction following and autonomy).

Hardware Benchmarks: The Context Cliff

We performed these tests by asking models to summarize actual blog posts from this website on an NVIDIA L4 (24GB VRAM) system. We used official Q4_K_M quantized versions from the Ollama library and ensured at least 80% of the configured context was filled with actual input text. This mimics real-world usage, unlike synthetic benchmarks that often test high context settings with empty prompts to produce inflated TPS numbers.
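Ollama's non-streaming API responses include token counts and timings (`prompt_eval_count`, `eval_count`, and `eval_duration` in nanoseconds). Those three fields are enough to reproduce both an eval rate and an 80% context-fill check. The sketch below assumes those fields. The helper names and the sample numbers are illustrative, not our measured results.

```python
NS_PER_SECOND = 1_000_000_000


def eval_rate(response: dict) -> float:
    """Generation speed in tokens/second from an Ollama /api/generate response."""
    return response["eval_count"] / (response["eval_duration"] / NS_PER_SECOND)


def context_fill(response: dict, num_ctx: int) -> float:
    """Fraction of the configured context window occupied by the prompt."""
    return response["prompt_eval_count"] / num_ctx


def is_valid_run(response: dict, num_ctx: int, min_fill: float = 0.8) -> bool:
    """Accept a run only if the prompt filled at least `min_fill` of the context."""
    return context_fill(response, num_ctx) >= min_fill


if __name__ == "__main__":
    # Hypothetical numbers for a 16K-context run, not a measured result.
    sample = {"prompt_eval_count": 13_200, "eval_count": 500, "eval_duration": 10_000_000_000}
    print(eval_rate(sample), is_valid_run(sample, num_ctx=16_384))
```

Rejecting runs below the fill threshold is what separates a real long-context measurement from a large-window setting with a short prompt.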

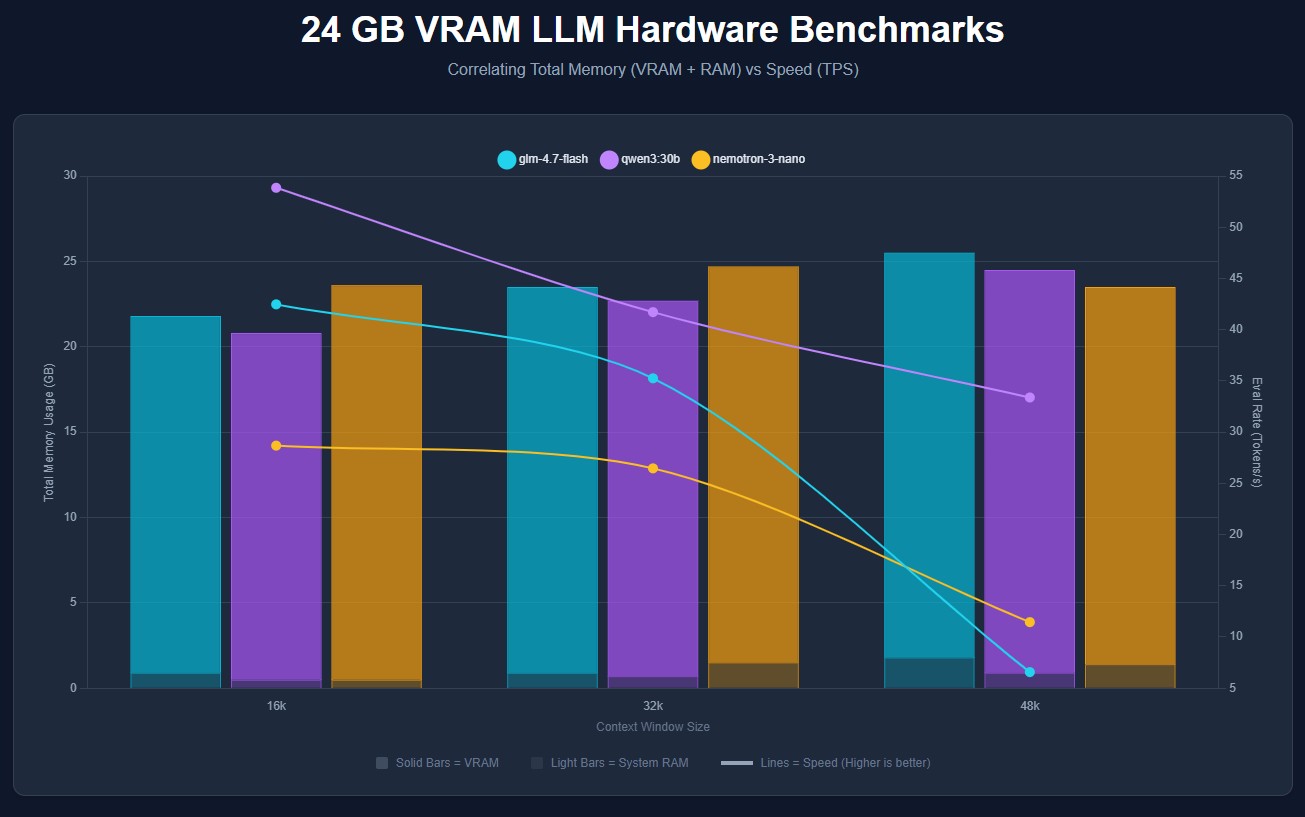

Key Finding: Qwen3 30B is the stability king. GLM-4.7-Flash starts strong but hits a "context cliff" at 48K tokens, dropping to 6.57 t/s as part of the workload spills into system RAM. Qwen3 30B still delivers a usable 33.38 t/s at 48K context, keeping 100% of the workload on the GPU. NVIDIA Nemotron uses more total memory (VRAM + RAM combined). Ollama automatically offloads some of its layers to the CPU even at 16K context (a 10% CPU / 90% GPU split), despite there being enough memory to fit the entire model in VRAM. The result is slower generation at every context size.

Practical Insight: In our testing, speeds around 40 tokens/second felt responsive enough for agentic coding workflows, even with the added overhead of reasoning models. As long as a model stays fully on the GPU (no offloading), it remains viable for interactive tasks such as agentic coding, vibe coding, live chat, and document analysis.
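To check whether a model is staying fully on the GPU, you can query Ollama's `/api/ps` endpoint. It lists each loaded model's total `size` and the portion resident in VRAM (`size_vram`). The sketch below turns those two numbers into a CPU/GPU split similar to the PROCESSOR column of `ollama ps`. The formatting helper is our own approximation, not Ollama's code.

```python
import json
import urllib.request

PS_URL = "http://localhost:11434/api/ps"  # lists models currently loaded by Ollama


def processor_split(size: int, size_vram: int) -> str:
    """Describe how a loaded model is split between CPU (system RAM) and GPU (VRAM)."""
    if size <= 0:
        raise ValueError("size must be positive")
    gpu_pct = round(100 * size_vram / size)  # rounding hides tiny spills (<0.5%)
    cpu_pct = 100 - gpu_pct
    if cpu_pct == 0:
        return "100% GPU"
    if gpu_pct == 0:
        return "100% CPU"
    return f"{cpu_pct}%/{gpu_pct}% CPU/GPU"


def loaded_models() -> list:
    """Fetch the running models from a local Ollama server."""
    with urllib.request.urlopen(PS_URL) as resp:
        return json.load(resp).get("models", [])


if __name__ == "__main__":
    for m in loaded_models():
        print(m["name"], processor_split(m["size"], m["size_vram"]))
```

Running this after each context change is a quick way to spot the moment a model starts offloading, before the slowdown shows up in generation speed.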

Note: RAM values in the table below reflect only the memory consumed by the model inference process. We have already deducted the baseline system RAM usage to show the actual overhead from running the models.

| Context | Model | VRAM (GB) | RAM (GB) | Eval Rate (t/s) | Hardware Utilization |
|---|---|---|---|---|---|
| 16k | glm-4.7-flash | 20.9 | 0.9 | 42.47 | 100% GPU |
| 32k | glm-4.7-flash | 22.6 | 0.9 | 35.25 | 100% GPU |
| 48k | glm-4.7-flash | 23.7 | 1.8 | 6.57 | 5% CPU / 95% GPU |
| 16k | qwen3:30b | 20.3 | 0.5 | 53.85 | 100% GPU |
| 32k | qwen3:30b | 22.0 | 0.7 | 41.71 | 100% GPU |
| 48k | qwen3:30b | 23.6 | 0.9 | 33.38 | 100% GPU |
| 16k | nemotron-3-nano | 23.1 | 0.5 | 28.68 | 10% CPU / 90% GPU |
| 32k | nemotron-3-nano | 23.2 | 1.5 | 26.46 | 10% CPU / 90% GPU |
| 48k | nemotron-3-nano | 22.1 | 1.4 | 11.45 | 14% CPU / 86% GPU |

24 GB VRAM LLM Hardware Benchmarks: GLM 4.7 Flash vs Nemotron 3 Nano vs Qwen 3 30B A3B
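The eval rates in the table make the size of the cliff easy to quantify. Dividing each model's 48K rate by its 16K rate gives the share of short-context speed it retains. The numbers below are copied from the table; the script only does the arithmetic.

```python
# Eval rates (tokens/second) at 16K and 48K context, copied from the table.
RATES = {
    "glm-4.7-flash": (42.47, 6.57),
    "qwen3:30b": (53.85, 33.38),
    "nemotron-3-nano": (28.68, 11.45),
}


def retained(short_ctx_rate: float, long_ctx_rate: float) -> float:
    """Fraction of the short-context generation speed kept at long context."""
    return long_ctx_rate / short_ctx_rate


for model, (r16k, r48k) in RATES.items():
    print(f"{model}: keeps {retained(r16k, r48k):.1%} of its 16K speed at 48K")
```

GLM-4.7-Flash keeps about 15% of its 16K speed at 48K, Nemotron about 40%, and Qwen3 30B about 62%. That gap is the practical meaning of "stability" in this section.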

Agentic Coding Capabilities: The Flappy Bird Challenge

Benchmarks often fail to capture "vibe coding" - the ability of a model to iteratively build a complex creative project without much technical oversight by the user. To assess this, we conducted a rigorous Agentic Coding Challenge using the Cline extension within Cursor IDE.

Test Methodology

- Environment: Models ran via Ollama (official library versions) connected to Cline in Cursor IDE.

- Context Settings: Context window set to 48k tokens. The "Use compact prompt" setting was disabled to ensure models received the full system instruction set.

- Workflow:

  - Started in Plan Mode: The model's initial plan was approved without modification.

  - Switched to Act Mode: The model executed the coding tasks.

  - Iteration: We provided up to 5 feedback loops, feeding back any runtime errors, visual glitches, or gameplay issues to give the model a chance to self-correct.

The Prompt: "Create a flappy birds clone with high quality graphics. Use HTML, CSS, and JavaScript for the frontend UI, and a very basic Python backend using only the standard library. No frameworks. No db. Work in the same dir."
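The workflow above amounts to a bounded feedback loop. The Python below is a rough sketch of that protocol, not of how Cline works internally. `plan`, `act`, and `check` are hypothetical callables. They stand in for approving the plan, letting the model edit files, and a person play-testing the game and reporting the first issue found.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class ChallengeResult:
    feedback_turns: int   # how many feedback loops were actually used
    succeeded: bool       # True if the final build passed the check
    issues: List[str] = field(default_factory=list)


def run_challenge(
    prompt: str,
    plan: Callable[[str], str],
    act: Callable[[str], None],
    check: Callable[[], Optional[str]],
    max_feedback_turns: int = 5,
) -> ChallengeResult:
    """Plan once, act, then feed back up to `max_feedback_turns` issues."""
    approved_plan = plan(prompt)   # Plan Mode: the plan is accepted unmodified
    act(approved_plan)             # Act Mode: the model writes the code
    issues: List[str] = []
    for _ in range(max_feedback_turns):
        issue = check()            # hypothetical play-test; None means no problems
        if issue is None:
            return ChallengeResult(len(issues), True, issues)
        issues.append(issue)
        act(issue)                 # runtime error, glitch, or gameplay bug fed back
    # Judge the build left after the last allowed fix attempt.
    return ChallengeResult(len(issues), check() is None, issues)
```

Counting turns this way makes the 5-turn budget explicit and records a model that needed no fixes as using zero feedback turns.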

Winner: GLM-4.7-Flash

GLM-4.7-Flash didn't just write code; it understood the assignment. While other models drew rectangles, GLM-4.7 built a procedural rendering engine using HTML5 Canvas to generate gradients, clouds, and particle effects for trails and collisions. It implemented a polished start screen, high score tracking via localStorage, and a "glassmorphism" UI design.

Verdict: The only model to produce a truly "high quality" and fully playable result. Controls were slightly jerky, but the visuals and feature set were leagues ahead of the competition.

Model Comparison

| Feature | GLM-4.7-Flash | Nemotron-3-Nano | Qwen3-30B |
|---|---|---|---|
| Visuals | Excellent. Procedural gradients, particle effects, parallax clouds. | Poor. Hallucinated SVG data URIs (broken images), basic rectangles. | Poor. Simple yellow circle and solid green rectangles. |
| Gameplay | Complete. Smooth physics (despite jerky controls), scoring, restart, input handling. | Basic. Functional but lacks tailored start/restart mechanics. | Unplayable. Critical loop logic bug created a solid wall of pipes. |
| Code Quality | High. Modular, advanced server threading, robust state management. | Medium. Minimalist. Performance antipatterns (creating images in render loop). | Low. Fatal logic errors in game loop timing. |

Visual comparison: GLM-4.7-Flash (left) delivers procedural graphics with particle effects, while Nemotron and Qwen3 produce basic geometric shapes

Model Recommendations by Use Case

Best Overall & Coding: GLM-4.7-Flash (Reasoning)

When to choose: Development, agentic workflows, complex reasoning, and general assistance.

Key strengths:

- Dominant 30.1 Intelligence Index

- Winner of Flappy Bird Agentic Challenge

- Highest Coding Index (25.9)

- Strong 42.47 t/s generation speed (short context)

Best for Mathematical Reasoning: NVIDIA Nemotron 3 Nano 30B A3B (Reasoning)

When to choose: Pure math problems, scientific research, logic puzzles.

Key strengths:

- Unrivaled 91.0 Math Index

- Excellent 75.7 GPQA score

- Strong 24.3 Intelligence Index

Best Multimodal: Qwen3 VL 32B (Reasoning)

When to choose: Visual analysis, UI design coding, chart interpretation.

Key strengths:

- Best-in-class MMLU-Pro (81.8)

- Native vision with strong reasoning

Best for Long Context Reliability: Qwen3 30B A3B

When to choose: Summarizing large documents, analyzing codebases >30k tokens.

Key strengths:

- Zero offloading at 48k context (100% GPU)

- 33-53 t/s across 16K-48K context

- No "context cliff" like GLM or Nemotron

*For contexts significantly larger than 48k, consider GPT-OSS 20B if you can accept a slight trade-off in reasoning capability.

Optimization Strategies for 24GB VRAM

Quantization Selection

Use Q4_K_M quantization for the best balance between quality and VRAM usage. Compared with 16-bit weights, it shrinks the model by roughly 70-75% with minimal quality loss, allowing 30B-32B models to fit comfortably in 24GB VRAM.
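The savings follow directly from bits per weight. This minimal sketch assumes 16 bits per weight for unquantized FP16/BF16 and roughly 4.5-5 effective bits for Q4_K_M, since its block scales add overhead beyond the nominal 4 bits. It estimates weight storage only, excluding the KV cache and runtime buffers.

```python
def weights_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n = 30e9                  # a 30B-parameter model
    fp16 = weights_gb(n, 16)  # 60.0 GB
    q4 = weights_gb(n, 4.5)   # ~16.9 GB at an assumed Q4_K_M-like density
    print(f"FP16: {fp16:.1f} GB, ~4.5-bit: {q4:.1f} GB, saving {1 - q4 / fp16:.0%}")
```

At a nominal 4 bits the saving is exactly 75%. The K-quant overhead brings it closer to 70%, which still leaves a 30B model well under 24GB before the KV cache is counted.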

Beginner tip: Popular platforms like Ollama and LM Studio provide Q4 quantized models by default through their official libraries, so you can simply download and run without worrying about quantization settings. To learn more about how quantization works and other quantization formats, check out our complete guide to LLM quantization.

Context Window Management

Start with 8K-32K context windows and scale based on actual needs. Monitor VRAM usage and generation speed as you increase context length.
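Context length drives VRAM because the KV cache grows linearly with it: each token stores one key and one value vector per layer and per KV head. The sketch below estimates that growth and the largest context that fits a VRAM budget. The architecture numbers (48 layers, 4 KV heads, head dimension 128, FP16 cache) and the weight and overhead figures are illustrative assumptions. Read the real values from your model's configuration, and note that hybrid or latent-attention designs use different cache layouts.

```python
def kv_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int, bytes_per_elem: int = 2) -> int:
    """Bytes of KV cache per token: keys + values for every layer and KV head."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int, n_ctx: int,
                   bytes_per_elem: int = 2) -> int:
    """Total KV cache size for a context window of n_ctx tokens."""
    return kv_bytes_per_token(n_layers, n_kv_heads, head_dim, bytes_per_elem) * n_ctx


def max_context_tokens(vram_gb: float, weights_gb: float, overhead_gb: float,
                       per_token_bytes: int) -> int:
    """Largest context whose KV cache fits in the VRAM left after weights and overhead."""
    free_bytes = (vram_gb - weights_gb - overhead_gb) * 1e9
    return max(0, int(free_bytes // per_token_bytes))


if __name__ == "__main__":
    per_tok = kv_bytes_per_token(n_layers=48, n_kv_heads=4, head_dim=128)  # hypothetical config
    for ctx in (16_384, 32_768, 49_152):
        print(f"{ctx:>6} tokens -> {kv_cache_bytes(48, 4, 128, ctx) / 1e9:.2f} GB KV cache")
    # Hypothetical budget: 24 GB card, 18 GB of weights, 1 GB runtime overhead.
    print(max_context_tokens(24, 18, 1.0, per_tok))
```

Passing `bytes_per_elem=1` roughly approximates an 8-bit KV cache, which about halves the estimate and is one way to buy extra context on a 24GB card.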

Need to adjust context settings? Check our guides on increasing context window in Ollama and increasing context window in LM Studio.

Reasoning vs Speed Trade-offs

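Reasoning variants score substantially higher on complex tasks. In the benchmark table, GLM-4.7-Flash reaches a 30.1 Intelligence Index with reasoning versus 21.5 without (about 40% higher), and Nemotron 3 Nano scores 24.3 versus 13.3 (over 80% higher). The cost is speed, since reasoning models generate thinking tokens before answering. Use reasoning variants as your default and switch to non-reasoning only for simple, speed-critical tasks. Many reasoning models also let you toggle reasoning on or off per request, giving you flexibility without switching models entirely.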
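With Ollama, recent versions expose this toggle through a `think` field on chat requests for models that support thinking. This minimal payload sketch assumes a local server and the `/api/chat` endpoint. The helper names are ours, and you should confirm that your Ollama version and model support the field.

```python
import json
import urllib.request

CHAT_URL = "http://localhost:11434/api/chat"  # default local Ollama chat endpoint


def chat_payload(model: str, question: str, think: bool) -> dict:
    """Build a non-streaming chat request with reasoning switched on or off."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "think": think,   # False skips the reasoning trace for quick answers
        "stream": False,
    }


def ask(model: str, question: str, think: bool = True) -> dict:
    """Send the request and return the assistant message from the reply."""
    body = json.dumps(chat_payload(model, question, think)).encode("utf-8")
    req = urllib.request.Request(CHAT_URL, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["message"]


if __name__ == "__main__":
    quick = ask("qwen3:30b", "What is 17 * 23?", think=False)
    print(quick["content"])
```

Keeping one model loaded and flipping `think` per request avoids the reload cost of swapping between separate reasoning and non-reasoning variants.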

Recommended GPUs for 24GB VRAM Models

To run the 30B-32B class models effectively, you need a GPU with at least 24GB of VRAM, or an Apple Silicon Mac with ample unified memory. Here are the best options currently available, ranging from consumer flagships to professional workstation cards.

| GPU Model | Type | VRAM | Best For |
|---|---|---|---|
| NVIDIA RTX 3090 / 3090 Ti | Consumer (Used) | 24GB GDDR6X | Best Value: Excellent performance per dollar on the used market. |
| NVIDIA RTX 4090 | Consumer (High-End) | 24GB GDDR6X | Best Performance: Fastest inference speeds for consumer hardware. |
| NVIDIA RTX 5090 | Consumer (Flagship) | 32GB GDDR7 | Future Proof: Massive 32GB buffer allows running larger quants or higher context. |
| NVIDIA A10G | Data Center | 24GB GDDR6 | Cloud Inference: Budget-friendly data center option for smaller models. |
| NVIDIA L4 | Data Center | 24GB GDDR6 | Cloud Inference: Efficient, stable card for server deployment with 24GB capacity. |
| NVIDIA RTX 6000 Ada | Workstation | 48GB GDDR6 | Pro Workflows: Double the VRAM for running 70B models or massive batches. |
| Mac Studio (M4 Max) | Apple Silicon | 36GB-128GB Unified | Mac Alternative: Configurable unified memory, slower inference than NVIDIA but good for development. |
| Mac Studio (M3 Ultra) | Apple Silicon | 96GB-512GB Unified | Mac Alternative: Massive memory capacity for very large models, up to 512GB available. |

Conclusion

With 24GB of VRAM, you're no longer just running chatbots. You're running capable reasoning engines. Our testing shows that while benchmark scores are useful, practical performance reveals the true character of these models. GLM-4.7-Flash is the clear winner for developers and creative thinkers who need a model that "gets it" and can build complex projects with minimal hand-holding. For production-grade reliability and massive context workflows, Qwen3 30B remains the unshakeable foundation that won't buckle under pressure.

Build your model mix strategically: Start with GLM-4.7-Flash as your daily driver for coding and creative work. Keep Qwen3 30B on standby for heavy-duty document processing and long-context stability. If your workflow involves visual analysis, UI mockup interpretation, or chart reading, add Qwen3 VL 32B to your arsenal. For mathematics-intensive research or scientific computing, NVIDIA Nemotron 3 Nano 30B delivers unmatched precision. And if you regularly work with contexts exceeding 48k tokens, GPT-OSS 20B offers a viable path forward without breaking your VRAM budget.

The 24GB VRAM tier isn't just about running bigger models. It's about running the right model for each task. With the insights from this guide, you can now make informed decisions that maximize both performance and productivity.

References

- Artificial Analysis - Benchmark scores for AIME 2025, LiveCodeBench, MMLU-Pro, GPQA, Math 500, IFBench, and the Artificial Analysis Intelligence Index. Available at: https://artificialanalysis.ai/

Frequently Asked Questions

What is the best local LLM for 24GB VRAM in 2026?

Our tests reveal distinct winners by use case. GLM-4.7-Flash (Reasoning) is the best overall all-rounder, dominating with a 30.1 Intelligence Index and winning our agentic coding challenge. For production reliability and long context, Qwen3 30B offers unmatched stability with zero offloading at 48k tokens. For pure mathematics, NVIDIA Nemotron 3 Nano 30B leads with a 91.0 Math Index. Choose GLM-4.7-Flash for daily driving and Qwen3 30B for heavy lifting.

How do 24GB VRAM models compare to 16GB VRAM models?

The jump from 16GB to 24GB VRAM represents a shift from simple chat and automation workflows to practical agentic coding. While not matching the complexity of 70B+ models for long, multi-step tasks, 30B models like GLM-4.7-Flash excel at boilerplate generation, UI development, and simple autonomous coding tasks. They work brilliantly as execution models when paired with larger planning models, or standalone for straightforward agentic workflows. Think of it as graduating from a helpful assistant to a capable junior developer that can actually build things.

Should I choose reasoning or non-reasoning variants?

Reasoning variants significantly outperform non-reasoning versions on complex tasks. For example, NVIDIA Nemotron 3 Nano 30B A3B (Reasoning) achieves 24.3 intelligence index vs 13.3 for the non-reasoning variant, and EXAONE 4.0 32B (Reasoning) scores 16.6 vs 11.5. Choose reasoning variants for mathematical problem-solving, complex coding, logical analysis, and multi-step reasoning tasks. Use non-reasoning variants only when speed is critical and tasks are straightforward.

What are the best models for coding with 24GB VRAM?

GLM-4.7-Flash (Reasoning) is the clear coding champion with a 25.9 Coding Index and 30.1 Intelligence Index. It won our Flappy Bird agentic coding challenge by building a procedural rendering engine with particle effects. For a code-specialized alternative, Qwen3 Coder 30B A3B Instruct offers a 19.4 Coding Index, though its LiveCodeBench score (40.3%) trails most reasoning models in our comparison. NVIDIA Nemotron 3 Nano 30B follows with a 19.0 Coding Index but excels more at mathematical reasoning. Start with GLM-4.7-Flash for daily coding and agentic workflows.

Are multimodal models worth the VRAM overhead on 24GB systems?

Yes, if your workflow involves visual content. Qwen3 VL 32B models provide native screenshot analysis, document understanding, and UI debugging capabilities. The reasoning variant achieves 24.5 intelligence index with 84.7 math index, making it competitive with text-only models while adding vision capabilities. The VRAM overhead is minimal on 24GB systems, making multimodal models practical for mixed text-vision workflows.

How do I optimize 24GB VRAM for maximum performance?

Key optimization strategies: (1) Use Q4_K_M quantization to fit larger models while maintaining quality. (2) Size the context window to your actual needs rather than maximizing available space: start conservatively (8K-32K) and scale up only when your workflow demands it. (3) Enable Flash Attention to reduce attention memory overhead; in Ollama, it is also required for KV cache quantization. (4) Choose reasoning variants for complex tasks and non-reasoning variants for simple queries.
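For item (3), Ollama reads Flash Attention and KV cache settings from environment variables when the server starts. These are `OLLAMA_FLASH_ATTENTION`, and `OLLAMA_KV_CACHE_TYPE` with values such as `f16`, `q8_0`, or `q4_0`; quantized caches require Flash Attention. The sketch below builds that environment and launches `ollama serve`. The helper is ours, and variable support depends on your Ollama version, so check its documentation.

```python
import os
import subprocess

VALID_KV_TYPES = {"f16", "q8_0", "q4_0"}


def server_env(flash_attention: bool = True, kv_cache_type: str = "f16") -> dict:
    """Return a copy of the current environment with Ollama memory settings applied."""
    if kv_cache_type not in VALID_KV_TYPES:
        raise ValueError(f"unsupported KV cache type: {kv_cache_type}")
    if kv_cache_type != "f16" and not flash_attention:
        raise ValueError("quantized KV cache requires Flash Attention")
    env = dict(os.environ)
    env["OLLAMA_FLASH_ATTENTION"] = "1" if flash_attention else "0"
    env["OLLAMA_KV_CACHE_TYPE"] = kv_cache_type
    return env


if __name__ == "__main__":
    # q8_0 roughly halves KV cache memory versus f16, at a small precision cost.
    subprocess.run(["ollama", "serve"], env=server_env(True, "q8_0"), check=True)
```

Validating the combination up front catches the one setting pair that silently does nothing: a quantized cache type with Flash Attention switched off.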