LM Studio Web Search MCP Integration: Complete Tutorial with Tavily and Brave Search

Are you looking to supercharge your local LLM in LM Studio with real-time web search capabilities? You've come to the right place! In this comprehensive guide, I'll walk you through setting up web search functionality in LM Studio using MCP (Model Context Protocol) servers, with detailed step-by-step tutorials for both GUI-based setup and OpenAI-compatible API integration using Tavily, plus insights into Brave Search as an alternative. I have also included step-by-step video tutorials in the later sections.

What You'll Learn

- Understanding MCP fundamentals and why it transforms local LLMs

- LM Studio MCP support requirements and setup process

- Complete Tavily MCP integration with step-by-step instructions (GUI method)

- OpenAI-compatible API setup for developers using the /v1/responses endpoint with web search MCP

- Brave Search alternative configuration and comparison

- Troubleshooting common MCP issues and performance optimization

- Advanced MCP options and future developments

What is MCP and Why Should You Care?

Model Context Protocol (MCP) is an open standard that enables AI models to connect with external tools and data sources. Think of it as a bridge that allows your local LLM to access real-time information from the web, databases, APIs, and other services without compromising your privacy or requiring cloud-based solutions.

For local LLM enthusiasts, MCP represents a game-changer. Instead of being limited to the training data cutoff of your model, you can now:

- Perform real-time web searches for current events and breaking news

- Access up-to-date information on markets, weather, sports, and current affairs

- Integrate with various APIs for enhanced capabilities (calendars, databases, custom services)

- Maintain complete privacy with local processing and optional self-hosted options

MCP preserves the benefits of local models (privacy, cost savings, and control) while adding dynamic information access that narrows the gap with cloud-based solutions.

LM Studio MCP Support: What You Need to Know

LM Studio introduced MCP support starting with version 0.3.17, making it one of the most user-friendly platforms for running MCP servers with local LLMs. With the release of version 0.3.29, LM Studio now offers both GUI-based MCP integration and OpenAI-compatible API endpoints for developers. The platform supports both local and remote MCP servers and follows Cursor's mcp.json notation for configuration.

Key Requirements

- LM Studio version: 0.3.17 or higher for GUI, 0.3.29+ for API support (check Help → About in the interface)

- Node.js: Required for MCP servers (npm commands)

- Function calling: Model must support tool use/function calling

- Access mode: Power User or Developer mode enabled

What MCP Capabilities Unlock

Once configured, your model can automatically trigger web search tools in response to queries requiring current information. The model receives search results as context and integrates them into natural responses, creating seamless interactions that feel like the AI has "real-time awareness."

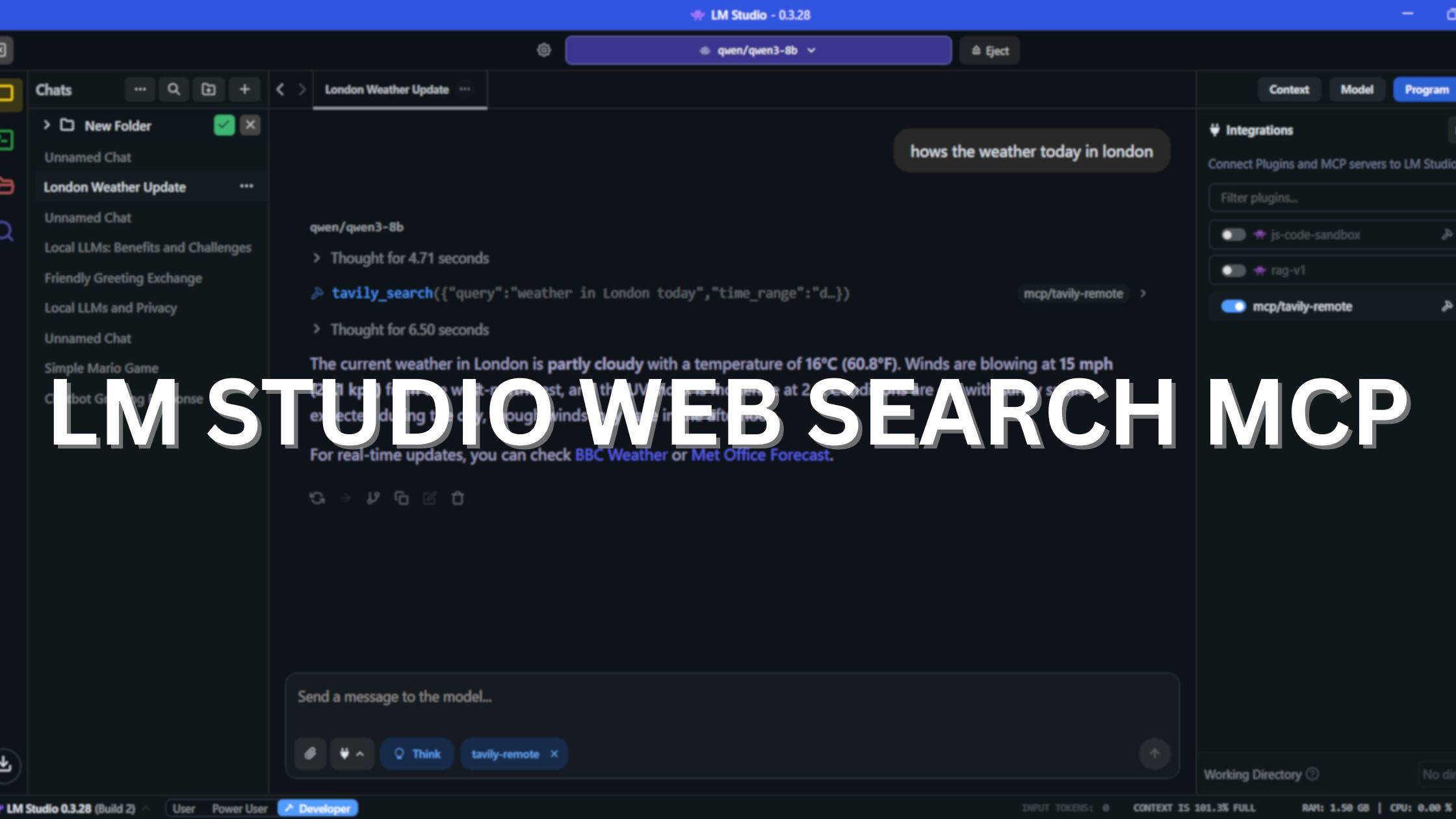

LM Studio Tavily MCP Integration (GUI) for Beginners

Based on successful real-world implementation, here's the exact step-by-step process to get Tavily web search working in LM Studio's graphical interface. Tavily offers the easiest entry point, with excellent search quality and a generous free tier. You can watch the video for a step-by-step, beginner-friendly tutorial or continue reading this post.

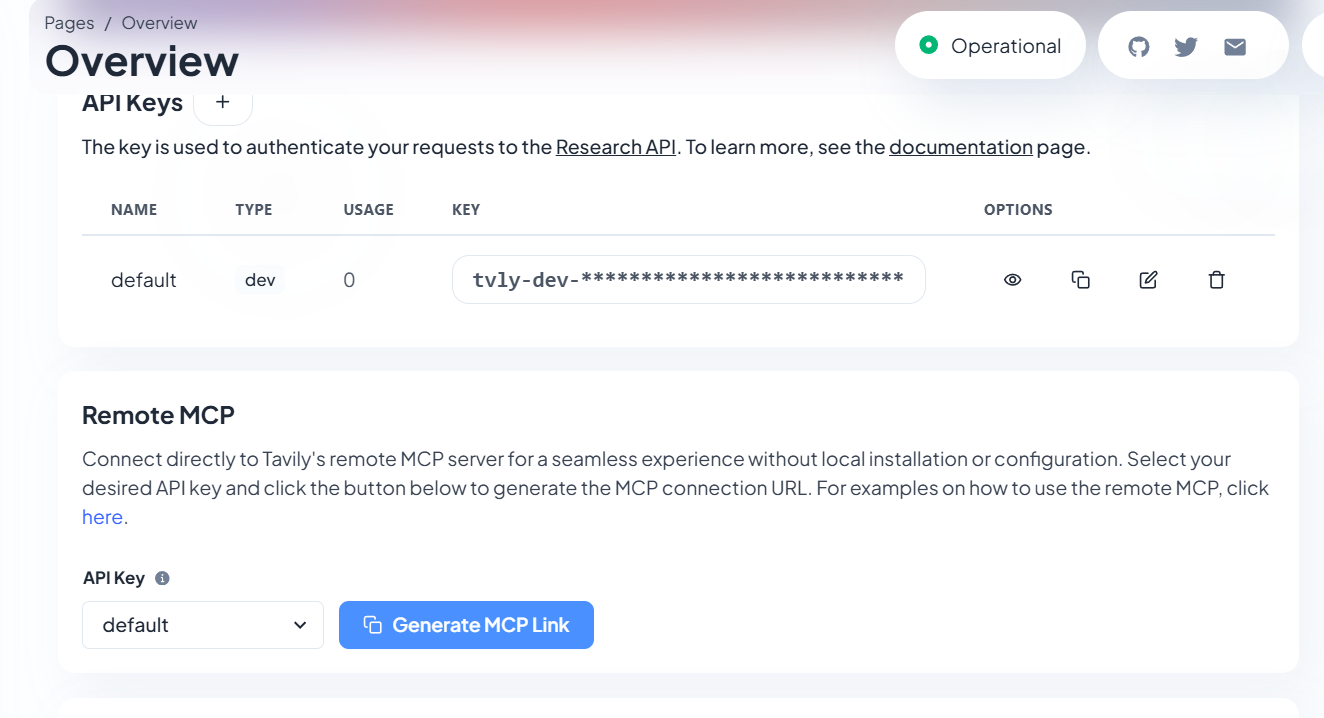

Step 1: Sign Up for Tavily API

Visit https://app.tavily.com/home and create your free account. The signup process requires only an email address - no credit card needed.

Pro Tip: Click the "Generate MCP Link" button in your dashboard once logged in. This provides the exact URL you need for LM Studio configuration. We will use it later in Step 4.

Tavily Free Tier Benefits:

- 1,000 free API credits monthly

- No payment details required

- Production-ready search API

- Specialized search capabilities (news, code, images supported)

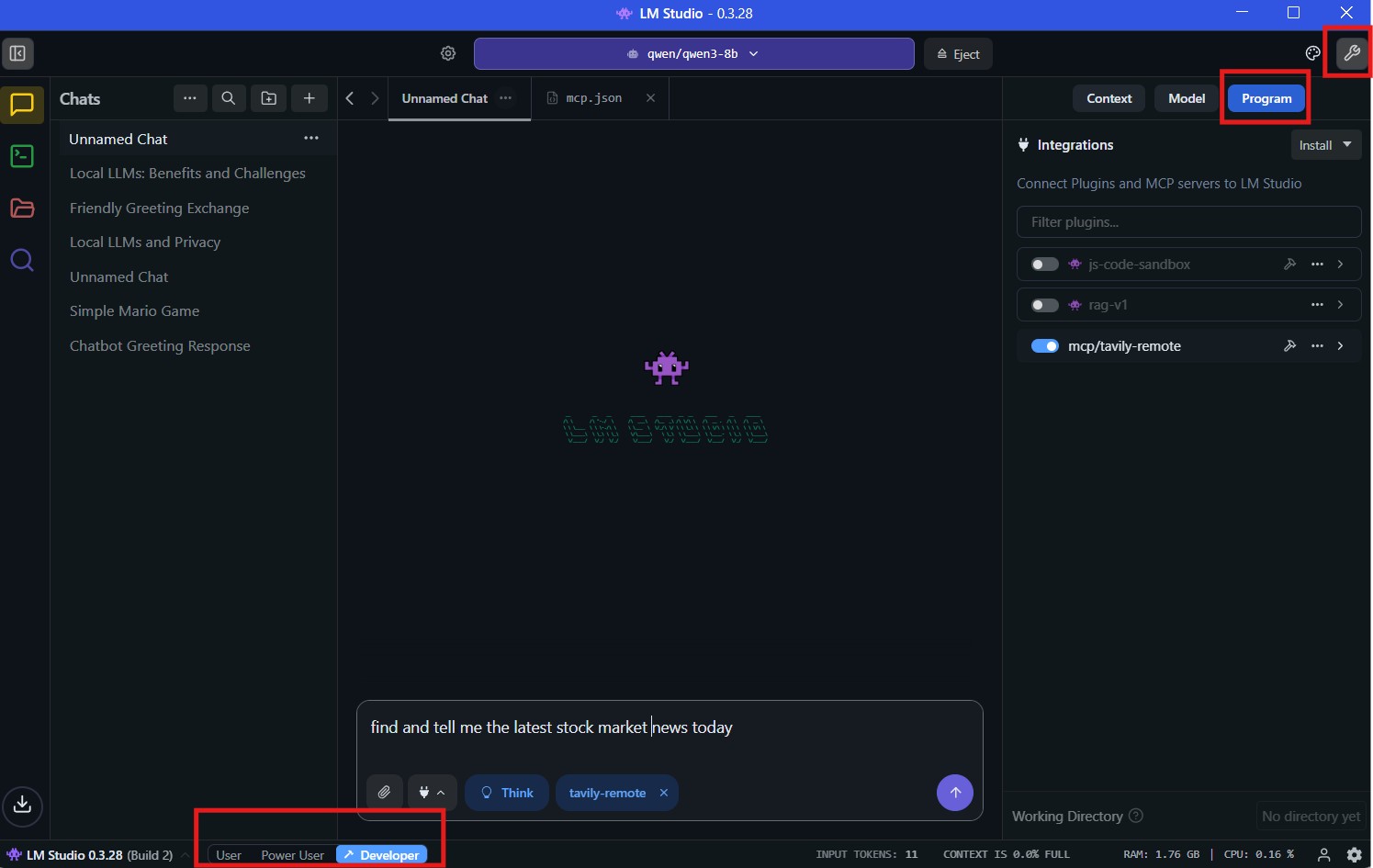

Step 2: Navigate to LM Studio MCP Settings

Launch LM Studio and ensure you're running version 0.3.17+. Switch to Power User or Developer mode at the bottom of the interface. These modes unlock advanced features including MCP configuration.

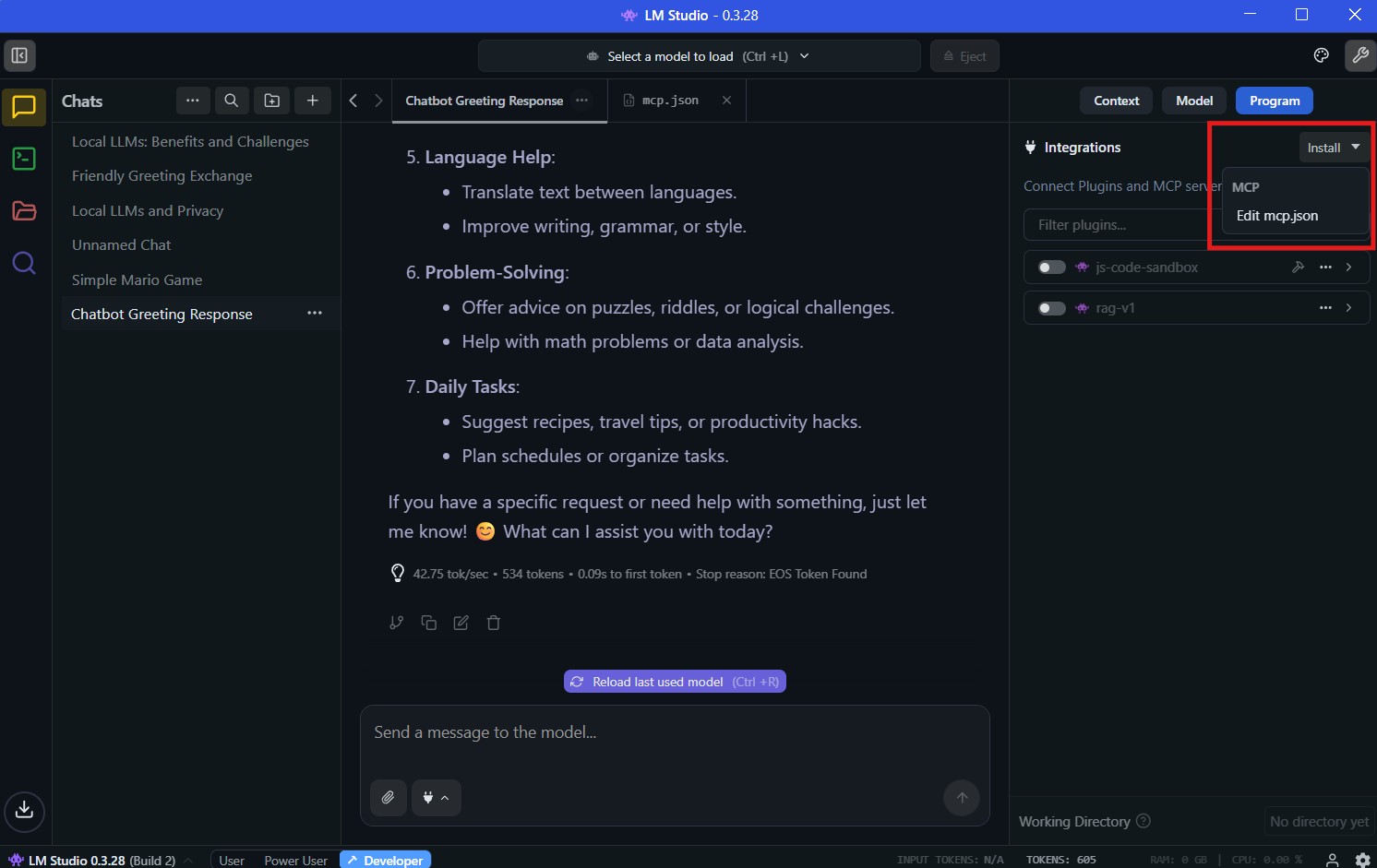

Click the settings spanner icon (🔧) in the top-right corner, then navigate to the "Program" tab in the right sidebar.

Step 3: Access MCP Configuration

Click the "Install" button in the Program tab. You'll see a security warning: "MCPs are useful, but they can be dangerous" with a brief explanation of what MCPs can do.

Click "Got it" to acknowledge the warning and proceed with "Edit mcp.json". This opens the configuration file where you'll add your MCP server settings.

Step 4: Configure Tavily MCP Server

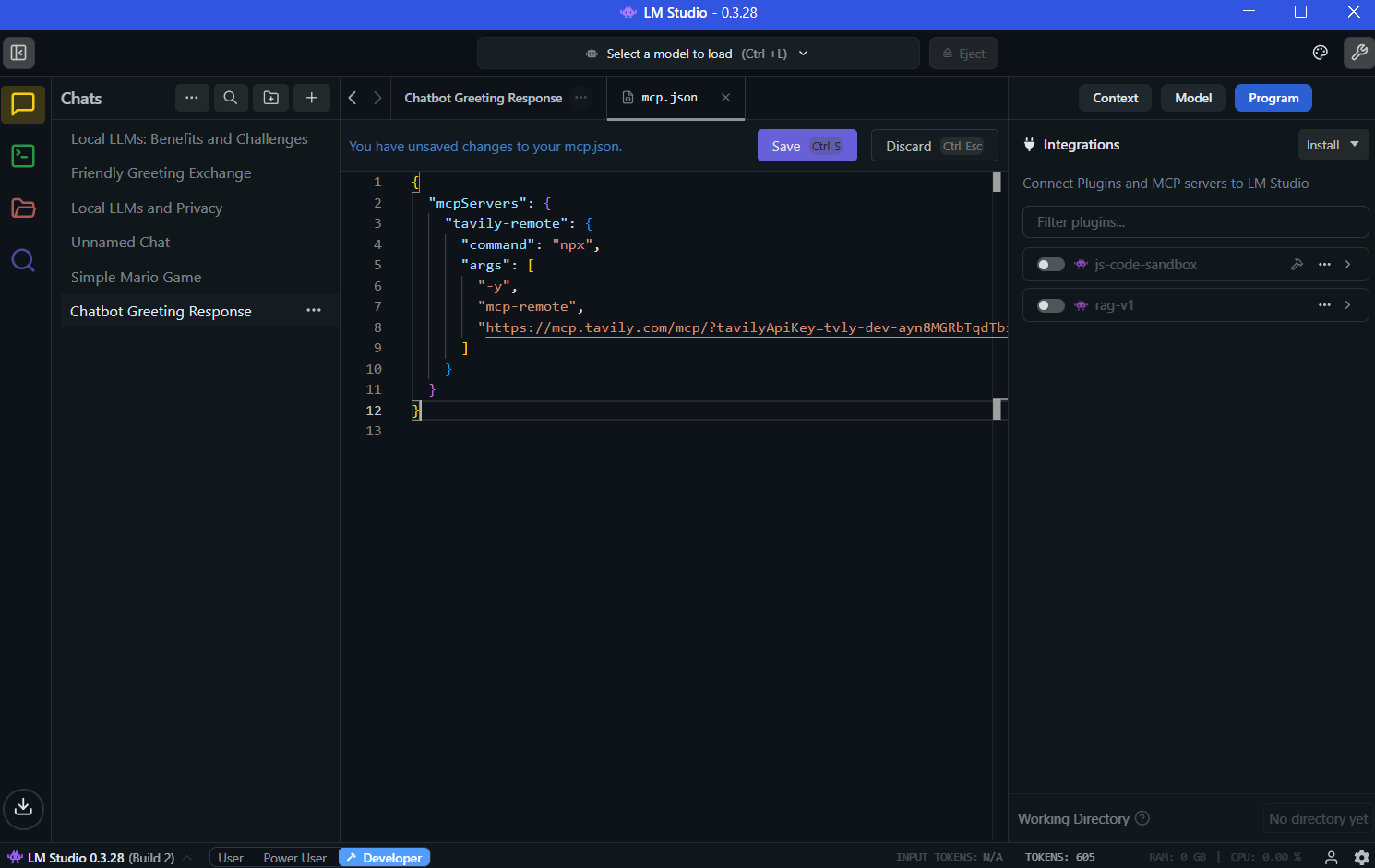

Replace the contents of the mcp.json file with the following configuration:

    {
      "mcpServers": {
        "tavily-remote": {
          "command": "npx",
          "args": [
            "-y",
            "mcp-remote",
            "https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_API_KEY_HERE"
          ]
        }
      }
    }

Critical: Replace YOUR_API_KEY_HERE with the actual API key from your Tavily MCP link. Keep the entire URL structure intact.
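If you would rather generate the file than hand-edit it, the configuration above can be produced programmatically. The following is a minimal sketch, not an official tool: the function name build_tavily_mcp_config and the sample key are hypothetical, and the code only reproduces the JSON structure shown in this step.

```python
import json
from urllib.parse import quote


def build_tavily_mcp_config(api_key: str) -> dict:
    """Return the mcp.json structure from Step 4 with the API key filled in."""
    key = api_key.strip()  # stray spaces are a common copy-paste mistake
    if not key or key == "YOUR_API_KEY_HERE":
        raise ValueError("Pass your real Tavily API key, not the placeholder")
    # Percent-encode the key so it cannot break the URL structure.
    url = "https://mcp.tavily.com/mcp/?tavilyApiKey=" + quote(key, safe="")
    return {
        "mcpServers": {
            "tavily-remote": {
                "command": "npx",
                "args": ["-y", "mcp-remote", url],
            }
        }
    }


if __name__ == "__main__":
    # "tvly-example" is a made-up key used only for illustration.
    print(json.dumps(build_tavily_mcp_config("tvly-example"), indent=2))
```

Paste the printed JSON into the mcp.json editor. Generating it this way mainly guards against typos in the URL and leftover placeholder text.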

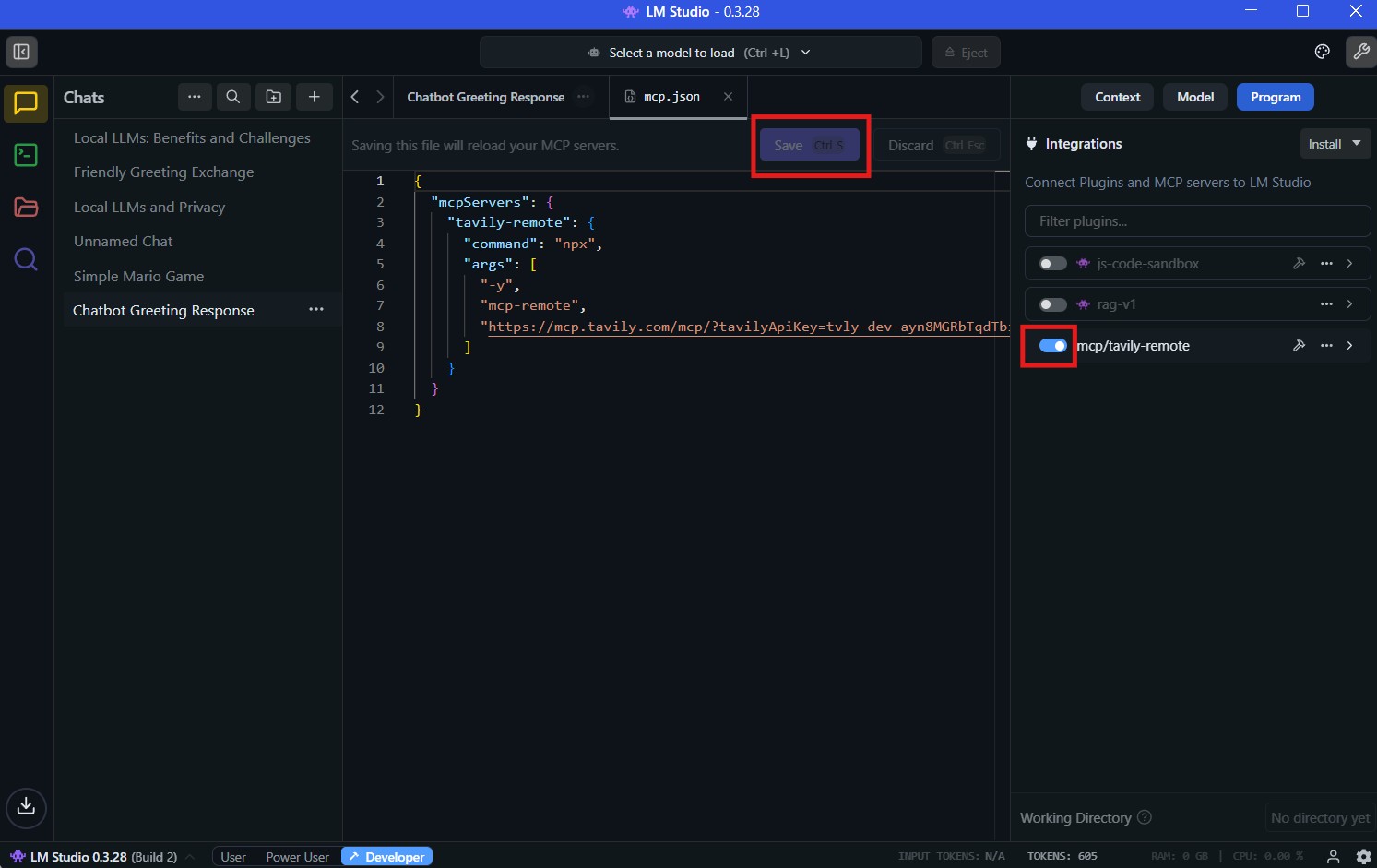

Step 5: Save and Enable

Click "Save" to write your changes to the mcp.json file. Return to the Program tab in settings, where you should now see "mcp/tavily-remote" listed in the available MCP servers.

Toggle the switch to "On" to enable the Tavily MCP server. LM Studio will attempt to launch the server using Node.js.

If you click on the dropdown action button towards the right of the Tavily MCP toggle, you'll see all these tools that the Tavily MCP offers:

- tavily_search: Performs general web searches to find relevant information

- tavily_extract: Extracts specific content and data from web pages

- tavily_crawl: Crawls websites to gather comprehensive data and content

- tavily_map: Maps a website's structure by discovering and listing its URLs

These tools enable sophisticated web research capabilities, allowing your model to access current information, analyze web content, and gather structured data from across the internet.

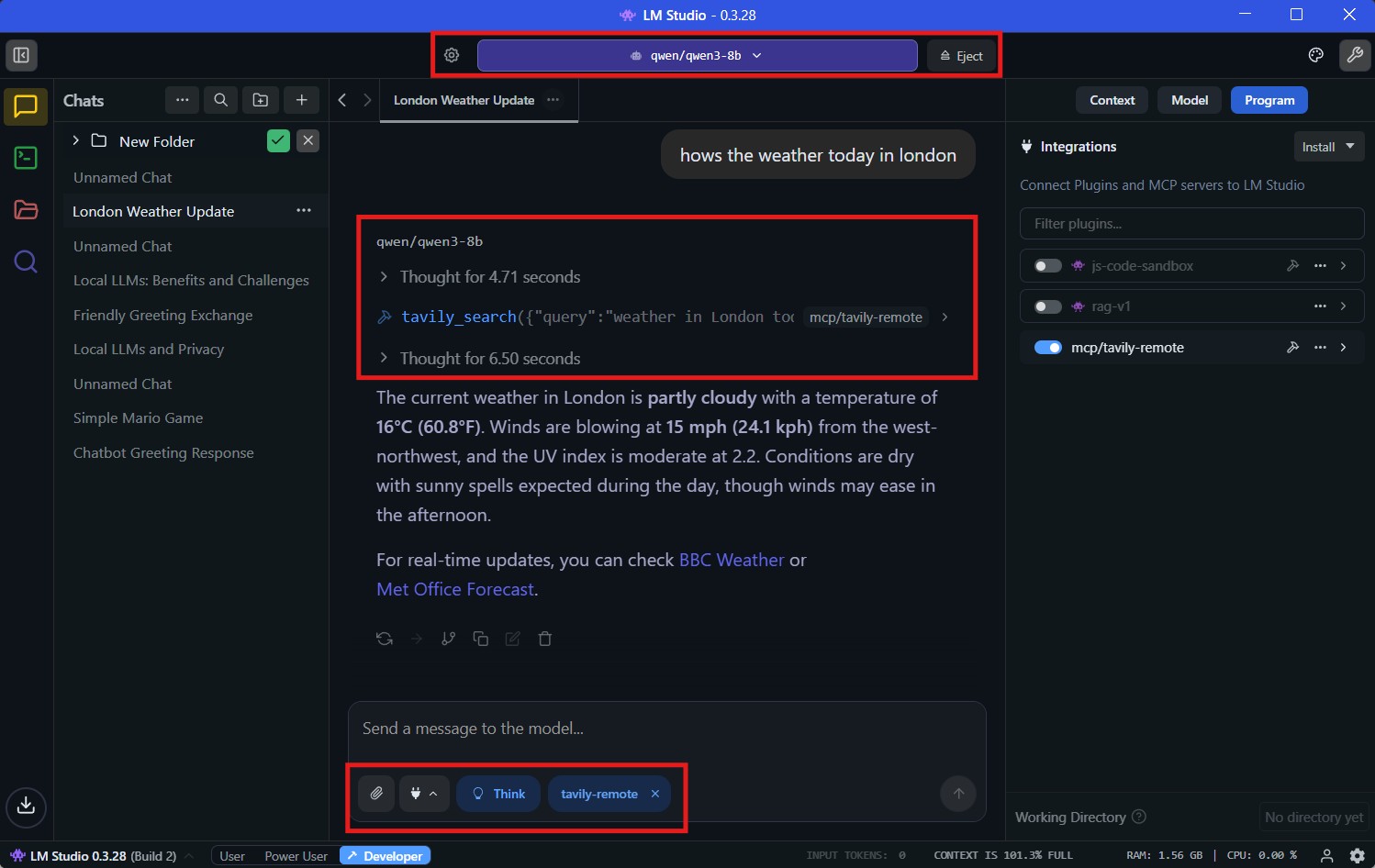

Step 6: Load Your Model and Test

To enable reliable tool use in LM Studio, choose a model explicitly trained for tools. Aim for at least a 7–8B parameter model. Thinking variants tend to handle tool calls more effectively.

Recommended Models

- Pick a tool-trained model: Qwen3 series, OpenAI's gpt-oss 20B, DeepSeek R1, granite-4-h-tiny, or Gemma3 series.

- Verify capabilities: In the model list, look for the tool icon (🔨) and reasoning icon (🧠) next to the model name.

- For systems with 8GB VRAM: Check out our curated list of best local LLMs for 8GB VRAM in 2025.

- Use fresh context: Start a new chat after loading the model to avoid contamination from previous conversations.

In short: select the right model, confirm the tool and reasoning icons, and begin in a clean session.

At the bottom of the chat interface, ensure "tavily-remote" is selected as the active MCP server.

Test with a query that clearly requires current information:

"London weather updates for today"

"Search for information about recent smartphone releases"

"Who won the Nobel Prize in Physics this year?"

The model should automatically trigger web search and provide responses enriched with real-time data.

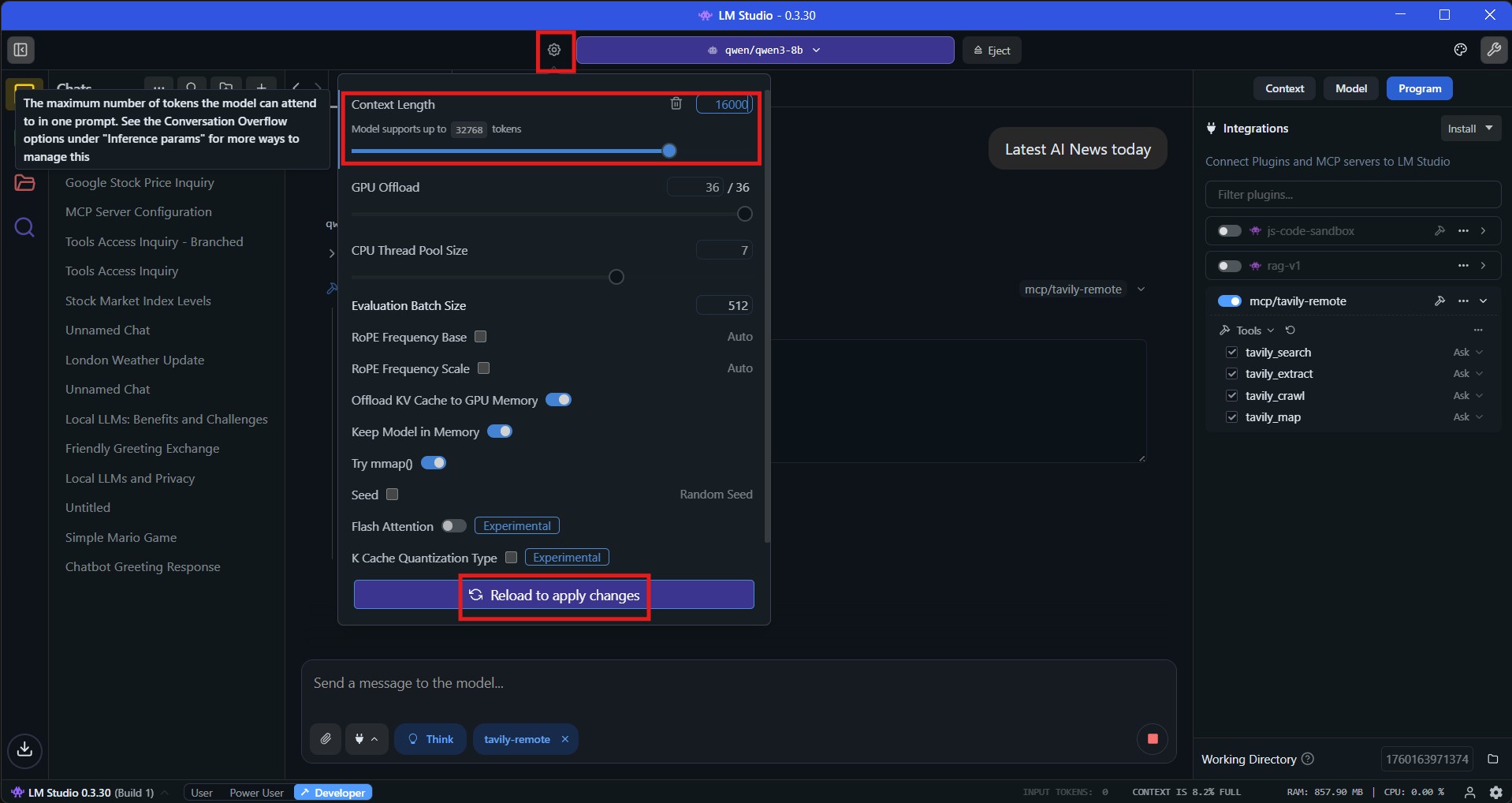

Important Note: By default, LM Studio loads the model with a small context window of about 4k tokens. The model needs a longer context to work properly with MCP tools, especially for complex queries.

How to increase context length in LM Studio?

Click the gear icon next to the model selector and adjust the context length slider. I suggest setting it to at least 16k. 8k context window will also work for basic queries with web search MCPs. However, if you have a GPU with 8 GB of VRAM or lower, the throughput and latency of an 8 billion parameter model such as Qwen 3 will take a hit as you increase the context window. The bottleneck becomes the VRAM capacity: as context length increases, the KV cache grows linearly and consumes more memory.
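To make the linear growth concrete, here is a rough back-of-the-envelope estimate. It is a sketch under stated assumptions: the default layer count, KV head count, head dimension, and 16-bit cache precision are illustrative values for an 8B-class model with grouped-query attention, not figures taken from LM Studio. Check your model's card for the real numbers.

```python
def kv_cache_bytes(context_tokens: int,
                   num_layers: int = 36,      # assumed, illustrative
                   num_kv_heads: int = 8,     # assumed, illustrative
                   head_dim: int = 128,       # assumed, illustrative
                   bytes_per_value: int = 2   # assumes a 16-bit KV cache
                   ) -> int:
    """Estimate KV cache size: one key and one value vector per layer per token."""
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * context_tokens


if __name__ == "__main__":
    for ctx in (4096, 8192, 16384):
        gib = kv_cache_bytes(ctx) / 1024**3
        print(f"{ctx:>6} tokens -> {gib:.2f} GiB of KV cache")
```

With these assumed values the cache costs 144 KiB per token, so a 16k context needs about 2.25 GiB on top of the model weights. Doubling the context doubles that figure, which is why an 8 GB card fills up quickly.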

When VRAM is exhausted, both the KV cache and model layers may be offloaded to system RAM and processed by the CPU, causing severe performance degradation.

Finding the right balance of context window

While a longer context length may slow the model slightly, it allows it to handle much more information from web searches. This matters especially for reasoning models, since reasoning tokens can quickly consume context space. For comprehensive guidance on increasing context length in LM Studio, including multiple methods for different skill levels and detailed troubleshooting, see our complete How to Increase Context Length in LM Studio guide. Use our Interactive VRAM Calculator to find the optimal balance for your specific GPU, model, and context requirements. For detailed VRAM management strategies in LM Studio, check out our comprehensive LM Studio VRAM requirements guide for local LLMs.

LM Studio Tavily MCP Integration (OpenAI Compatible Responses API Endpoints) for Developers

With LM Studio 0.3.29 and later, developers can integrate MCP servers through OpenAI-compatible API endpoints using the /v1/responses API. This approach is perfect for building applications, automation scripts, or integrating LM Studio into existing workflows. Let's learn how to integrate a web search MCP through LM Studio's OpenAI-compatible API: start the LM Studio development server, then make API calls. You can also watch the video for a step-by-step tutorial or keep reading:

Understanding the /v1/responses API

The new responses API brings powerful features to LM Studio:

- Stateful interactions: Pass a previous_response_id to continue conversations without managing message history manually

- Custom function tool calling: Use your own function tools similar to v1/chat/completions

- Remote MCP support: Enable models to call tools from remote MCP servers

- Reasoning support: Parse reasoning output and control effort levels

- Streaming or sync: Choose between real-time SSE events or single JSON responses

Prerequisites for API Integration

Before using the API endpoints, ensure:

- LM Studio 0.3.29+ is installed

- Tavily API key from your dashboard (same as GUI method)

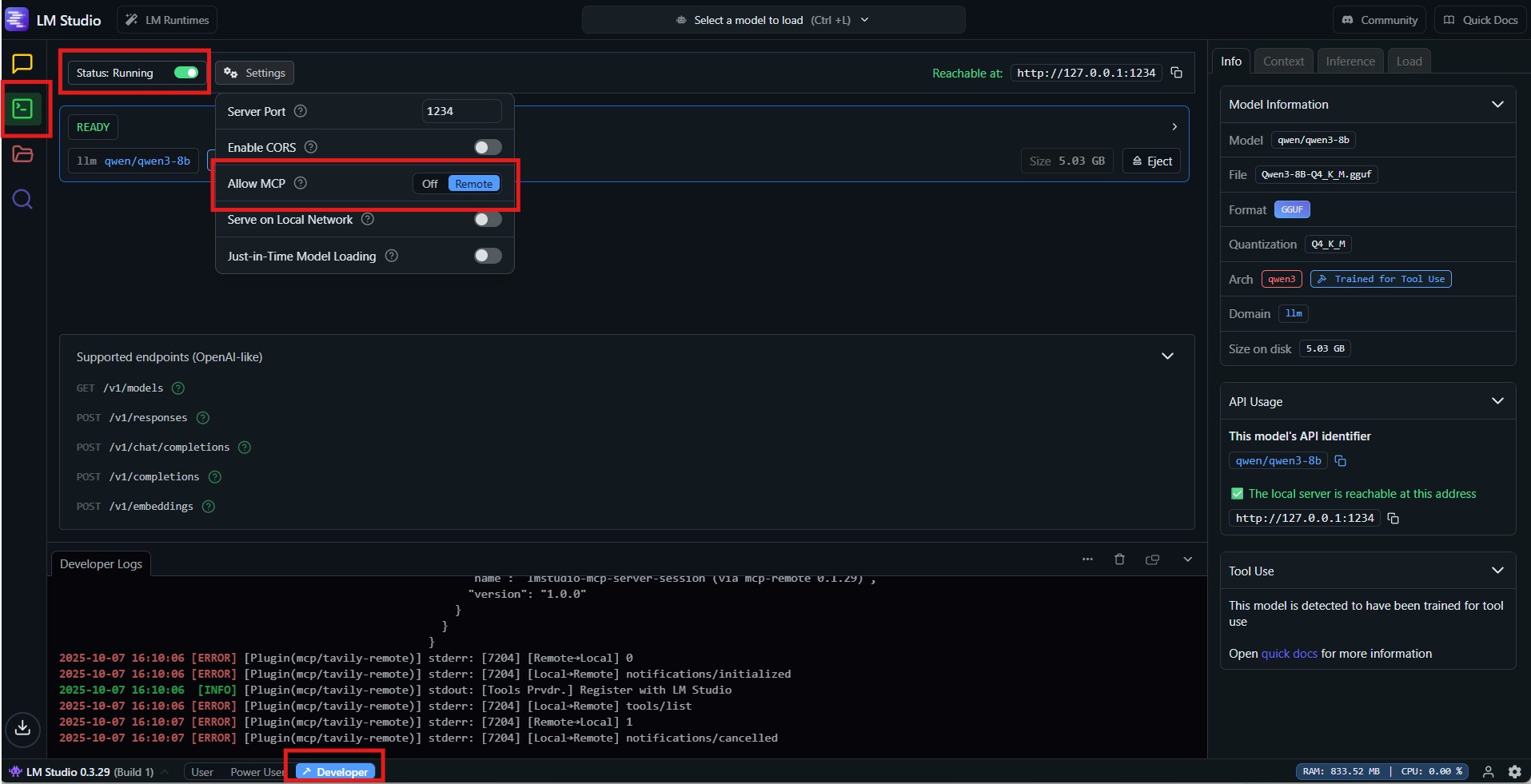

Step 1: Start LM Studio Server and Enable Remote MCP

First, ensure LM Studio is running a local server for API endpoints:

- Go to Developer mode (bottom of interface)

- Navigate to Settings → Server

- Toggle "Status" to "Running" (this starts the server on http://127.0.0.1:1234)

Alternatively, you can start the server from command line:

lms server start

For security reasons, remote MCP servers must be explicitly enabled:

- Open Developer → Settings

- Find the Allow MCP section

- Toggle Remote to "On"

These settings enable the API endpoints and allow connections to external MCP servers like Tavily.

Step 2: Basic API Request with Tavily MCP

Here's a simple example using curl to make a web search request:

    curl http://127.0.0.1:1234/v1/responses \
      -H "Content-Type: application/json" \
      -d '{
        "model": "qwen/qwen3-8b",
        "tools": [{
          "type": "mcp",
          "server_label": "tavily",
          "server_url": "https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_API_KEY_HERE",
          "allowed_tools": ["tavily_search"]
        }],
        "input": "What are the latest developments in AI this week?"
      }'

Important: Replace YOUR_API_KEY_HERE with your actual Tavily API key. The server_label can be any identifier you choose, and allowed_tools specifies which MCP tools the model can access.

Step 3: Stateful Conversations

One of the most powerful features is maintaining conversation context without manually managing message history. This is the bread and butter of building conversational AI applications.

Initial request:

    curl http://127.0.0.1:1234/v1/responses \
      -H "Content-Type: application/json" \
      -d '{
        "model": "qwen/qwen3-8b",
        "tools": [{
          "type": "mcp",
          "server_label": "tavily",
          "server_url": "https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_KEY",
          "allowed_tools": ["tavily_search"]
        }],
        "input": "Search for the current weather in London"
      }'

Response (shortened):

    {
      "id": "resp_abc123",
      "output": [
        {
          "type": "message",
          "content": "The current weather in London is..."
        }
      ]
    }

Continue the conversation by referencing the previous response:

    curl http://127.0.0.1:1234/v1/responses \
      -H "Content-Type: application/json" \
      -d '{
        "model": "qwen/qwen3-8b",
        "input": "How does that compare to Paris?",
        "previous_response_id": "resp_abc123"
      }'

LM Studio automatically maintains context from the previous interaction, including the search results and conversation history.
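The same chaining can be wrapped in a small helper so the application never tracks response IDs by hand. This is a minimal sketch using only the standard library. The names build_payload, post_json, and Conversation are hypothetical, and the code assumes the response carries an "id" field as in the shortened example above.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:1234/v1/responses"


def build_payload(model, user_input, previous_response_id=None, tools=None):
    """Assemble a /v1/responses body; optional fields are left out when unset."""
    payload = {"model": model, "input": user_input}
    if previous_response_id:
        payload["previous_response_id"] = previous_response_id
    if tools:
        payload["tools"] = tools
    return payload


def post_json(url, payload):
    """POST a JSON body and decode the JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


class Conversation:
    """Remembers the last response id so each turn continues the previous one."""

    def __init__(self, model, tools=None, send=post_json):
        self.model = model
        self.tools = tools
        self.send = send  # injectable, so the class can be tried without a server
        self.last_id = None

    def ask(self, user_input):
        payload = build_payload(self.model, user_input, self.last_id, self.tools)
        result = self.send(BASE_URL, payload)
        self.last_id = result.get("id")
        return result
```

One design choice to note: this sketch resends the tool list on every turn so that follow-up questions can still trigger a search, whereas the curl follow-up above omits it. Either form fits the request shape shown in this section.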

Step 4: Streaming Responses

For real-time applications, enable streaming to receive events as they happen:

    curl http://127.0.0.1:1234/v1/responses \
      -H "Content-Type: application/json" \
      -d '{
        "model": "qwen/qwen3-8b",
        "tools": [{
          "type": "mcp",
          "server_label": "tavily",
          "server_url": "https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_KEY",
          "allowed_tools": ["tavily_search"]
        }],
        "input": "Latest tech news today",
        "stream": true
      }'

You'll receive Server-Sent Events (SSE) including:

- response.created: When the response begins

- response.output_text.delta: Chunks of text as generated

- response.completed: When generation finishes
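On the client side, these events arrive as plain text lines that need to be grouped and decoded. The sketch below shows one way to do that. The helper names are hypothetical, and it assumes each delta event carries its text chunk in a "delta" field of the JSON payload, as in OpenAI's streaming format. Verify this against the events your LM Studio version actually emits.

```python
import json


def iter_sse_events(lines):
    """Yield (event_name, payload) pairs from an iterable of SSE text lines."""
    event, data_parts = None, []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("event:"):
            event = line[len("event:"):].strip()
        elif line.startswith("data:"):
            data_parts.append(line[len("data:"):].strip())
        elif line == "":
            # A blank line closes the current event.
            if data_parts:
                try:
                    payload = json.loads("\n".join(data_parts))
                except json.JSONDecodeError:
                    payload = None  # ignore payloads that are not JSON
                if payload is not None:
                    yield event, payload
            event, data_parts = None, []


def collect_text(lines):
    """Concatenate the chunks carried by response.output_text.delta events."""
    chunks = []
    for event, payload in iter_sse_events(lines):
        if event == "response.output_text.delta" and isinstance(payload, dict):
            chunks.append(payload.get("delta", ""))
        elif event == "response.completed":
            break
    return "".join(chunks)
```

Both functions accept any iterable of decoded text lines, for example lines read from a streaming HTTP response. In a real application you would print or forward each chunk as it arrives rather than joining them at the end.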

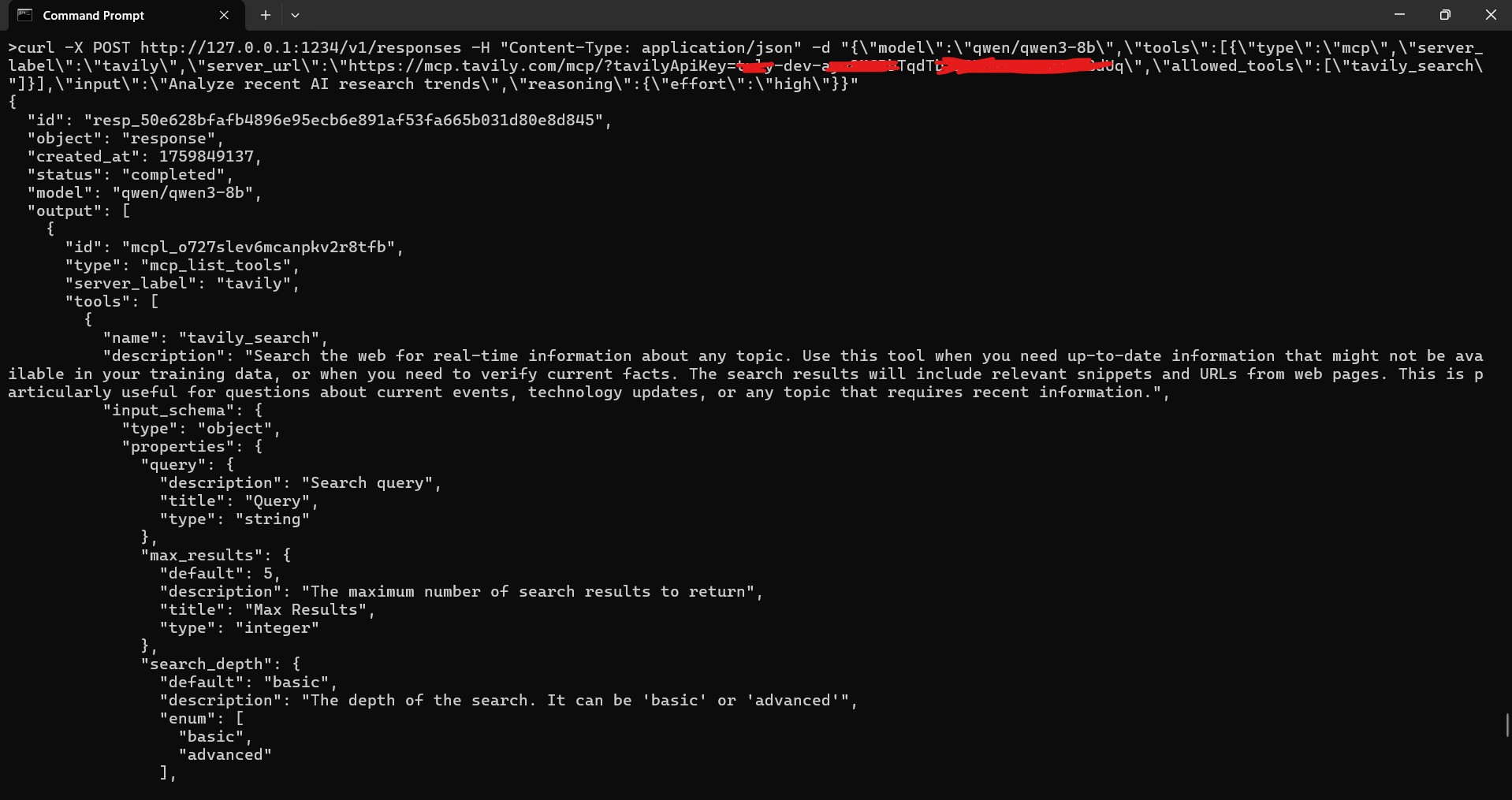

Step 5: Reasoning Support

For models that support reasoning (like qwen/qwen3-8b), you can control the reasoning effort:

    curl http://127.0.0.1:1234/v1/responses \
      -H "Content-Type: application/json" \
      -d '{
        "model": "qwen/qwen3-8b",
        "tools": [{
          "type": "mcp",
          "server_label": "tavily",
          "server_url": "https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_KEY",
          "allowed_tools": ["tavily_search"]
        }],
        "input": "Analyze recent AI research trends",
        "reasoning": {
          "effort": "high"
        }
      }'

Available effort levels: "low", "medium", or "high". Higher effort provides more thorough reasoning at the cost of longer processing time.

Fig: LM Studio API Response - Curl command output showing high-effort reasoning with MCP web search integration via Tavily Search API.

Use the exact command below to reproduce this:

curl -X POST http://127.0.0.1:1234/v1/responses -H "Content-Type: application/json" -d "{\"model\":\"qwen/qwen3-8b\",\"tools\":[{\"type\":\"mcp\",\"server_label\":\"tavily\",\"server_url\":\"https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_KEY\",\"allowed_tools\":[\"tavily_search\"]}],\"input\":\"Analyze recent AI research trends\",\"reasoning\":{\"effort\":\"high\"}}"

To replicate this, adjust the model and key according to your setup.

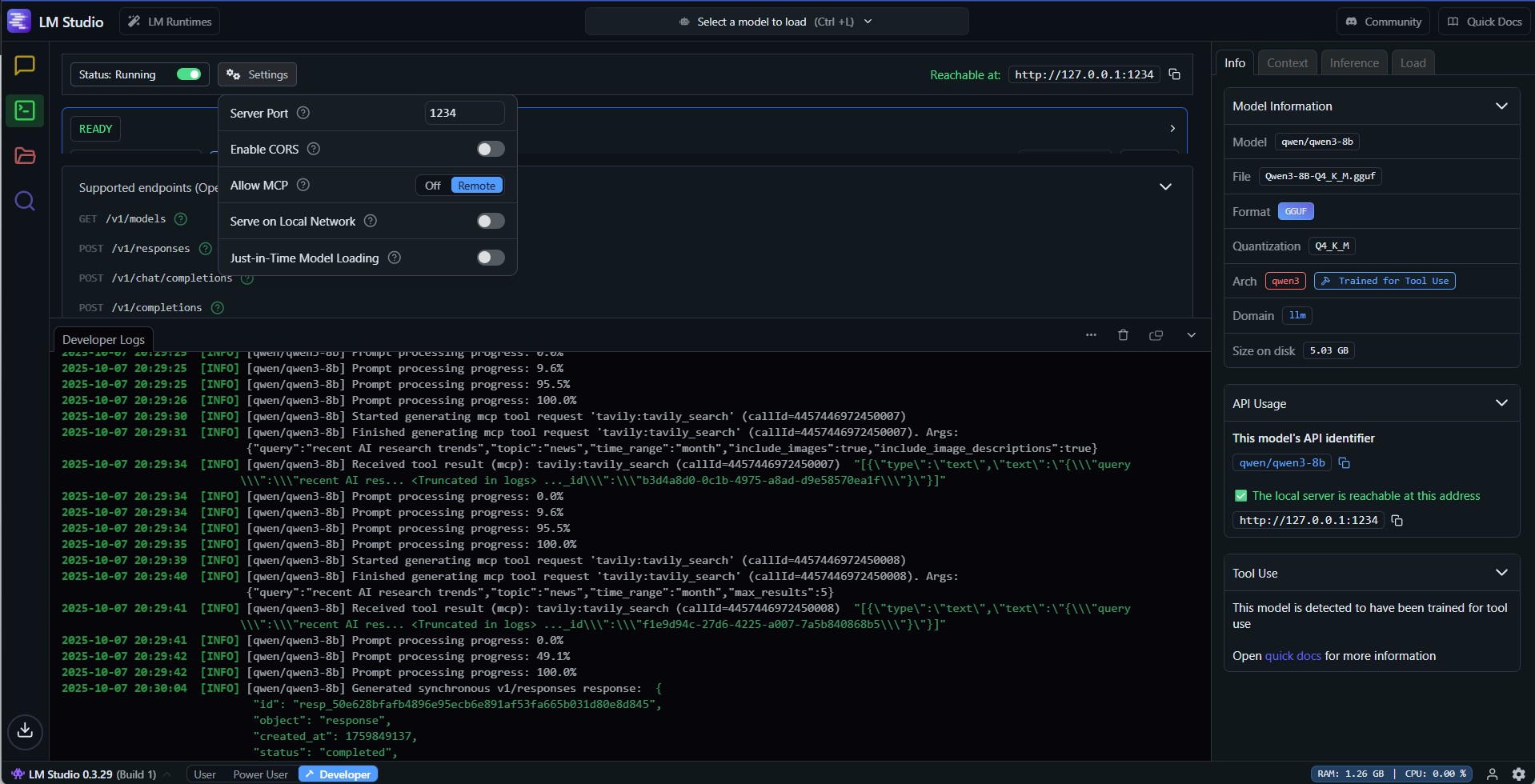

Fig: LM Studio Developer backend logs showing successful MCP server communication, web search execution, and reasoning process during the curl API call for AI research trends analysis.

API Integration Examples

Python example:

    import requests

    url = "http://127.0.0.1:1234/v1/responses"
    headers = {"Content-Type": "application/json"}
    data = {
        "model": "qwen/qwen3-8b",
        "tools": [{
            "type": "mcp",
            "server_label": "tavily",
            "server_url": "https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_KEY",
            "allowed_tools": ["tavily_search"]
        }],
        "input": "Tell me the current Microsoft stock price"
    }

    response = requests.post(url, json=data, headers=headers)
    result = response.json()
    print(result['output'])
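Printing the raw output list is fine for debugging, but most applications only want the answer text. Here is a hedged sketch of a helper for that. The name extract_text is hypothetical, and the exact response shape is an assumption: it accepts both the plain-string "content" shown in the shortened response earlier and a list of parts with a "text" field, as OpenAI's Responses format uses.

```python
def extract_text(result: dict) -> str:
    """Join the text of every "message" item in a /v1/responses result."""
    pieces = []
    for item in result.get("output", []):
        if item.get("type") != "message":
            continue  # skip reasoning, tool-call, and other item types
        content = item.get("content")
        if isinstance(content, str):
            pieces.append(content)
        elif isinstance(content, list):
            for part in content:
                if isinstance(part, dict) and "text" in part:
                    pieces.append(part["text"])
    return "\n".join(pieces)
```

With the example above, you would call print(extract_text(result)) in place of printing result['output'].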

Node.js example:

    const axios = require('axios');

    // Top-level await is not available in CommonJS, so wrap the call in an async function.
    async function main() {
      const response = await axios.post('http://127.0.0.1:1234/v1/responses', {
        model: 'qwen/qwen3-8b',
        tools: [{
          type: 'mcp',
          server_label: 'tavily',
          server_url: 'https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_KEY',
          allowed_tools: ['tavily_search']
        }],
        input: 'Latest sports scores'
      });
      console.log(response.data.output);
    }

    main().catch(console.error);

Important Note: By default, LM Studio loads the model with a small context window of about 4k tokens. The model needs a longer context to work properly with MCPs, especially for complex queries. See Step 6 of the previous section.

API Documentation

For complete API specifications, response schemas, and additional examples, visit the official documentation at https://lmstudio.ai/docs/app/api/endpoints/openai

Brave Search MCP: A Solid Alternative

While Tavily offers the easiest setup, Brave Search MCP provides additional flexibility with higher free tier limits and independent search indexing. Brave Search is particularly appealing for users who want maximum privacy and comprehensive search capabilities.

Brave Search Advantages

- Higher free tier: 2,000 queries per month (vs Tavily's 1,000 credits)

- Independent search index: Over 30 billion pages with no dependency on other search engines

- Privacy-focused: No user profiling or tracking, built by the Brave Browser team

- Multiple search types: Web, local, news, images, and video search capabilities

Brave Search Limitations

- Payment details required: Even for the free tier (though no charges occur)

- Limited content extraction: Provides search snippets rather than full page content

Quick Brave Search Setup (GUI)

For those who prefer Brave Search, here's the configuration approach:

First, obtain your Brave Search API key from https://brave.com/search/api/. Create a free tier account to get your 2,000 monthly queries.

Your mcp.json configuration would look like:

    {
      "mcpServers": {
        "brave-search": {
          "command": "npx",
          "args": [
            "-y",
            "@modelcontextprotocol/server-brave-search"
          ],
          "env": {
            "BRAVE_API_KEY": "your_brave_api_key_here"
          }
        }
      }
    }

Replace your_brave_api_key_here with your actual API key. Because the key is passed through the env section, double-check that it is set correctly.

Brave Search API Integration

Important: Unlike Tavily which provides a remote MCP server, Brave Search uses a subprocess-based MCP server that only works with LM Studio's GUI interface. For API integration, you need to deploy Brave Search as an HTTP/SSE MCP server using Docker.

GUI vs API Access Methods

- GUI: Can directly communicate with local MCP servers configured via command and args (subprocess-based)

- API: Requires MCP servers to be exposed as HTTP/SSE endpoints with a url field

The @modelcontextprotocol/server-brave-search package configured with npx runs as a subprocess that only the GUI can access. The /v1/responses endpoint cannot communicate with subprocess-based MCP servers; it needs an HTTP URL.
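If your mcp.json has grown to several entries, a quick script can show which ones follow which pattern. This is an illustrative sketch that only applies the rule described in this section (a url field versus command and args). The function name split_by_transport is hypothetical.

```python
import json


def split_by_transport(mcp_json_text: str):
    """Return (url_based, subprocess_based) server names from mcp.json text."""
    servers = json.loads(mcp_json_text).get("mcpServers", {})
    # Entries with a "url" field are reachable over HTTP/SSE.
    url_based = sorted(name for name, cfg in servers.items() if "url" in cfg)
    # Entries with only "command"/"args" are launched as local subprocesses.
    subprocess_based = sorted(
        name for name, cfg in servers.items()
        if "command" in cfg and "url" not in cfg
    )
    return url_based, subprocess_based
```

Servers in the second list are the ones that, per the distinction above, would need an HTTP/SSE deployment before the API can reach them.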

Solution: HTTP/SSE Brave Search MCP Server

Here's how to deploy Brave Search as an HTTP server that both GUI and API can access:

Step 1: Run Brave Search MCP as HTTP Server

    docker run -d --rm \
      -p 8080:8080 \
      -e BRAVE_API_KEY="YOUR_API_KEY_HERE" \
      -e PORT="8080" \
      --name brave-search-server \
      shoofio/brave-search-mcp-sse:latest

Step 2: Update mcp.json Configuration

Replace the existing brave-search configuration with:

    {
      "mcpServers": {
        "brave-search": {
          "url": "http://localhost:8080/sse"
        }
      }
    }

Remove the old command and args fields completely.

Step 3: Restart LM Studio

Restart LM Studio to load the new configuration.

Step 4: Test the API Call

    curl -X POST http://127.0.0.1:1234/v1/responses \
      -H "Content-Type: application/json" \
      -d '{
        "model": "qwen/qwen3-8b",
        "tools": [{
          "type": "mcp",
          "server_label": "brave-search",
          "allowed_tools": ["brave_web_search"]
        }],
        "input": "Analyze recent AI research trends",
        "reasoning": {
          "effort": "high"
        }
      }'

Why This Works

- SSE Transport: The Docker container exposes Brave Search as an HTTP server using Server-Sent Events (SSE)

- URL Field: Adding the url field tells LM Studio's API where to connect

- Remote Mode: The "Remote" setting in LM Studio allows connections to HTTP-based MCP servers

Both the GUI chat interface and API endpoints can now access the same Brave Search server at http://localhost:8080/sse.

Alternative Method: Manual Installation

For users who prefer building from source or need more customization control, you can install and run the Brave Search MCP server manually:

Prerequisites

- Node.js 18+ and npm installed

- Git

- TypeScript compiler (if building from source)

Step 1: Clone and Setup the Repository

git clone <repository_url> # Replace with the actual repository URL (likely https://github.com/shoofio/brave-search-mcp-sse or similar)

cd brave-search-mcp-sse

Step 2: Install Dependencies

npm install

Step 3: Configure Environment Variables

Create a .env file in the root directory:

BRAVE_API_KEY=YOUR_API_KEY_HERE

PORT=8080

# LOG_LEVEL=debug # Optional: for debugging

Step 4: Build the Project

npm run build

Step 5: Start the Server

npm start

Step 6: Update mcp.json Configuration

After the server is running, update your mcp.json configuration the same way as the Docker method:

    {
      "mcpServers": {
        "brave-search": {
          "url": "http://localhost:8080/sse"
        }
      }
    }

Step 7: Restart LM Studio

Restart LM Studio to load the new configuration.

Development Option

For development with auto-reloading, if the package.json includes development scripts:

npm run dev

This manual installation gives you full control over the server code and allows for easier debugging and customization compared to the Docker approach.

Troubleshooting Common Issues

Based on community reports and our testing, here are the most frequent problems and their solutions:

MCP Server Won't Start

Symptoms: Server shows as gray/inactive in LM Studio settings

Solutions:

- Verify Node.js installation: Run node --version and npm --version in terminal

- Check Power User/Developer mode: MCP configuration only appears in these modes

- Validate API key formatting: Ensure no extra spaces or characters in your mcp.json

- Test server manually: Run the command from mcp.json in terminal to check for errors
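Several of these checks can be scripted. The sketch below is an assumption-laden helper, not part of LM Studio: the function names are hypothetical, and the placeholder strings it looks for are simply the ones used in this tutorial. It flags invalid JSON, missing fields, unreplaced placeholders, and stray whitespace, and reports which Node.js tools are missing from PATH.

```python
import json
import shutil

# Placeholder strings used in this tutorial's examples.
PLACEHOLDERS = ("your_api_key_here", "your_brave_api_key_here", "your_key")


def find_mcp_config_problems(mcp_json_text: str) -> list:
    """Return human-readable problems found in mcp.json text (empty list = none found)."""
    try:
        config = json.loads(mcp_json_text)
    except json.JSONDecodeError as exc:
        return [f"Invalid JSON: {exc.msg} (line {exc.lineno})"]
    servers = config.get("mcpServers") if isinstance(config, dict) else None
    if not isinstance(servers, dict) or not servers:
        return ['Missing or empty "mcpServers" object']
    problems = []
    for name, cfg in servers.items():
        if "command" not in cfg and "url" not in cfg:
            problems.append(f'{name}: needs a "command" or a "url"')
        values = list(cfg.get("args", [])) + list(cfg.get("env", {}).values())
        if "url" in cfg:
            values.append(cfg["url"])
        for value in values:
            text = str(value)
            if any(p in text.lower() for p in PLACEHOLDERS):
                problems.append(f"{name}: placeholder API key was not replaced")
            if any(ch.isspace() for ch in text):
                problems.append(f"{name}: unexpected whitespace in {text!r}")
    return problems


def missing_node_tools() -> list:
    """List which of node, npm, and npx cannot be found on PATH."""
    return [tool for tool in ("node", "npm", "npx") if shutil.which(tool) is None]
```

Run find_mcp_config_problems on the contents of your mcp.json and missing_node_tools() with no arguments. An empty list from both means these particular causes can be ruled out.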

Model Not Using Search Function

Symptoms: Model responds from training data instead of triggering web search

Solutions:

- Confirm function calling support: Look for tool/function icon next to model name

- Check MCP server selection: Ensure correct server selected at the bottom of chat (GUI) or properly configured in API request

- Phrase queries clearly: Use explicit questions requiring current info ("What is today's date?")

- Select a good model: Smaller models might struggle with using the MCP

API-Specific Issues

Symptoms: API requests return errors or don't use MCP tools

Solutions:

- Verify server is running: Check LM Studio Developer → Server status

- Enable remote MCP: Must be toggled on in Developer → Settings

- Check port availability: Default is 1234, ensure it's not blocked

- Validate JSON syntax: Malformed requests will fail silently

- Review allowed_tools: Ensure tool names match exactly
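Before digging into request details, it helps to confirm that something is actually listening on the server port. This minimal sketch uses only the standard library. The function name server_is_listening is hypothetical, and the defaults assume the standard address from this guide (127.0.0.1:1234).

```python
import socket


def server_is_listening(host: str = "127.0.0.1", port: int = 1234,
                        timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    state = "reachable" if server_is_listening() else "not reachable"
    print(f"LM Studio server on 127.0.0.1:1234 is {state}")
```

A successful connection only proves the port is open. It does not confirm that remote MCP is enabled or that the request body is valid, so keep working through the list above if requests still fail.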

Timeout Errors

Symptoms: Search requests fail with timeout messages

Solutions:

- Check internet connectivity: MCP servers require external API access

- Verify API key validity: Expired or incorrect keys cause connection failures

- Monitor rate limits: Free tiers have monthly limits - check your usage

Search Results Not Appearing

Symptoms: Model "thinks" about searching but doesn't return results

Solutions:

- Enable one MCP server only: Multiple active servers can cause conflicts

- Restart after configuration: MCP changes require LM Studio restart

- Test with simple queries: Start with simple prompts like "What's the weather today in New York" to verify functionality

Performance Optimization Tips

Maximize your MCP web search experience with these optimization strategies:

Query Optimization Strategies

- Be specific: "Current Microsoft stock price" works better than "Microsoft stock info"

- Trigger intent: Use words like "latest," "currently," "recent" to encourage searches

- Combined queries: Request multiple related searches in one prompt for efficiency

Rate Limit Management

- Tavily: 1,000 credits/month = ~33 searches/day (free tier)

- Brave: 2,000 queries/month = ~67 searches/day (free tier)

- Monitor usage: Set up alerts when approaching limits

- Batch queries: Combine multiple questions in single conversations when possible
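The per-day figures above are simple division, and the same arithmetic can tell you how much budget is left partway through a month. A small sketch with hypothetical function names. It assumes a 30-day month and that each search costs one credit or query, although providers may count some request types as more than one.

```python
def daily_search_budget(monthly_quota: int, days_in_month: int = 30) -> int:
    """Spread a monthly quota evenly across the month, rounded to whole searches."""
    return round(monthly_quota / days_in_month)


def remaining_per_day(monthly_quota: int, used_so_far: int, days_left: int) -> float:
    """Searches per day you can still afford without exceeding the quota."""
    if days_left <= 0:
        return 0.0
    return max(monthly_quota - used_so_far, 0) / days_left


if __name__ == "__main__":
    print("Tavily free tier:", daily_search_budget(1000), "searches/day")
    print("Brave free tier:", daily_search_budget(2000), "searches/day")
```

For example, remaining_per_day(1000, 400, 10) tells you how many searches per day you can still run if 400 credits are spent and 10 days remain.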

Hardware Considerations

- Context window impact: Larger windows can accommodate more search results

- Memory management: Search results consume context, balance with conversation history

- For the best GPUs for LLM inference in 2025, see our detailed GPU guide for local LLM inference in 2025.

Advanced MCP Web Search Options

Beyond Tavily and Brave Search, the MCP ecosystem offers several other web search solutions for specialized use cases:

Self-Hosted Options

Web Search MCP Server: A TypeScript-based solution supporting multiple search engines with full configurability.

    {
      "mcpServers": {
        "web-search": {
          "command": "npx",
          "args": [
            "-y",
            "@modelcontextprotocol/server-web-search"
          ],
          "env": {
            "SEARCH_API_KEY": "your_api_key"
          }
        }
      }
    }

mcp-server-fetch: Lightweight approach for URL-based content extraction and data retrieval.

Enterprise Solutions

Perplexity MCP: Combines professional search with AI summarization for research-intensive applications.

Custom implementations: Build your own MCP server connecting to specialized databases, internal wikis, or proprietary search systems.

Multiple MCP Server Configuration

Run different servers for different purposes:

    {
      "mcpServers": {
        "tavily-search": {
          "command": "npx",
          "args": ["-y", "mcp-remote", "https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_KEY"]
        },
        "brave-news": {
          "command": "npx",
          "args": ["-y", "@modelcontextprotocol/server-brave-search"],
          "env": {"BRAVE_API_KEY": "YOUR_KEY"}
        },
        "local-db": {
          "command": "npx",
          "args": ["-y", "@your-org/custom-mcp-server"]
        }
      }
    }

Conclusion

Setting up web search in LM Studio through MCP servers transforms your local LLM from a static knowledge base into a dynamic research assistant. Whether you're a beginner using the GUI or a developer integrating via API, the process becomes straightforward with the right configuration, maintaining complete privacy and control over your AI interactions.

Key Takeaways

- For beginners: The GUI method with Tavily offers the easiest setup with excellent free tier benefits and no payment details required

- For developers: The /v1/responses API provides powerful stateful interactions, streaming, and programmatic integration capabilities

- Alternative option: Brave Search provides more queries and independent indexing for users prioritizing privacy

- Critical requirement: Always verify your model's function calling support before troubleshooting search issues

Start with the method that suits your needs: the GUI for quick experimentation or the API for production applications. Either way, you'll be amazed at how much more capable your local LLM becomes with real-time web access!

Ready to Get Started?

Download LM Studio 0.3.29+, create your Tavily account, and follow the appropriate tutorial above. Within minutes, you'll have transformed your local AI assistant into a research powerhouse with either GUI-based interactions or programmatic API control.

FAQ

Do I need to install anything special to use MCP in LM Studio?

You need LM Studio version 0.3.17 or higher (0.3.29+ for API features), Node.js installed on your system, and a model that supports function calling/tool use. Power User or Developer mode must be enabled to access MCP configuration.

What's the difference between Tavily and Brave Search MCP options?

Tavily offers easier setup (no payment details required) with 1,000 credits/month and specialized search capabilities. Brave Search provides 2,000 queries/month and independent indexing but requires payment information.

Are MCP tools safe to use?

While MCPs are powerful, they can potentially access external services. LM Studio warns users and requires explicit acknowledgment. Stick to reputable providers like Tavily and Brave Search, and review what services you're connecting to.

Should I use the GUI method or the API method?

Use the GUI method if you're just getting started or want to test web search interactively in the LM Studio chat interface. Choose the API method if you're building applications, automation scripts, or need programmatic control over MCP interactions.

Why isn't my model using the web search function in LM Studio?

Ensure your model supports function calling (look for the tool icon in LM Studio), the MCP server is enabled and selected at the bottom of chats (GUI) or properly configured in your API request, and your queries clearly require current information. Some models need specific prompting to trigger tool use.

What local LLM models work best with MCP web search?

Qwen3 and DeepSeek R1 series excel at function calling, gpt-oss provides strong tool use capabilities, and Mistral models offer a good performance balance. Pick models with at least 8B parameters. Generally, models released in 2024 or later with function calling support work well with MCP.

Can I use multiple MCP servers simultaneously?

Yes, you can configure multiple MCP servers in your mcp.json file. In the GUI, LM Studio will show them all in the interface, but you should select only the one you need for each conversation to avoid confusion. With the API, you can specify multiple tools in a single request.

What's the benefit of stateful interactions in the API?

Stateful interactions allow you to continue conversations by referencing a previous_response_id without manually managing the entire message history. This significantly simplifies building conversational applications and reduces the amount of data you need to send with each request.