How to Run a Local LLM on Windows in 2025

Want ChatGPT-style AI that runs entirely on your PC? Private, fast, and available offline? If you're new to this, start with our guide explaining what a local LLM is. This practical guide walks you through the best options for Windows (from one-click apps to power-user toolchains), the hardware you really need, and the exact commands to get going.

TL;DR: Three easy paths

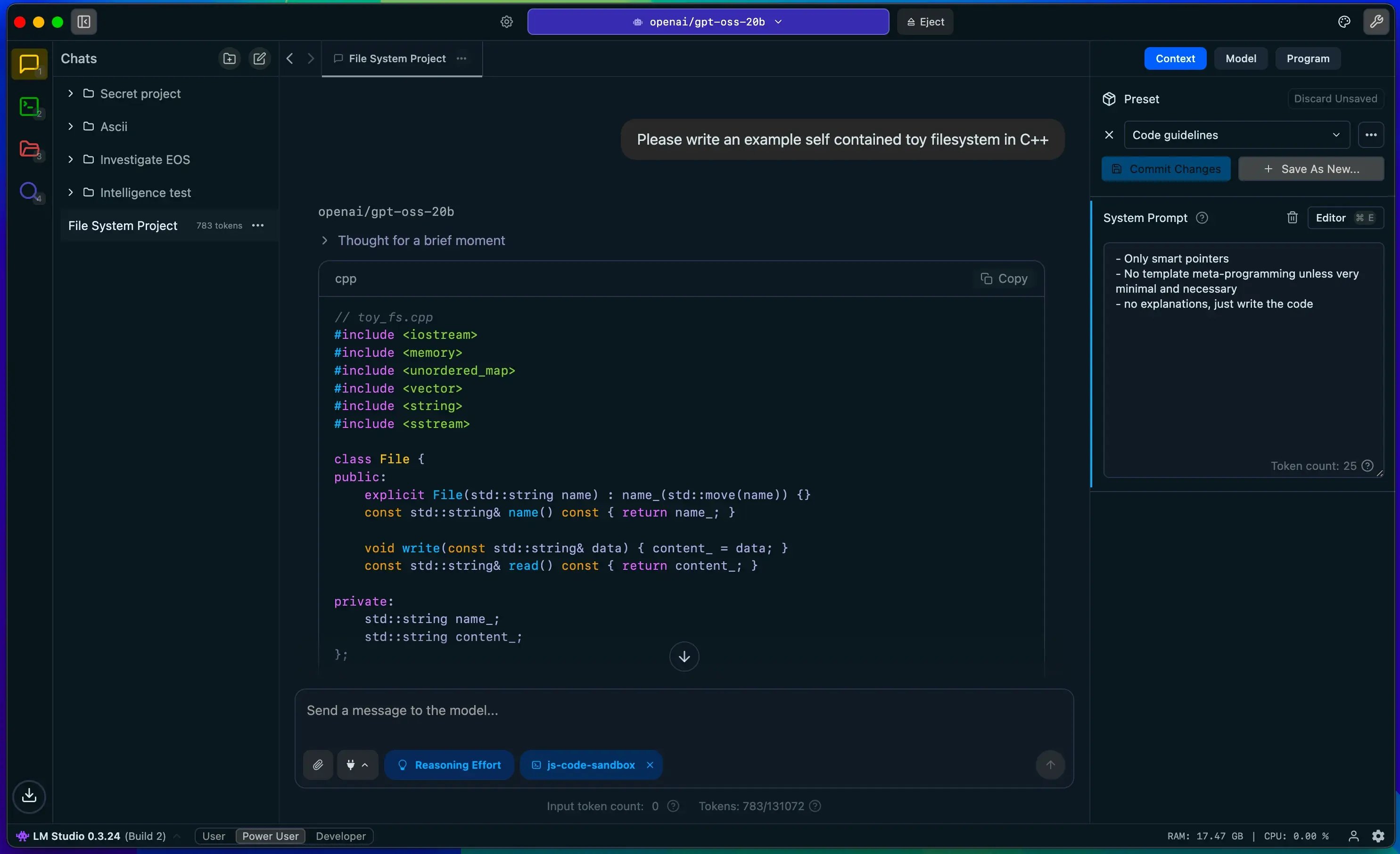

- LM Studio (easiest GUI): Download the Windows app, pick a model, click Run. Great if you want zero terminal commands and a polished chat UI—plus an optional local API for your own apps.

- Ollama (simple CLI + API): Install once, then run:

ollama run llama3.1

You can chat in your terminal or through the OpenAI-compatible local server at http://localhost:11434. Add Open WebUI for a nice browser UI.

- llama.cpp (advanced & ultra-portable): Use the ultra-lean C/C++ runtime for blazing-fast inference with GGUF models; perfect for tinkerers and fine-grained control. Pair with Text-Generation-WebUI or Open WebUI for a front-end.

What you’ll need (hardware & OS)

- Windows 11 or Windows 10 (recent builds).

- GPU VRAM matters most. Local LLMs are memory-hungry; enough VRAM prevents spillover into slower system RAM. A helpful rule of thumb: target ~1.2× the model’s on-disk size in available VRAM for smooth operation. If you only have 8–12 GB VRAM, choose 3B–7B models or use more aggressive quantization.

- No GPU? You can still run smaller models on CPU; it’s just slower. LM Studio and Ollama both support CPU fallback.
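The 1.2× rule of thumb is easy to turn into a quick check. This is a minimal Python sketch of that heuristic only: the 1.2 multiplier comes from the rule above, and the function names are hypothetical helpers, not part of any tool.

```python
# Heuristic from this guide: budget about 1.2x the model's on-disk size in VRAM.
# Context length and runtime overhead vary, so treat the result as a rough guide.
HEADROOM = 1.2


def required_vram_gb(model_file_gb: float, headroom: float = HEADROOM) -> float:
    """Estimate the VRAM (GB) needed to run a model file without spilling to system RAM."""
    if model_file_gb <= 0:
        raise ValueError("model_file_gb must be positive")
    return model_file_gb * headroom


def fits_in_vram(model_file_gb: float, vram_gb: float, headroom: float = HEADROOM) -> bool:
    """True if the rule of thumb says the model fits in vram_gb of VRAM."""
    return required_vram_gb(model_file_gb, headroom) <= vram_gb


if __name__ == "__main__":
    model_gb = 9.0  # hypothetical GGUF file size
    for vram in (8, 12):
        need = required_vram_gb(model_gb)
        print(f"{vram} GB card: needs ~{need:.1f} GB, fits={fits_in_vram(model_gb, vram)}")
```

For a hypothetical 9 GB model file, the check asks for about 10.8 GB, so it passes on a 12 GB card but not on an 8 GB one.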

Quick picks by VRAM

- 8–12 GB: Qwen-2.5 3B/7B, Phi-4-Mini, Llama-3.x 1B–3B, Gemma-2 2B–9B (quantized)

- 16–24 GB: Llama-3.1 8B, Mistral-7B, Qwen-2.5 14B (quantized)

- 24 GB+: Larger 14B–32B models and generous context windows (still consider quantized builds)

For detailed GPU performance analysis and buying recommendations, check out our complete GPU guide for local LLM inference.

Option 1 - LM Studio (best “download & go” experience)

Why choose it: You get a clean Windows installer, a curated model browser (pulls from Hugging Face), a chat UI, prompt templates, and an optional local server that mimics the OpenAI API for your own apps.

Steps:

- Download and install LM Studio for Windows.

- Open Discover, search for a model (e.g., “llama”, “qwen”, “gemma”, “phi”), and click Download.

- Click Run. You can chat immediately, or enable the built-in local server (an OpenAI-compatible API) if you want to call the model from scripts.
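Once LM Studio's local server is enabled, any HTTP client can talk to it with OpenAI-style requests. This minimal standard-library Python sketch assumes the server is listening at http://localhost:1234/v1 (LM Studio's usual default; confirm the address in the app) and that you replace the placeholder "your-model-id" with the identifier LM Studio shows for your loaded model.

```python
import json
import urllib.request

# Assumption: LM Studio's server is enabled at its usual default address.
# Check the server settings in LM Studio if yours differs.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but don't send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    try:
        # "your-model-id" is a placeholder for the model identifier LM Studio displays.
        print(chat("your-model-id", "Explain what a GGUF file is in one sentence."))
    except OSError as exc:  # URLError and timeouts are OSError subclasses
        print(f"LM Studio server not reachable: {exc}")
```

Because the request is OpenAI-style, an OpenAI client library pointed at the same base URL should generally work too; the standard library is used here only to keep the sketch dependency-free.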

Tips:

- Start with a 3B–7B Instruct model for general chat.

- If responses are slow, try a smaller or more quantized build (e.g., Q4_0/Q4_K).

- Check LM Studio’s docs for system requirements and API usage.
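To see why a more quantized build helps, you can estimate file size from parameter count and bits per weight. This sketch assumes the block layouts of llama.cpp's Q4_0 and Q8_0 types (32 weights plus one 16-bit scale per block, so 4.5 and 8.5 bits per weight). K-quant variants such as Q4_K mix precisions and are left out, and real GGUF files come out somewhat larger because some tensors are usually kept at higher precision.

```python
# Approximate bits per weight. Q4_0 and Q8_0 store blocks of 32 weights
# plus one 16-bit scale: (32*4 + 16) / 32 = 4.5 and (32*8 + 16) / 32 = 8.5.
BITS_PER_WEIGHT = {"F16": 16.0, "Q8_0": 8.5, "Q4_0": 4.5}


def estimate_size_gb(n_params: float, quant: str) -> float:
    """Rough on-disk size in GB (10**9 bytes) for a model with n_params weights."""
    bpw = BITS_PER_WEIGHT[quant]
    return n_params * bpw / 8 / 1e9


if __name__ == "__main__":
    for q in ("F16", "Q8_0", "Q4_0"):
        print(f"8B model, {q}: ~{estimate_size_gb(8e9, q):.1f} GB")
```

For an 8B-parameter model this gives roughly 16 GB at F16, 8.5 GB at Q8_0 and 4.5 GB at Q4_0, before the extra overhead noted above.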

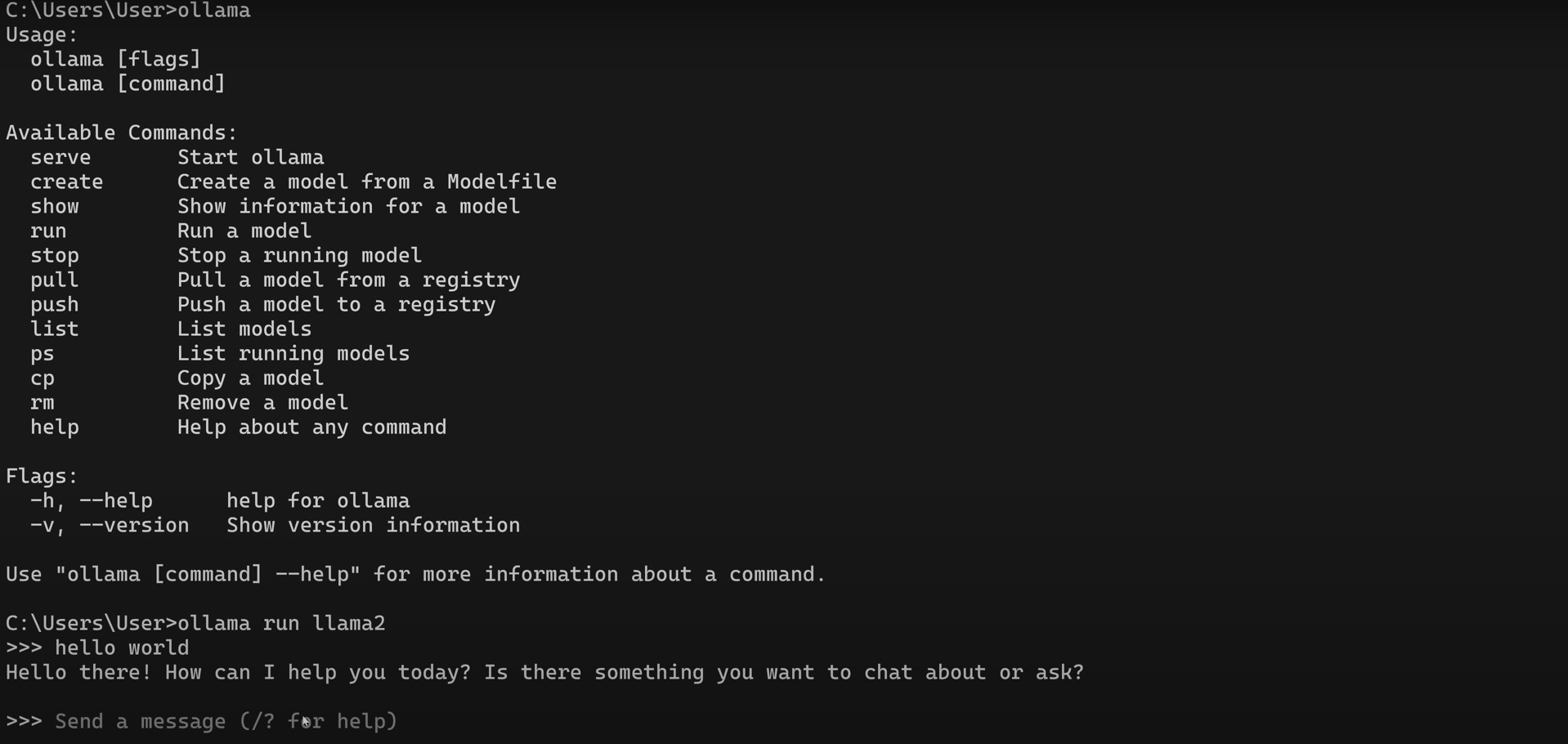

Option 2 - Ollama (simple CLI + REST API, great with web UIs)

Why choose it: Lightweight install, dead-simple commands, runs a local server at localhost:11434, and integrates nicely with community UIs like Open WebUI. For a deeper dive into other tools, check out our complete guide to Ollama alternatives.

Steps:

- Install Ollama for Windows (no admin required; it installs in your home folder).

- Open Terminal (or PowerShell) and run a model, e.g.:

ollama run llama3.1

You’ll get an interactive chat. Swap llama3.1 for other models (e.g., mistral, qwen2.5, gemma2, phi4-mini). See the model pages in the Ollama Library to browse options and tags.

- Use Ollama’s HTTP API at http://localhost:11434 from your apps, or connect a UI (next section).
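By default, Ollama's /api/chat endpoint streams its answer as newline-delimited JSON: one object per line, each carrying a partial message, with "done": true on the last one. This minimal standard-library Python sketch reads that stream; it assumes Ollama is running at the default http://localhost:11434 and that llama3.1 has already been pulled.

```python
import json
import urllib.request
from typing import Iterable, Iterator

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def iter_chat_chunks(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text pieces from a streaming /api/chat response.

    Each non-empty line is one JSON object carrying a partial "message";
    the final object has "done": true.
    """
    for raw in lines:
        if not raw.strip():
            continue  # skip blank lines defensively
        chunk = json.loads(raw)
        yield chunk.get("message", {}).get("content", "")
        if chunk.get("done"):
            break


def stream_chat(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one user message, print the reply as it arrives, and return the full text."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    parts = []
    with urllib.request.urlopen(req, timeout=300) as resp:
        for piece in iter_chat_chunks(resp):  # the response object iterates line by line
            print(piece, end="", flush=True)
            parts.append(piece)
    print()
    return "".join(parts)


if __name__ == "__main__":
    try:
        stream_chat("llama3.1", "Why is the sky blue? Answer in two sentences.")
    except OSError as exc:
        print(f"Ollama server not reachable: {exc}")
```

Splitting the line parser from the network call keeps the parsing logic testable without a running server; set "stream": false in the payload if you'd rather receive a single JSON object.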

Housekeeping: By default, models live under your user profile. If space is tight, the Windows docs explain changing binary/model locations.
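Ollama reads the OLLAMA_MODELS environment variable to decide where models are stored; when it is unset, they go under .ollama\models in your user profile. This sketch resolves that directory and totals its size so you can see how much space your models take. The helper names are hypothetical, and Ollama needs a restart after you change the variable.

```python
import os
from pathlib import Path


def ollama_models_dir(env=None) -> Path:
    """Return OLLAMA_MODELS if set, else the default ~/.ollama/models."""
    env = os.environ if env is None else env
    custom = env.get("OLLAMA_MODELS")
    if custom:
        return Path(custom)
    return Path.home() / ".ollama" / "models"


def dir_size_gb(path: Path) -> float:
    """Total size of all files under path, in GB (10**9 bytes); 0 if it doesn't exist."""
    if not path.exists():
        return 0.0
    return sum(f.stat().st_size for f in path.rglob("*") if f.is_file()) / 1e9


if __name__ == "__main__":
    d = ollama_models_dir()
    print(f"Models directory: {d} ({dir_size_gb(d):.1f} GB)")
```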

Add a friendly UI: Open WebUI (works with Ollama)

If you prefer a browser UI with chats, histories, prompts and tools, Open WebUI is a popular choice and now installs cleanly via pip:

pip install open-webui

open-webui serve

Open WebUI then runs at http://localhost:8080 in your browser; point it at your running Ollama server and you’re set. The docs recommend Python 3.11 and show the exact commands.
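Before running open-webui serve, a quick preflight can catch the two most common snags: the wrong Python version and an Ollama server that isn't running. This is a hypothetical helper script, not part of Open WebUI; it checks for Python 3.11 (per the docs' recommendation) and queries Ollama's /api/tags endpoint, which lists locally pulled models.

```python
import json
import sys
import urllib.request


def python_ok(version_info=sys.version_info) -> bool:
    """Open WebUI's docs recommend Python 3.11 for the pip install."""
    return tuple(version_info[:2]) == (3, 11)


def list_ollama_models(base_url: str = "http://localhost:11434", timeout: float = 5.0) -> list[str]:
    """Return the names of locally pulled models from Ollama's /api/tags endpoint."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        data = json.load(resp)
    return [m["name"] for m in data.get("models", [])]


if __name__ == "__main__":
    current = ".".join(map(str, sys.version_info[:3]))
    print(f"Python {current}:", "OK" if python_ok() else "Open WebUI docs recommend 3.11")
    try:
        models = list_ollama_models()
        print("Ollama models:", ", ".join(models) or "(none pulled yet)")
    except OSError as exc:
        print(f"Ollama not reachable; start it before 'open-webui serve': {exc}")
```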

Option 3 - llama.cpp (power-user route + ultimate portability)

Why choose it: Maximum control and minimal overhead. llama.cpp runs quantized GGUF models efficiently on Windows. You can use it via CLI or through front-ends like Text-Generation-WebUI or Open WebUI.

Key points & steps:

- Use GGUF models. llama.cpp requires models in the GGUF format (you can download GGUFs directly or convert from other formats if needed).

- Download a compatible model (from Hugging Face, etc.) and run with llama.cpp’s CLI tools; the README shows command examples and how to auto-download from hubs.

- Prefer a GUI? Text-Generation-WebUI offers a portable Windows build (download, unzip, run) and supports GGUF models.
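If you're unsure whether a downloaded file really is GGUF, its first bytes tell you. Per the GGUF specification, a file starts with the ASCII magic GGUF followed by little-endian header fields: a uint32 version and, from version 2 onward, uint64 tensor and metadata key/value counts. This Python sketch reads only that fixed header and does not validate the rest of the file; check_gguf.py is a hypothetical script name.

```python
import struct
import sys

GGUF_MAGIC = b"GGUF"


def parse_gguf_header(head: bytes) -> dict:
    """Parse the fixed GGUF header fields (all little-endian).

    Layout assumed here (GGUF v2 and later): 4-byte magic, uint32 version,
    uint64 tensor count, uint64 metadata key/value count.
    """
    if len(head) < 8 or head[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file (missing 'GGUF' magic)")
    (version,) = struct.unpack_from("<I", head, 4)
    info = {"version": version}
    if version >= 2 and len(head) >= 24:
        info["tensor_count"], info["metadata_kv_count"] = struct.unpack_from("<QQ", head, 8)
    return info


def read_gguf_header(path: str) -> dict:
    """Read just the first 24 bytes of a model file and parse them."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(24))


if __name__ == "__main__":
    # Usage: python check_gguf.py path\to\model.gguf [more files...]
    for p in sys.argv[1:]:
        try:
            print(p, read_gguf_header(p))
        except (OSError, ValueError) as exc:
            print(p, "->", exc)
```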

GPU acceleration on Windows: what actually works in 2025

- DirectML via ONNX Runtime (cross-vendor): Microsoft’s DirectML Execution Provider in ONNX Runtime can accelerate inference on any DirectX 12-capable GPU (NVIDIA, AMD, Intel). If a tool you use supports ONNX/DirectML, you’ll get broad hardware coverage on Windows.

- NVIDIA CUDA (Linux toolchains on Windows): Some advanced frameworks remain Linux-first. If you want those with full CUDA speed, run them under WSL2 with NVIDIA’s CUDA driver for WSL2. It’s well-documented and supports popular CUDA libraries.

Bottom line: For mainstream Windows workflows, LM Studio and Ollama are easiest. If you need Linux-native stacks (TensorRT-LLM, bespoke pipelines), WSL2 + CUDA is the reliable path.
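If you use ONNX Runtime directly, you opt into DirectML by listing its execution provider when creating a session. This sketch assumes the onnxruntime-directml package, which registers DmlExecutionProvider. choose_providers is a hypothetical helper that keeps whichever GPU providers are installed, in an assumed preference order, and always ends with the CPU fallback; model.onnx is a placeholder path.

```python
# Preference order is an assumption: GPU providers first, CPU as the fallback.
GPU_PROVIDERS = ["DmlExecutionProvider", "CUDAExecutionProvider"]


def choose_providers(available: list[str]) -> list[str]:
    """Keep the installed GPU providers in preference order, then add the CPU fallback."""
    chosen = [p for p in GPU_PROVIDERS if p in available]
    chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed (e.g. pip install onnxruntime-directml)")
    else:
        providers = choose_providers(ort.get_available_providers())
        print("Providers:", providers)
        # With a real model file ("model.onnx" is a placeholder):
        # session = ort.InferenceSession("model.onnx", providers=providers)
```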

Picking the right model (and size)

- Start small, scale up: A good starter is a 3B–7B Instruct model (e.g., Qwen-2.5-Instruct 7B, Mistral-7B-Instruct, Phi-4-Mini). Move to Llama-3.1 8B or Gemma-2 9B if you have ≥16 GB VRAM. For coding-specific tasks, check out our guide to the best local LLMs for coding. Library pages for Llama-3.1 and other families (such as the DeepSeek-V3.1 page referenced in our review) list available sizes and tags, and the models are easy to pull with Ollama or LM Studio.

- Quantization matters: Smaller quantized builds (e.g., 4-bit GGUF variants) slash VRAM usage with minimal quality loss for casual chat. That’s why GGUF is so popular for local inference.

- Context window vs. speed: Long contexts need more memory. If responses get sluggish, reduce max tokens/context, or choose a more compact model. The VRAM-first guidance from recent Windows AI testing is a good compass.
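Long contexts cost memory mainly because of the attention key/value (KV) cache, which grows linearly with context length. This sketch computes its size as 2 (K and V) × layers × KV heads × head dimension × tokens × bytes per element. The Llama-3.1 8B shape used here (32 layers, 8 KV heads, head dimension 128) and the FP16 cache are assumptions about a typical setup; runtimes that quantize the cache will use less.

```python
def kv_cache_bytes(n_ctx: int, n_layers: int, n_kv_heads: int, head_dim: int,
                   bytes_per_elem: int = 2) -> int:
    """Memory for the attention key/value cache.

    Two tensors (K and V) per layer, each n_ctx x n_kv_heads x head_dim.
    bytes_per_elem=2 assumes an FP16 cache.
    """
    return 2 * n_layers * n_kv_heads * head_dim * n_ctx * bytes_per_elem


# Assumed shape of Llama-3.1 8B (grouped-query attention with 8 KV heads).
LLAMA31_8B = dict(n_layers=32, n_kv_heads=8, head_dim=128)

if __name__ == "__main__":
    for ctx in (4096, 8192, 32768, 131072):
        gib = kv_cache_bytes(ctx, **LLAMA31_8B) / 2**30
        print(f"{ctx:>6} tokens -> {gib:5.2f} GiB of KV cache")
```

Under those assumptions, an 8K-token context needs about 1 GiB of KV cache on top of the weights, while the full 128K window needs about 16 GiB, which is why trimming context is such an effective fix.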

Performance & stability tips

- Mind disk space: Models can be tens to hundreds of GB. Ollama’s Windows docs explain how to move binaries/models off C: to another drive.

- Stay within VRAM: If you’re stalling or paging, pick a smaller/quantized model or shorten prompts/context. Recent Windows tests show performance cliffs once you exceed VRAM.

- Prefer “Instruct” models for chat. “Base” models aren't tuned for conversation and tend to continue your text rather than answer it.

- Use a proper power plan & updated GPU drivers. Prevent throttling and make sure DX12/CUDA stacks are current (especially if using WSL2 + CUDA).
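Before pulling a large model, a quick free-space check on the target drive avoids a download that fails partway. This sketch uses Python's shutil.disk_usage; the 5 GB safety margin is an arbitrary assumption, and the path you check should be on the drive where your models are stored.

```python
import shutil
from pathlib import Path


def free_gb(path) -> float:
    """Free space (GB, 10**9 bytes) on the drive that holds `path`."""
    return shutil.disk_usage(path).free / 1e9


def has_room(path, model_gb: float, margin_gb: float = 5.0) -> bool:
    """True if the drive can take a model of model_gb plus a safety margin (assumed 5 GB)."""
    return free_gb(path) >= model_gb + margin_gb


if __name__ == "__main__":
    target = Path.home()  # replace with the folder that holds your models
    print(f"Free space for {target}: {free_gb(target):.1f} GB")
    print("Room for a 40 GB model:", has_room(target, 40))
```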

Troubleshooting

- “Where did my models go?” On Ollama, they’re under your user directory by default; Windows docs cover changing locations.

- Open WebUI won’t start? Make sure you're on Python 3.11 and that you launched it with

open-webui serve

(the package was renamed; follow the current docs).

- Slow responses on CPU: That’s expected with larger models. Try a 1B–3B GGUF or move to a GPU-backed setup (DirectML or WSL2 + CUDA, depending on your stack).

Is WSL2 worth it in 2025?

Yes, if you want Linux-native tooling with full NVIDIA CUDA performance on a Windows machine. Microsoft and NVIDIA both publish active guides for enabling CUDA inside WSL2, and many advanced LLM stacks assume Linux. For most users though, LM Studio or Ollama + Open WebUI on native Windows is plenty.

Final picks

- Non-technical users: LM Studio → Download → Discover → Run.

- Developers & hobbyists: Ollama (CLI + API) + Open WebUI (UI).

- Tinkerers: llama.cpp + GGUF, optionally via Text-Generation-WebUI.

With the right model size for your VRAM, a modern Windows PC can deliver fast, private LLMs, no cloud required.

Frequently Asked Questions

Which local LLM tool is easiest to use on Windows?

LM Studio is the most user-friendly option for Windows users. It provides a graphical interface, one-click model downloads, and doesn't require any command-line knowledge. Just download the Windows app, pick a model, and start chatting.

Do I need a GPU to run local LLMs on Windows?

While not strictly required, a GPU with at least 8GB VRAM is recommended for good performance. You can run smaller models (3B-7B parameters) on CPU, but responses will be slower. Modern GPUs like the RTX 3060 (8GB) or better provide the best experience.

How much VRAM do I need for local LLMs?

For entry-level use, 8-12GB VRAM lets you run 3B-7B parameter models. 16-24GB VRAM supports larger 8B-14B models. As a rule of thumb, you need about 1.2× the model's file size in VRAM for optimal performance.

Which Windows version do I need?

Windows 10 (recent builds) or Windows 11 are recommended. Both support modern GPU acceleration via DirectML and CUDA. Windows 11 offers better WSL2 integration if you need Linux-based tools.

Can I run these tools completely offline?

Yes, all three recommended tools (LM Studio, Ollama, and llama.cpp) work completely offline once models are downloaded. This makes them ideal for privacy-focused users and environments without internet access.

What is the difference between "instruct" and "base" models?

- Instruct models are fine-tuned for conversation and following instructions. They are the best choice for chatbots and general Q&A. You should almost always start with an "instruct" or "chat" version of a model.

- Base models are the raw, foundational models that are trained on a massive dataset of text. They are good at completing text but are not inherently conversational. They are primarily used as a foundation for further fine-tuning.

How do I check how much VRAM I have on Windows?

You can easily check your VRAM in the Task Manager:

- Press Ctrl + Shift + Esc to open the Task Manager.

- Go to the Performance tab.

- Click on your GPU in the left panel.

- Look for the Dedicated GPU Memory value. This is your VRAM.
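On NVIDIA cards you can also read total VRAM from a script with nvidia-smi, which ships with the driver. This sketch calls it with its --query-gpu and --format options and parses the per-GPU values, which are reported in MiB. It is NVIDIA-only; for AMD or Intel GPUs, Task Manager remains the simple route.

```python
import shutil
import subprocess


def parse_vram_mib(csv_text: str) -> list[int]:
    """Parse 'memory.total' output (csv,noheader,nounits): one MiB value per GPU line."""
    return [int(line.strip()) for line in csv_text.splitlines() if line.strip()]


def nvidia_vram_mib() -> list[int]:
    """Total VRAM per NVIDIA GPU in MiB; empty list if nvidia-smi isn't on PATH."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_vram_mib(out)


if __name__ == "__main__":
    gpus = nvidia_vram_mib()
    if not gpus:
        print("nvidia-smi not found; check VRAM in Task Manager instead.")
    for i, mib in enumerate(gpus):
        print(f"GPU {i}: {mib / 1024:.1f} GiB")
```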

Can I use my AMD or Intel GPU for local LLMs?

Yes, absolutely. While NVIDIA GPUs with CUDA have historically had the best support, the situation has improved significantly.

- LM Studio and Ollama both support many AMD GPUs (Ollama through ROCm, LM Studio through its Vulkan-based runtime), and LM Studio's Vulkan runtime can also use many Intel GPUs. Check each tool's current hardware list for your exact card.

- llama.cpp also supports various GPU backends, including OpenCL and Vulkan, which can leverage AMD and Intel hardware.

For the best experience, make sure your graphics drivers are up to date.